Here Is the Official "AMD Radeon VII: World's First 7nm Gaming GPU" Reveal Trailer

- Thread starter cageymaru

"The 16GB makes no sense. Is it an architecture limitation, like they HAD to ship it with 16GB if they wanted that many compute units or something like that?"

A lot of not-so-tech-minded people I know used to believe, and some still do, that a card with more memory was better than a card with less memory, regardless of what chip was on it. Might just be that AMD wants to point and gesticulate about how much RAM they have.

"Sounds decent but I'll reserve judgement for when the [H] review comes out. I don't believe anything AMD or nVidia (or any other tech company) say about their stuff any more. Let me see the independent reviews."

I'm hoping for the best and expecting the worst. Would be pretty great if real-world numbers match or exceed the 2080.

One thing I'm a bit suspicious about: with the last review from [H] about VRAM usage, DX12 and ray tracing... if AMD put 16GB of HBM on this card, and, if I'm not mistaken, double the ROPs, I think they're on to something here. Pretty sure they have ray tracing in mind too. They have talked about intrinsic shaders and Rapid Packed Math (shown in a slide, Ubisoft). I think we're in for a surprise here... I'm just speculating.

What a bummer, 2080 performance at 2080 prices without the 2080 feature set.

Only people who would really be interested in such a card are the people who need the 16GB of ram.

You mean like the feature of the 2080Ti bursting into flames? nVidia can keep that feature to themselves.

Thatguybil

Limp Gawd

- Joined: Jan 21, 2017
- Messages: 173

Who else thought they were watching a trailer for a standalone "Flash" superhero movie?

You need 16 GB based on the bandwidth requirements. To do 8 GB and have the same bandwidth you would need either faster memory, a wider bus, or memory chips that were half as dense.

It would not surprise me if we eventually saw a cut-down Radeon "5" that has 8 GB and 56 compute units and a Radeon "9" that has the full 64 compute units with 16 GB.

*edit I can't type*
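The bandwidth argument above can be sketched with some quick arithmetic. This is only a rough illustration, not AMD's published math. It assumes each HBM2 stack exposes a 1024-bit interface running at about 2.0 Gbps per pin, and that the 16 GB card uses four 4 GB stacks. The function name and the 8 GB configurations are hypothetical.

```python
def hbm_bandwidth_gbs(stacks: int, gbps_per_pin: float, bits_per_stack: int = 1024) -> float:
    """Peak bandwidth in GB/s: total bus width (bits) x per-pin rate (Gbit/s) / 8."""
    return stacks * bits_per_stack * gbps_per_pin / 8


def capacity_gb(stacks: int, gb_per_stack: int) -> int:
    """Total VRAM is simply stacks x capacity per stack."""
    return stacks * gb_per_stack


# Assumed 16 GB layout: four 4 GB stacks at 2.0 Gbps per pin.
print(capacity_gb(4, 4), hbm_bandwidth_gbs(4, 2.0))  # 16 1024.0

# Hypothetical 8 GB card built from two of the same stacks: half the bus, half the bandwidth.
print(capacity_gb(2, 4), hbm_bandwidth_gbs(2, 2.0))  # 8 512.0

# Hypothetical 8 GB card that keeps four stacks by using half-density (2 GB) stacks.
print(capacity_gb(4, 2), hbm_bandwidth_gbs(4, 2.0))  # 8 1024.0
```

Under these assumptions, dropping to two stacks halves the bus width, so matching the four-stack bandwidth at 8 GB would take memory twice as fast or stacks that are half as dense.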

Considering most were predicting a Navi announcement with 1080-class performance and instead they announced a GPU with 1080 Ti-class performance, people will be upset about anything. AMD is finally getting back on track and offering Nvidia some competition; Nvidia only has one card that can outperform it, at double the price, not including ray tracing. Maybe Navi is getting retooled for ray tracing, but it was hinted that something else was in the works for this year.

What's interesting is that a lot of the people who complain are doing it for the sake of complaining, and to the other half "competitive" means +50% of 2080 Ti performance in the $350 price range. Some of you guys do not make any sense. The bandwidth alone of those 16 GB makes this thing attractive.

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,939

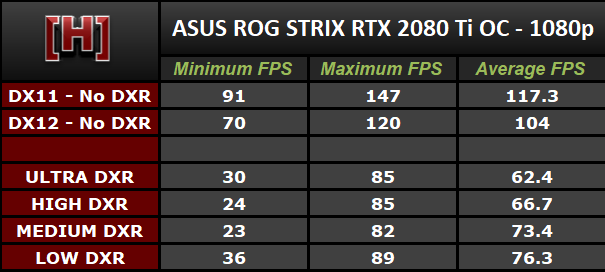

After the updates the 2080 does fine with RTX fps. I mean, you aren't going to get 4k performance, but reasonable 2k isn't out the window; according to all the benchmarks, the 2080ti gets 60-90fps now at 1080p, and the 2060 apparently targets 60fps or greater at 1080p with RTX on low. It's new, so really without DLSS it's hard to quantify.

OMG, 2k, or as it's colloquially known, 1080p. You mean it can do 1080p at 60 fps with barely any ray tracing in the scene? Look at all the GTX 970s shaking in their boots. At least it's cheap like the GTX 970..... oh wait.

"The 16GB makes no sense. Is it an architecture limitation, like they HAD to ship it with 16GB if they wanted that many compute units or something like that?"

HBM works in stacks of 4 and each stack has to be the same. They probably could have gimped the card with 12GB of RAM, but because of the complexity in producing the stacked memory it probably would have cost more to gimp it than to ship it as is. That being said, this is the consumer version of one of their data center cards, where I can assure you 16GB of RAM is sufficient but not great. My Quadros are running 24GB apiece and they sit at 70+% memory utilization at any point, so on that front 16GB is insufficient.

RTX cards need 16GB to do ray tracing, which is why BFV is a stutterfest on RTX cards with 8GB. Textures in general are eating up well over 6GB and that is only going to get worse. The price is shit but the VRAM is adequate. They should come out with a 52 CU Radeon 7 version with 8GB for $500 to appease a wider market. Not sure if it's worth the cost, because 7nm with Vega had to be expensive, but they need more market share.

"according to all the benchmarks, the 2080ti gets 60-90fps now at 1080p,"

[H] numbers say 30fps is the low.

I'm not paying $1300 to play on a 2007 resolution and have it slow down to 30fps. That's insanity.

lostin3d

[H]ard|Gawd

- Joined: Oct 13, 2016
- Messages: 2,043

If this has the clock speeds to match a 2080 it might actually outperform in a number of games at 4k with that 16GB. It's a well proven fact that even without ray tracing there are many games now that can exceed 8GB at 4k.

Ocellaris

Fully [H]

- Joined: Jan 1, 2008
- Messages: 19,077

If this has the clock speeds to match a 2080 it might actually outperform in a number of games at 4k with that 16GB. It's a well proven fact that even without ray tracing there are many games now that can exceed 8GB at 4k.

If the card was going to beat a 2080Ti they would have shown that in the slides

lostin3d

[H]ard|Gawd

- Joined: Oct 13, 2016
- Messages: 2,043

"If the card was going to beat a 2080Ti they would have shown that in the slides"

Not 2080TI 2080

Ocellaris

Fully [H]

- Joined: Jan 1, 2008
- Messages: 19,077

"Not 2080TI 2080"

Oh lol. I read “2080 it” as 2080 ti

Unknowns: Power consumption. It's 7nm so power might be comparable to nvidia this time around but we'll have to see.

Dual 8-pin power connector indicates to me that this will hardly help slow down climate change...

2080ti has dual 8 pin

funkydmunky

2[H]4U

- Joined: Aug 28, 2008
- Messages: 3,871

"Gonna try to follow up with both of your comments with one of my own. I'm looking at this from a 3D artist and rendering perspective, and based on a tentative analysis of the specs (or lack thereof in this case) AMD is clearly not in the market for GPU rendering because it requires CUDA cores, so their intent is pretty clear they're not interested. Which is understandable but troubling nonetheless, because Nvidia will continue to have monopolistic control in this market. And unlike the severe amount of negativity RTX is getting with their raytracing engine, when it comes to GPU rendering, this is where it excels, and if these early test benchmarks from Chaosgroup's VRay are any indication, then it's going to be an attractive seller.

"Flipping the script back to gaming, and admittedly I'm only a light gamer these days so have no skin in the game. But anyways, if the performance is only marginally better than the 2080, and 90% of gamers won't ever take full advantage of 16GB of VRAM, and they're pricing it exactly the same as the already overpriced 2080, with no CUDA cores, then unfortunately in this humble mother fucker's opinion AMD dropped the ball, especially with the pricing. Unless they were to set the floor at $599 (and I could argue all day even $499 would be more attractive) then it's going to be difficult to convince the hobbyist gamer or loyalist to jump ship.

"At the end of the day nobody is going to care about watts or any, gamers are going to speak with their wallets, and sadly for me I have no choice but to play Nvidia's long-term strategy, because I would love nothing more than to give them the bird after their arbitrary NDAs and forced GeForce program scandal. This is what happens in a capitalistic system where there is no competition: progress stagnates and consumers lose to corporate interests and their shareholders."

AMD renders just fine. What apps do you use that are Cuda only?

2080ti has dual 8 pin

Ok, and? They’re not going to do dual 8-pin unless it needs dual 8-pin. It will still be a power hungry GPU. That’s my point.

Blade-Runner

Supreme [H]ardness

- Joined: Feb 25, 2013
- Messages: 4,366

Please don't suck.

Please don't suck.

Please don't suck.

Not holding my breath considering how mediocre AMD's offerings have been for the past decade, but I REALLY want them to kick Nvidia in the balls this generation given their recent price gouging and poor QA.

As I understand it:

Pros - Slightly cheaper than a 2080, equivalent performance, double the RAM.

Cons - No RTX, no CUDA.

My Concerns: 16GB of HBM2 (if it's anything like Vega) suggests limited availability and that prices won't change much.

Unknowns: Power consumption. It's 7nm so power might be comparable to nvidia this time around but we'll have to see.

Other thoughts: Might be a good machine learning card vs the RTX 2080 with all the RAM and raw processing power; depends how much the tensor cores help a given problem. Though you'll have to use custom AMD builds of TensorFlow and may have less or no support in other frameworks.

AMD renders just fine. What apps do you use that are Cuda only?

VRay

Ok, and? They’re not going to do dual 8-pin unless it needs dual 8-pin. It will still be a power hungry GPU. That’s my point.

What point do I need to make? Did you make an asinine comment about 2080ti's not going to help the climate?

funkydmunky

2[H]4U

- Joined: Aug 28, 2008
- Messages: 3,871

"VRay"

Well then you chose the correct GPU, being that it is Nvidia-only software.

This varies from your opinion where you blame AMD or more importantly declare AMD unable to render. "AMD is clearly not in the market for GPU rendering because it requires CUDA cores, so their intent is pretty clear they're not interested."

AMD has no control over software design in which a company chooses to support only Nvidia's proprietary Cuda. You do see the difference I hope.

AMD GPUs have traditionally been quite strong in compute.

And they still are; the difference is that RTX is a lot better than previous generations.

That makes Vega a bit less of an architecture by comparison. Radeon VII at least has more than 8GB of VRAM compared to the RTX 2080, which is probably its only strong side.

What point do I need to make? Did you make an asinine comment about 2080ti's not going to help the climate?

A sense of humour would do great things for you. Life’s too short.

lostin3d

[H]ard|Gawd

- Joined: Oct 13, 2016
- Messages: 2,043

Oh lol. I read “2080 it” as 2080 ti

No problem. I'm sure I've made the same mistake in other threads.

cptnjarhead

Crossfit Fast Walk Champion Runnerup

- Joined: Mar 9, 2010
- Messages: 1,669

Sounds like a beast.... but they always do before people get some real numbers. RTX (new physx) tech is just not exciting to me so if AMD delivers... might go red team next time, we shall see.

Don't see why people are complaining. Vega 7 is a pretty good card, you will see the reviews shortly.

Pretty sure it's overstock of the MI60. Basically a stop-gap card till Navi comes out. The Big Navi they have promised us in 2020 will be going into production soon.

Problem with Vega was always power, it couldn't maintain high clocks because it would go over its power budget. I figure the 7nm process not only allows higher clocks, but much better efficiency. Doubling the memory bandwidth always helps as well.

I'd complain about it not having RTX, but only one game even has that feature so not like I'm missing anything, besides I play at 4k.

"Sounds decent but I'll reserve judgement for when the [H] review comes out."

Exactly. In [H] we trust. I can't remember the last time I bought a major PC component that hadn't been reviewed here.

"Pretty sure it's overstock of the MI60. Basically a stop gap card till Navi comes out. The Big Navi they have promised us in 2020, will be going into production soon."

Let's just hope Navi is as good as people are saying and they're just trying to dump all Vega chips before they release Navi later this year.

Interesting that it only has 60 instead of 64 compute units. 64 compute units would have been the absolute maximum they could have for the GCN architecture (which is limited architecturally to 4096 stream processors, i.e. 64 compute units of 64 each).

I doubt the cards were designed this way; more likely the Radeon VII GPUs are partially defective full dies.

With this in mind, since they couldn't ship them to the professional market without announcing a new product, they instead decided to make a consumer GPU with them.

That's my theory of why they have 16GB of RAM: they are basically the professional 7nm Vega cards that didn't make the cut.
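For the 60 versus 64 compute unit figures discussed above, a quick sanity check may help. This is a minimal sketch that assumes 64 stream processors per GCN compute unit; the constant and function names are made up for illustration.

```python
SP_PER_CU = 64  # assumed stream processors per GCN compute unit


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a given number of enabled compute units."""
    return compute_units * SP_PER_CU


print(stream_processors(64))  # 4096, the full die
print(stream_processors(60))  # 3840, four compute units disabled
```

So the 4096 ceiling refers to stream processors, and a 60 CU part would leave 256 of them disabled, which fits the salvaged-die theory without proving it.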

1Nocturnal101

Gawd

- Joined: Jan 27, 2015
- Messages: 520

[H] numbers say 30fps is the low.

I'm not paying $1300 to play on a 2007 resolution and have it slow down to 30fps. That's insanity.

Which benchmarks and when? They did patches, drivers, and optimizations. I wasn't aware they retested, because that sounds like the original numbers from when it was first released.

Also, tessellation was the exact same way when it released.

These are prosumer features on prosumer products. If you are crying about the price then you were never the intended market. People are acting like this exact scenario hasn't played out a dozen times before within gaming...

You can buy the cards and use or ignore the RTX features, or you can buy AMD. You have a choice.

https://www.hardocp.com/article/201..._nvidia_ray_tracing_rtx_2080_ti_performance/3

1Nocturnal101

Gawd

- Joined: Jan 27, 2015
- Messages: 520

So is there a possibility they are 1% lows?

There's a possibility that I'm a bored billionaire.

"There are wild swinging differences in framerate depending where you are in the map. The minimums are not exactly welcoming."

They came to the conclusion that RTX just doesn't work well, at least in BFV. This is using the ASUS ROG STRIX RTX 2080 Ti OC, mind you. Not a 2070, a 2080 or even a regular 2080 Ti; it's an OC model. $1300 for 23, 24, 30 fps minimums. I don't want it to drop to shit frames every minute and a half (~1/100), if it really is only 1% lows.

If you find that acceptable, that's fine.
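Since the argument above turns on whether those minimums are absolute minimums or 1% lows, here is a rough sketch of how a 1% low can be computed from frame times. Definitions vary between reviewers. This one assumes "1% low" means the average FPS over the slowest 1% of frames, and the sample capture is invented, not taken from the [H] review.

```python
from typing import Sequence


def one_percent_low_fps(frame_times_ms: Sequence[float]) -> float:
    """Average FPS over the slowest 1% of frames (always at least one frame)."""
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, len(slowest_first) // 100)
    worst = slowest_first[:count]
    return 1000.0 / (sum(worst) / len(worst))


# Hypothetical capture: 990 frames at 60 fps plus 10 hitches at 30 fps.
frame_times = [1000 / 60] * 990 + [1000 / 30] * 10
average_fps = 1000.0 * len(frame_times) / sum(frame_times)

print(round(average_fps, 1), round(one_percent_low_fps(frame_times), 1))  # 59.4 30.0
```

In this made-up capture the average barely moves while the 1% low drops to 30 fps, which is why a published minimum reads very differently depending on which definition a review uses.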
