SonDa5

Supreme [H]ardness

- Joined

- Aug 20, 2008

- Messages

- 7,437

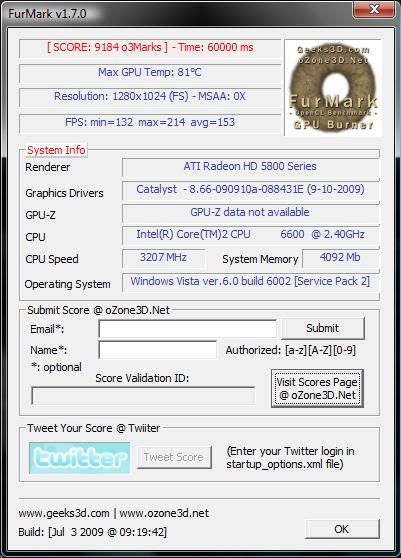

Post some screenshots and info on FurMark temps, along with system chipset temps, using HD 5000 series cards.

I don't know anyone who uses Furmark as a performance benchmark, but they do use it to check overclocks to see if they're stable.

Yeah, it is quite useless as a benchmark because it is so specific. But for the purposes I outlined, the score could be useful. It's also one of the few programs able to leverage the 'theoretical' computational advantage RV770 has over G200: my GTX260 @ 837/1674/1296 (I don't run those clocks for gaming) produces similar results to a stock 4850.

EDIT: I should probably add that I never got 3DMark '06 to complete at those clocks, even though Vantage, Crysis et al were all fine. Street Fighter 4 also bombs out, so furmark stability is no guarantee. I've also had bad memory pass Memtest86.

Personally, I've never been one to judge cards on "theoretical" numbers because, as we all know, no programs take complete advantage of the available performance. But I'm also the kind of person who doesn't even have any version of 3DMark installed, because I'm not into synthetic performance numbers. I built this rig for gaming, and that's what I test with. But I know that not everyone feels the same, so I will never try to tell someone that their methods are wrong. As the old saying goes, to each their own. As far as testing for stability, I've never seen a program that stresses every single part of a card at the same time, so for testing, a wide selection should be used. That even goes for CPUs with IBT and Prime95. By the way, does Furmark even put a strain on the texture units, ROPs, or memory? I've been under the impression it was mainly for testing the shaders.

I'm interested in seeing scores too, to help assess whether or not gaming performance is being gimped by the 256-bit bus. With my old 4870 @ 850/1000 I got ~6000 (at 1280x1024 with no AA), so a score around 12k (since FurMark is shader-limited) would suggest it is somewhat constrained. 9-10k, however, would put it in line with other benchmarks and indicate it is more likely just early drivers. Only theory, but I think it has merit.
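For anyone who wants to plug their own numbers into that reasoning, here is a rough Python sketch of the arithmetic. The ~6000 baseline is the 4870 score quoted in the post; the 2x "full scaling" target is only an assumption read off the 6000 to 12k comparison, and the function names and the 0.1 tolerance are made up for illustration. It is a back-of-the-envelope check, not a real bandwidth analysis.

```python
# Baseline from the post: HD 4870 @ 850/1000, 1280x1024, no AA.
BASELINE_4870_SCORE = 6000


def scaling_vs_baseline(new_score, baseline=BASELINE_4870_SCORE):
    """Return how many times higher the new FurMark score is than the baseline."""
    return new_score / baseline


def interpret(new_score, baseline=BASELINE_4870_SCORE,
              full_scaling=2.0, tolerance=0.1):
    """Apply the post's rule of thumb to a FurMark score.

    full_scaling and tolerance are assumptions for this sketch:
    a ratio near 2x means the shader-limited test scales fully,
    so a smaller gain in games would point at the memory bus.
    """
    ratio = scaling_vs_baseline(new_score, baseline)
    if ratio >= full_scaling - tolerance:
        return "shaders scale fully; games may be bus-constrained"
    return "in line with other benchmarks; more likely early drivers"


if __name__ == "__main__":
    for score in (12000, 9500):
        print(score, round(scaling_vs_baseline(score), 2), interpret(score))
```

Swap in your own baseline and score if you ran different clocks or a different resolution, since the ratio only means something when both runs use the same settings.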

Yes, can you please re-run with the same settings but without AA? It should produce higher temps, plus on a personal level it will provide a useful comparison score. Also, could you try renaming the exe to see if they are still gimping their cards under Furmark?

Keep this in mind the next time you read any video card review.

There is always some type of benchmark being used to compare the performance of one video card to another. It might be a game showing frames per second or a "synthetic" benchmark like 3DMark06. In the big picture, the best video card will show the most performance over an array of different types of benchmarks.

Higher performance in these tests normally means much better gameplay. Of course, individual hardware drivers are important as well, but these tests help provide performance data.

For me, operating temperatures are important because I want my system temps as low as possible so that all of my hardware may enjoy the highest quality electrical power possible.

Done. With the exe renamed to FurMarker.exe

I wouldn't worry too much about the 256-bit bus; it isn't the only factor in performance.

AMD says that Furmark is a "power virus" because it strains everything and they really, really hate it. Whatever it does is unrealistic, since not even Crysis on maximum settings will hammer the GPU that much and provoke such high temperatures and power consumption.

The thing is, if you're into comparing 3DMarks with other people, then it's a reasonable app to use. But if you're a person such as myself who buys hardware strictly to have better performance in games, then it's not needed. When trying to figure out which card to buy for gaming, the only thing that makes sense to do is compare the actual gameplay performance in the games that you personally play. The biggest problem I have with 3DMark is that over the years, less informed gamers have come to think of it as the be-all and end-all tool. Then they go spreading that in forums and such. People should be fully informed on the subject, especially when paying high dollar amounts for the hardware.

Obligatory car analogy: running Furmark is like pushing the pedal to the metal in neutral gear. It helps you see if your engine will blow up or overheat at a certain RPM which you will never, ever reach while driving. (And then, of course, blaming the car manufacturer for introducing a rev limiter and demanding that it be removed, or removing it yourself.)

Say no to Furmark!

Right, and that is why I stated that the next time you read any video card review, you should pay attention to all the different types of tests done, from frames per second in games to "synthetic benchmarks" like 3DMark06.

It all adds up to performance data and the more the better in determining the performance of a video card.

I think they still detect the program with drivers and cut power to unnecessary parts to keep total temps down.

Got proof?

You don't seem to be getting the point I'm trying to make.

"Once again you will not find a video card review that doesn't use frames per second in games or synthetic benchmarks like 3DMark06."

I'm arguing that only games need to be used when reviewing cards for gamers. As far as not being able to find a review that doesn't use synthetics, did you forget about [H]'s policy on the issue? And even though other reviewers use them, that doesn't automatically make them relevant. Like I've said before, if you like to use it, then fine. I'm just saying that it should be made clear to everyone, especially people new to this hobby, that they shouldn't pay any more attention to it than to games. Just because a card scores higher in 3DMark and other synthetics doesn't mean it's a better card. The R600 and RV770 both score higher than their respective Nvidia counterparts, but real games show the exact opposite.