Archaea ([H]F Junkie, joined Oct 19, 2004, 11,825 messages)

I've used hardware G-Sync since around late 2017 with an Alienware AW3418DW ultrawide.

It has been phenomenal. I can always tell whether G-Sync or FreeSync is on or off. It really benefits my gaming experience, so much so that playing without it feels terrible, to the point that I don't want to play the game. On the occasions G-Sync got turned off I could tell immediately, and when a friend blind-tested me with G-Sync on and off I was 100% accurate, even in games running at high frame rates.

With hardware G-Sync on the Alienware I don't notice any issues down to the low-40 FPS range; it feels buttery smooth.

I recently switched to an LG C2 OLED, which is G-Sync Compatible. In Cyberpunk 2077 it feels WAY less smooth in the 40-50 FPS range than the Alienware does.

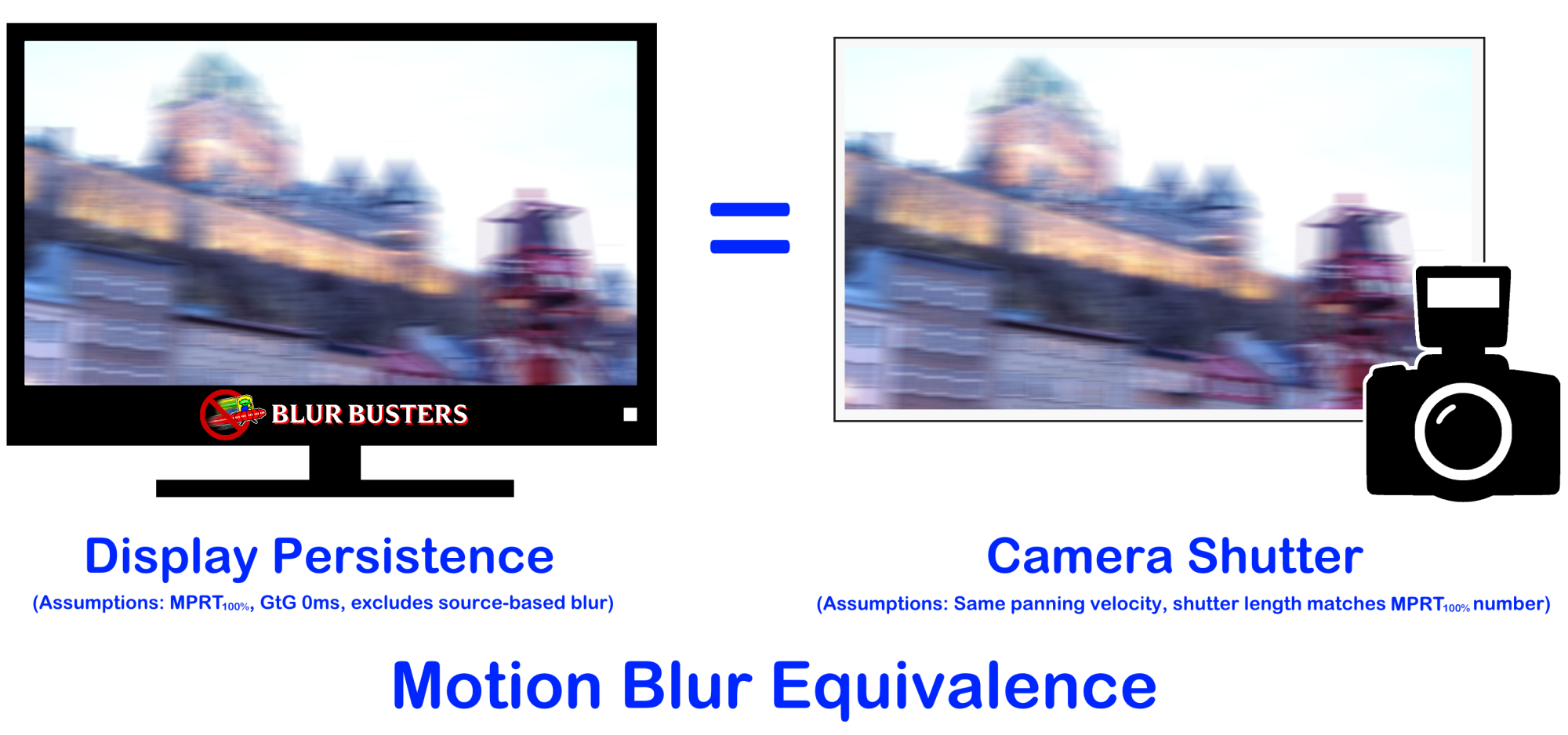

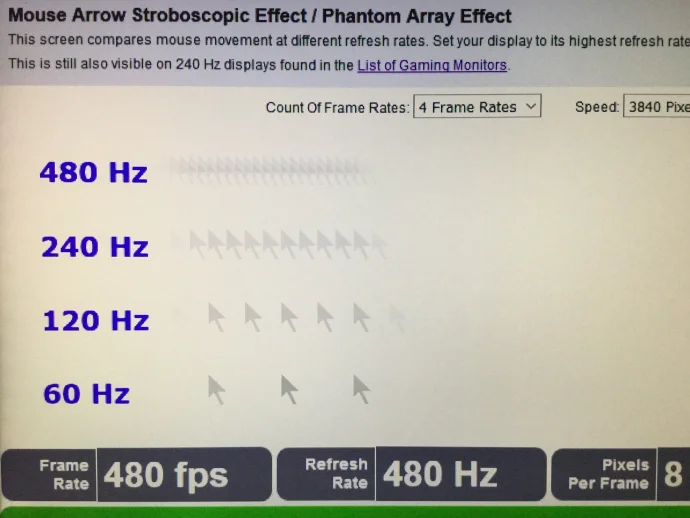

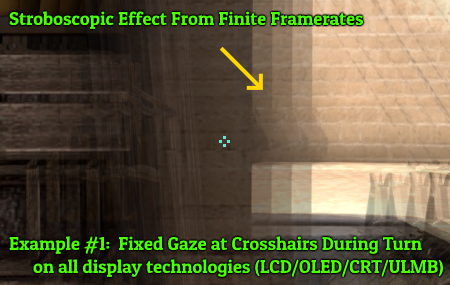

Why? Is this a known issue? Or is this just the difference in motion between a traditional IPS LCD and an OLED?

I'm confident this isn't in my head. I played a lot of Cyberpunk at 40-50 FPS on the Alienware, more than 100 hours back at launch, and found it perfectly acceptable. With DLSS the game felt fine both on my 1080 Ti (with reduced settings) and on my 3080 with settings maxed.

I recently switched to the 42" LG C2 OLED and upgraded my CPU (i7-6950X to i7-12700K) while keeping the same 3080. Because of the higher resolution, the game still runs at about the same 40-50 FPS at max settings. It doesn't feel smooth at all at these frame rates; it feels like G-Sync is NOT on, even though it's enabled in the Windows 11 settings and appears to be active, with the OLED's Game Optimizer showing a 119 FPS maximum refresh rate and VRR.

Granted, nearly two years of driver updates, Cyberpunk 2077 patches, Windows 10 vs. Windows 11, and HDR on vs. off all complicate a direct A/B comparison between my earlier playthrough and this one. But it just feels very different now than it did before, and not in a good way. Visually the game looks SOOOOOOOOOO much better on the OLED, with perfect blacks and HDR. But for feel, the Alienware with hardware G-Sync crushes the LG OLED on smoothness.

I guess I'll have to hook the Alienware back up, tune the settings to get back to that same 40-50 FPS, and see whether my memory serves me correctly. But I'm very particular about this, it's easy for me to notice subjectively, and I game a lot: this OLED just feels quite different at 40-50 FPS.

Is a difference between hardware G-Sync and G-Sync Compatible expected in the 40-50 FPS range?

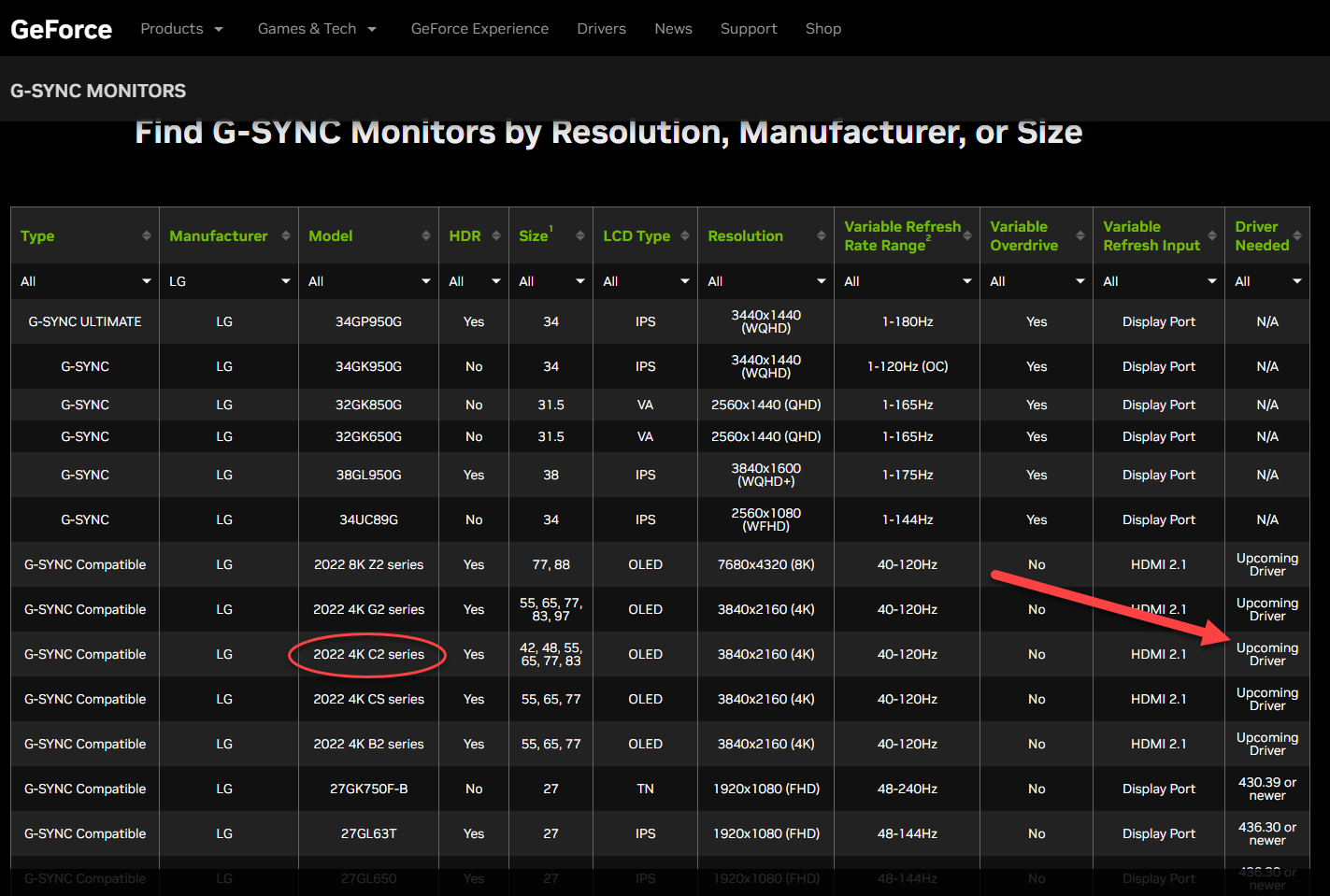

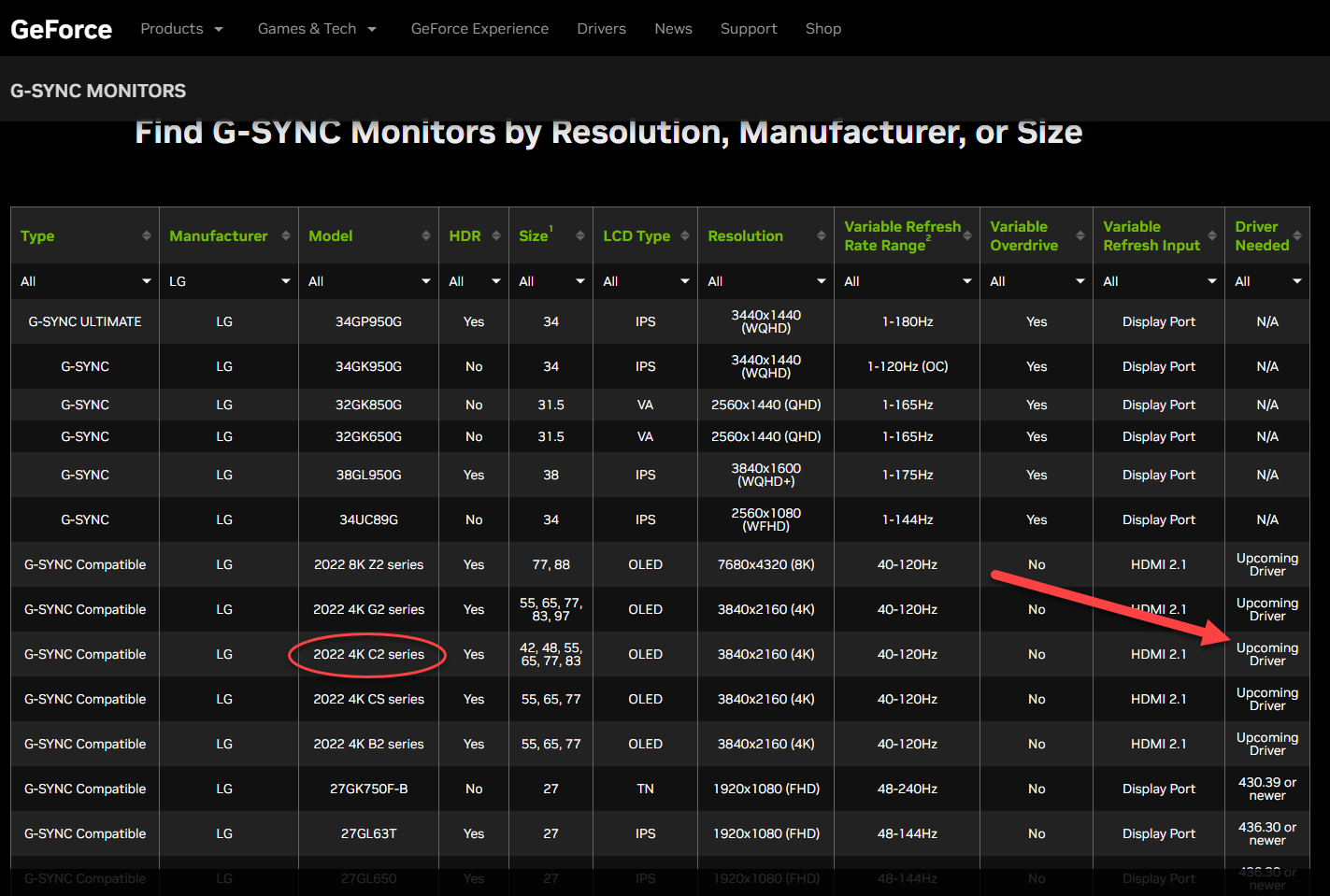

https://www.nvidia.com/en-us/geforce/products/g-sync-monitors/specs/

I notice NVIDIA's site says "upcoming driver." Is it possible that G-Sync isn't actually working correctly on the LG C2 yet?

I do have the latest NVIDIA driver as of this week.

Are there any settings on the LG OLED that I might be missing and need to enable?

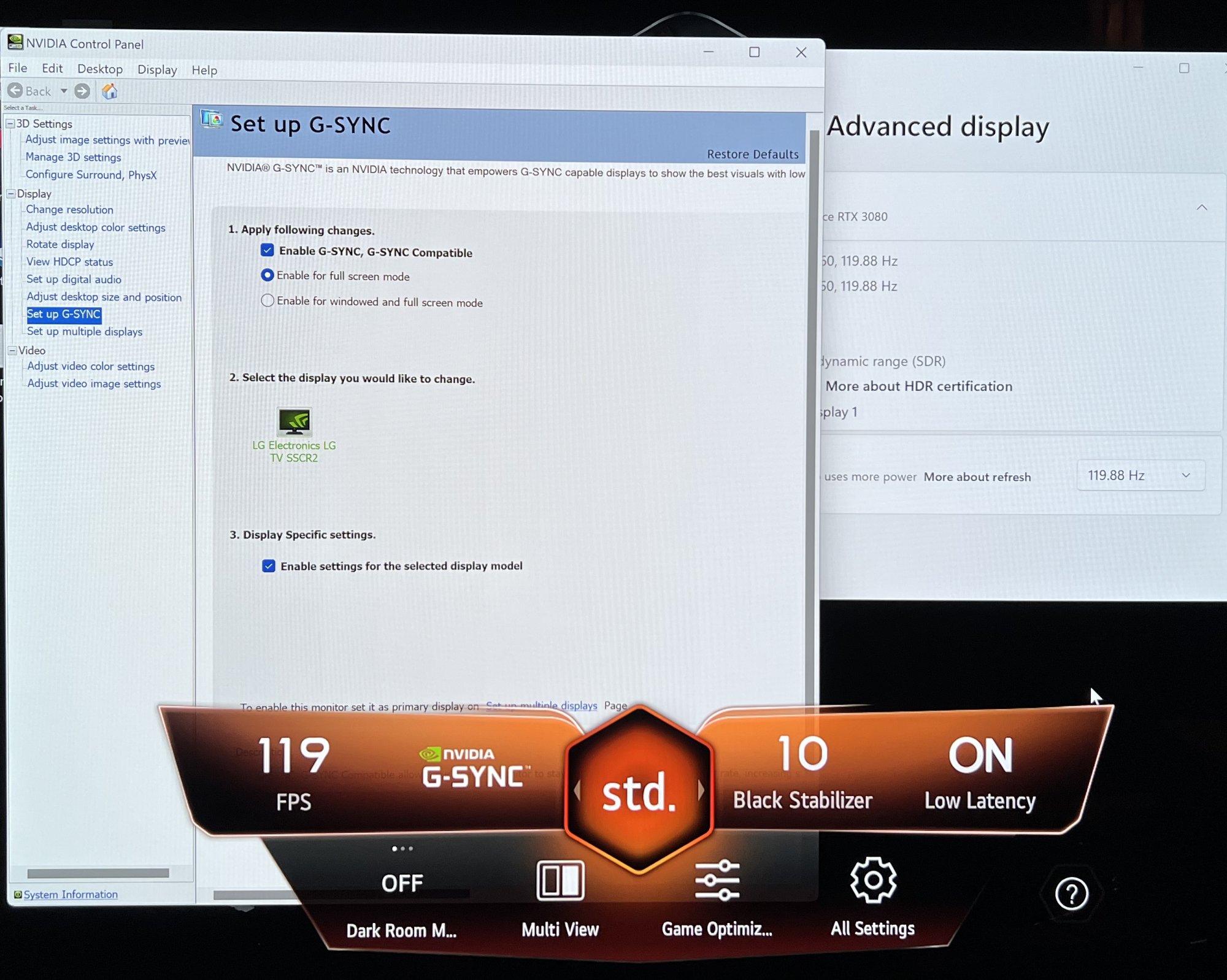

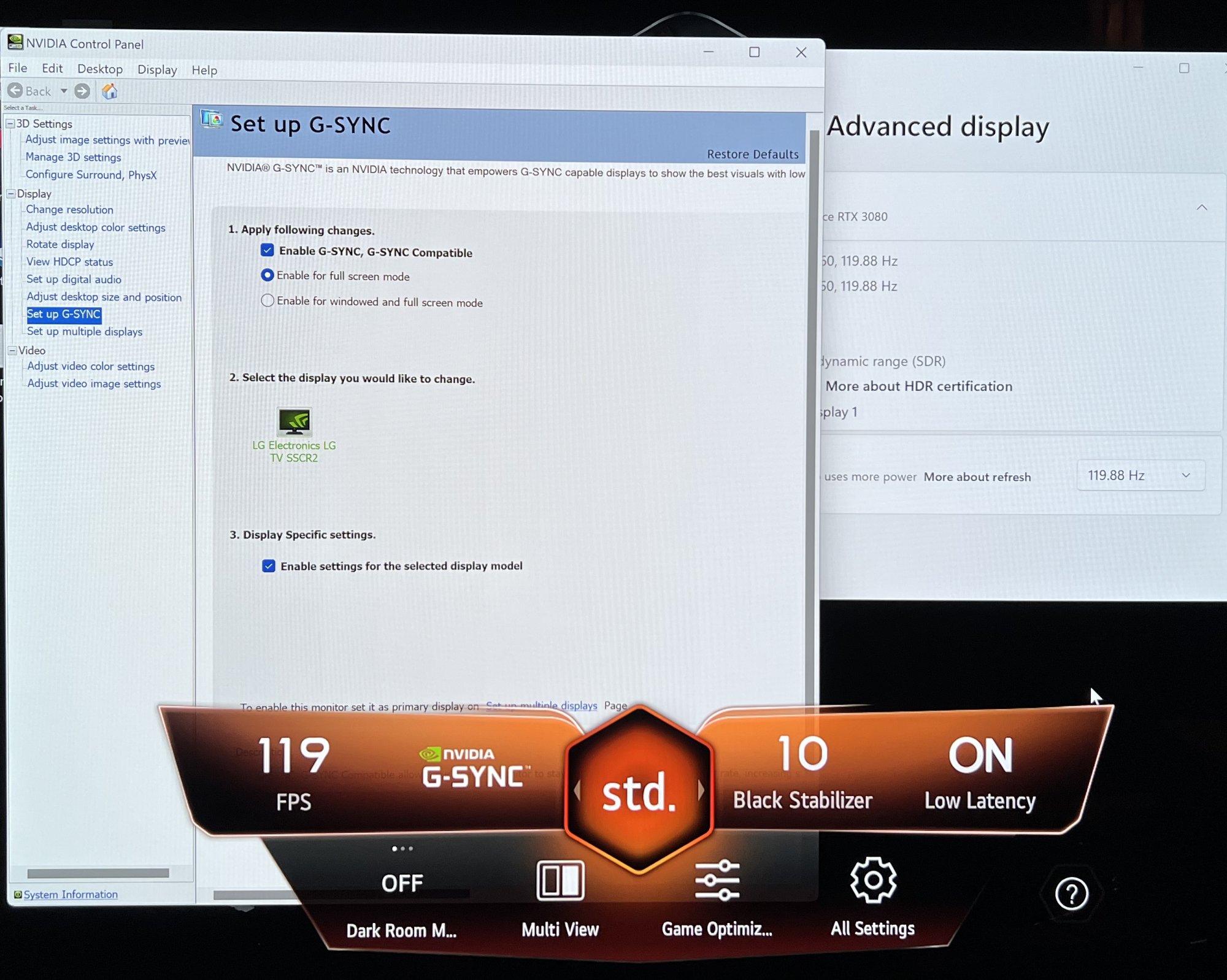

It says it’s working:
