wra18th

[H]F Junkie

- Joined

- Nov 11, 2009

- Messages

- 8,492

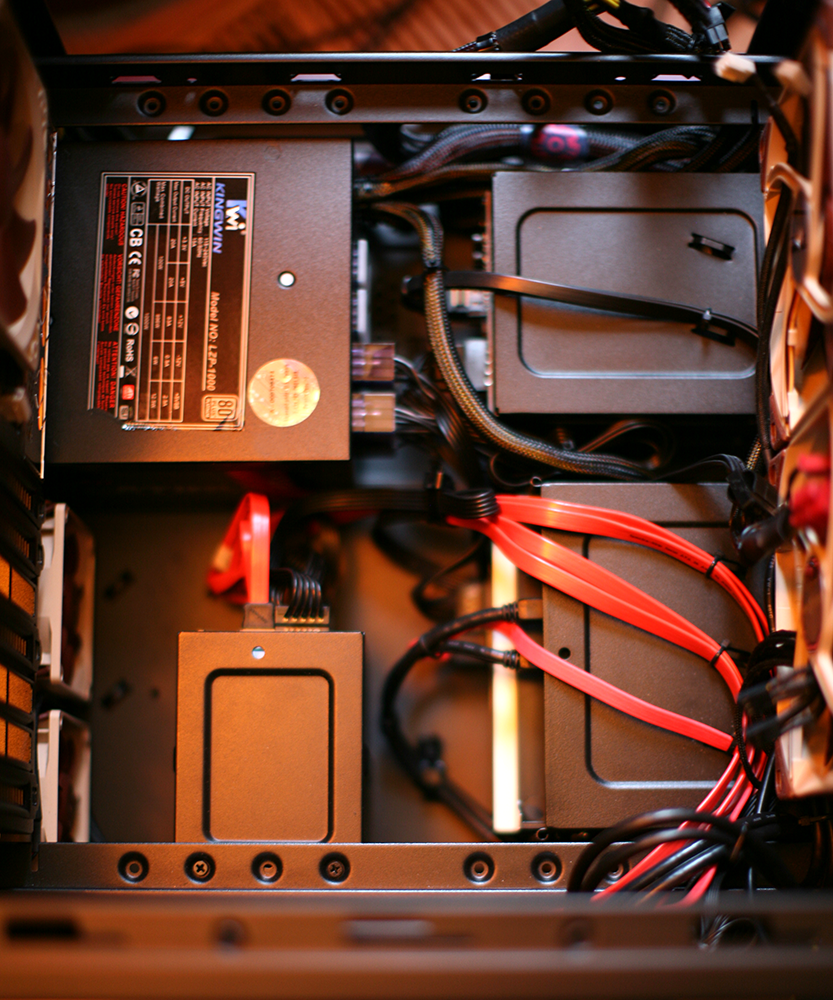

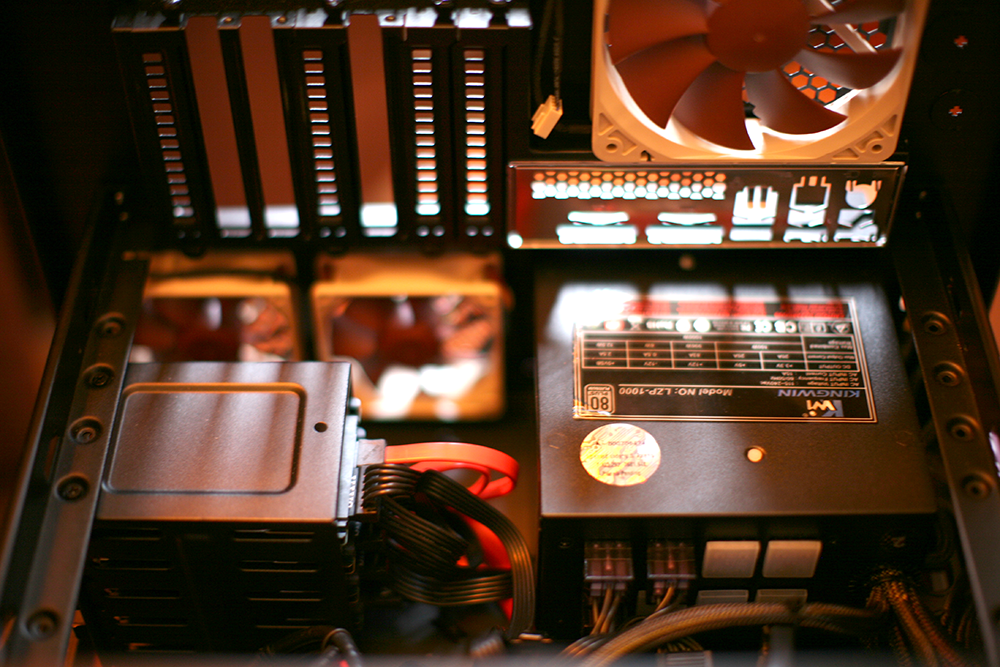

Looks like the twin towers of power!

Looks great. Your thread has me strongly considering a HAF XB for my next build.

Hi SonataSys,

Could you tell us how the system is running? Are you happy with it?

Thanks

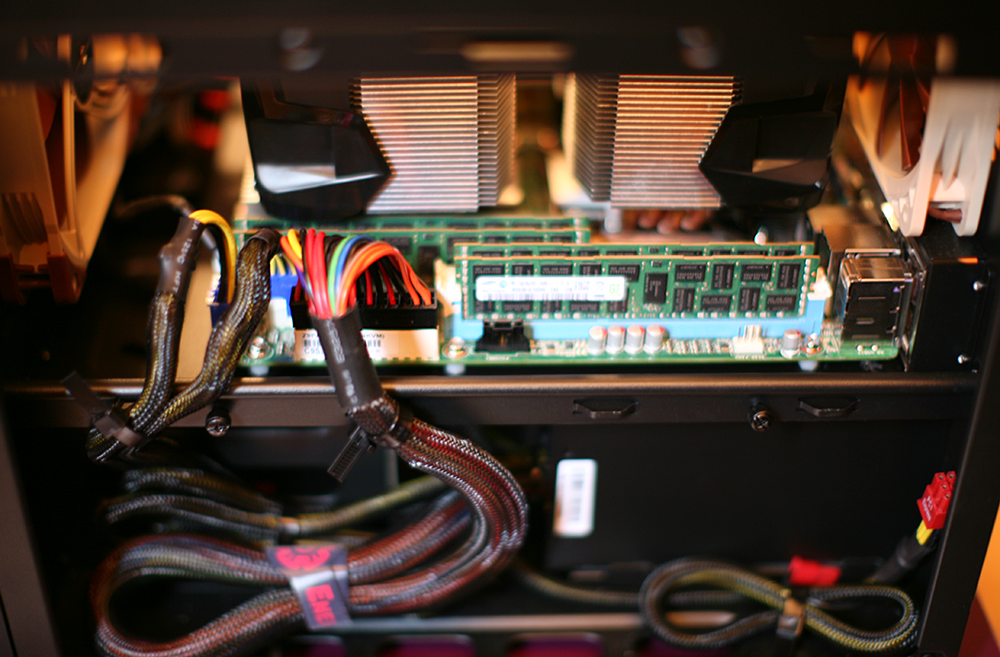

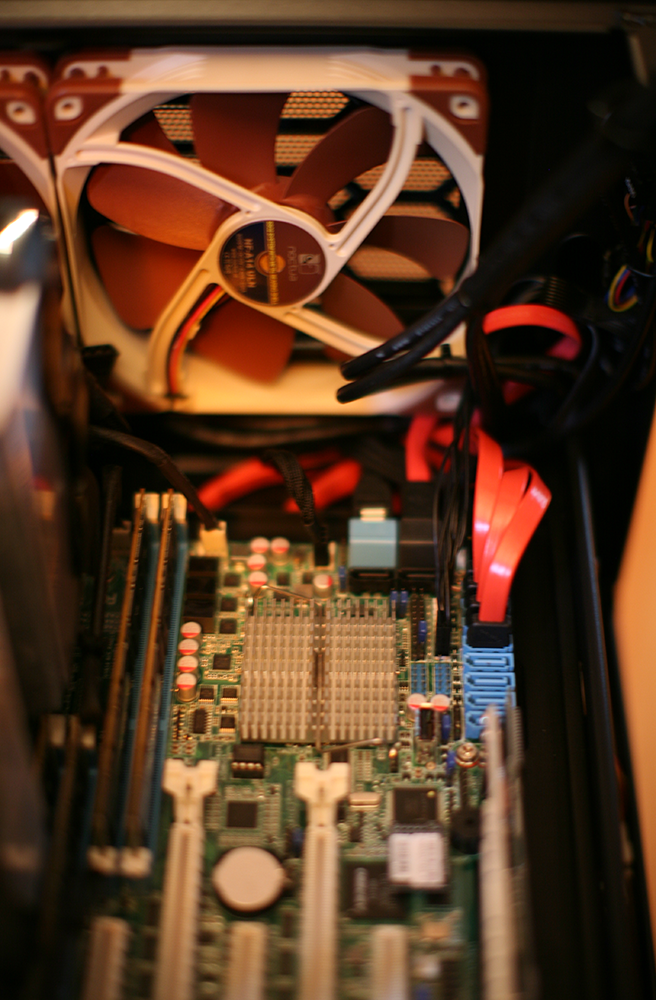

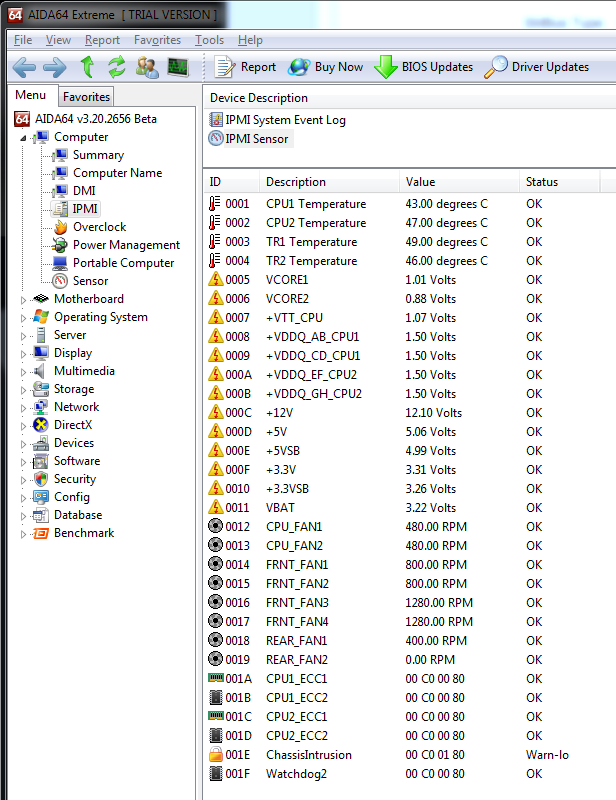

I also heard back from Noctua. The NH-U12DXi4 and NH-U12S coolers would work, but they're taller than my tower case allows. They confirmed that the slimmer NH-U9DXi4 will fit, with a caveat: "We've tested this cooler with both fans installed using 130w TDP single CPU LGA2011 systems and I'm able to state they're suitable for typical workloads, but you will have to expect increased CPU temperatures under continuous heavy CPU load. I would recommend you to fit both coolers blowing towards the top of the enclosure if you're using a tower type case and ensure good case ventilation." I'm a bit perplexed by what he means here: should I mount the coolers on the CPUs so that the fans point towards the PSU at the top of the case, or somehow retrofit the fans on the coolers to blow in that direction? It could be that they don't recommend having the CPU1 fan blow hot air into the CPU2 fan intake...not sure. The Arctics are also too tall, so I'm now considering other cooling options like the Thermaltake NiC F3 or F4, or perhaps the Supermicro SNK-P0050AP4 with a Noctua 200mm fan like you did with the Arctics...bummer.

Option 1: $53 for 5 direct-contact heat pipes at 126 x 96 x 105 mm

Cooler: Supermicro SNK-P0050AP4 (recommended for the X9DRL-EF)

Fan: Noctua NF-BP 92mm (1600 RPM, 37.8 CFM, 64 m³/h max airflow, 17.6 dB(A))

Option 2: $64 for 4 direct-contact heat pipes at 155 x 140 x 50 mm (1 mm wider than Arctic)

Cooler: Thermaltake NiC F4

Fan: Noctua NF-F12 PWM 120mm (1500 RPM, 55 CFM, 93 m³/h max airflow, 22.4 dB(A))
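
For anyone who wants to sanity-check the numbers in the two options, here is a rough Python sketch. It converts the quoted CFM figures to m³/h and works out the price per heat pipe. The function names and the `options` dictionary are made up for illustration, and it assumes the quoted price covers each option as listed; it says nothing about real-world cooling performance.

```python
# Rough comparison of the two cooler options using only the quoted figures.
# All names here are hypothetical helpers, not part of any vendor tool.

# 1 cubic foot = 0.0283168466 cubic metres; 60 minutes per hour.
CFM_TO_M3H = 0.0283168466 * 60  # roughly 1.699


def cfm_to_m3h(cfm: float) -> float:
    """Convert airflow from cubic feet per minute to cubic metres per hour."""
    return cfm * CFM_TO_M3H


def price_per_heat_pipe(price_usd: float, heat_pipes: int) -> float:
    """Quoted price divided by the number of heat pipes."""
    return price_usd / heat_pipes


# Figures copied from the post above.
options = {
    "Option 1": {"price_usd": 53, "heat_pipes": 5, "fan_cfm": 37.8, "noise_dba": 17.6},
    "Option 2": {"price_usd": 64, "heat_pipes": 4, "fan_cfm": 55.0, "noise_dba": 22.4},
}

for name, spec in options.items():
    airflow = cfm_to_m3h(spec["fan_cfm"])
    per_pipe = price_per_heat_pipe(spec["price_usd"], spec["heat_pipes"])
    print(
        f"{name}: {airflow:.1f} m^3/h, "
        f"${per_pipe:.2f} per heat pipe, {spec['noise_dba']} dB(A)"
    )
```

On these figures alone, Option 1 is cheaper per heat pipe and quieter, while Option 2 moves more air.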

I'm leaning toward Option 1 at the moment, but would appreciate your thoughts on it.

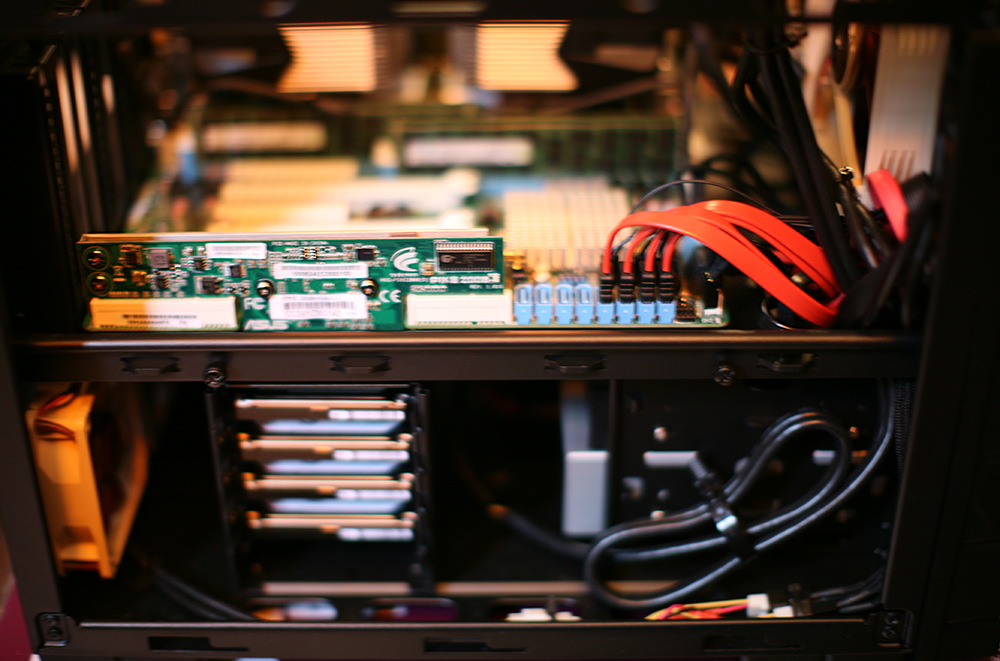

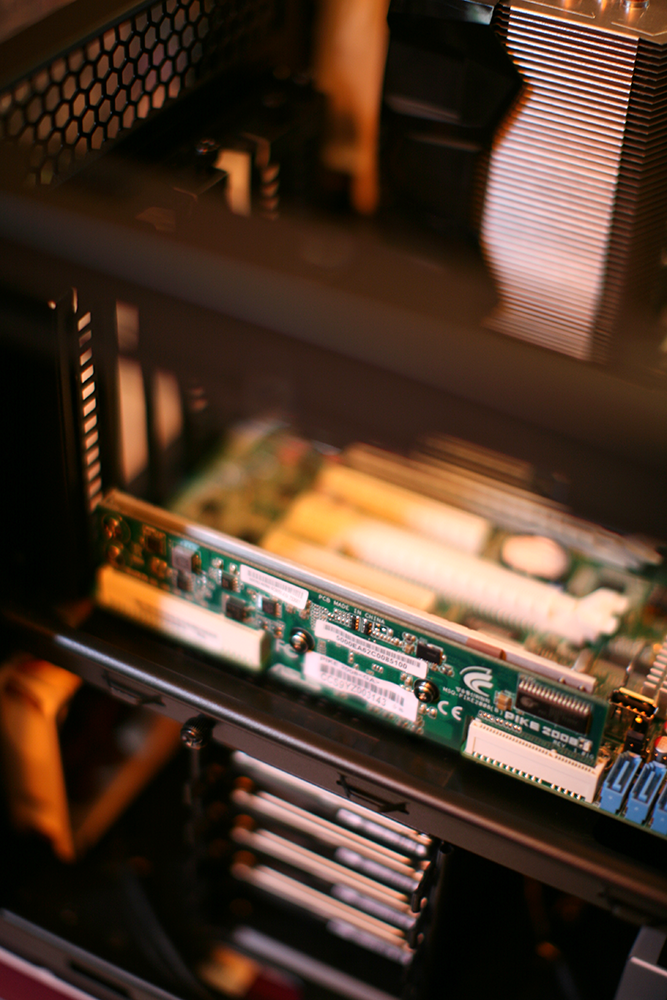

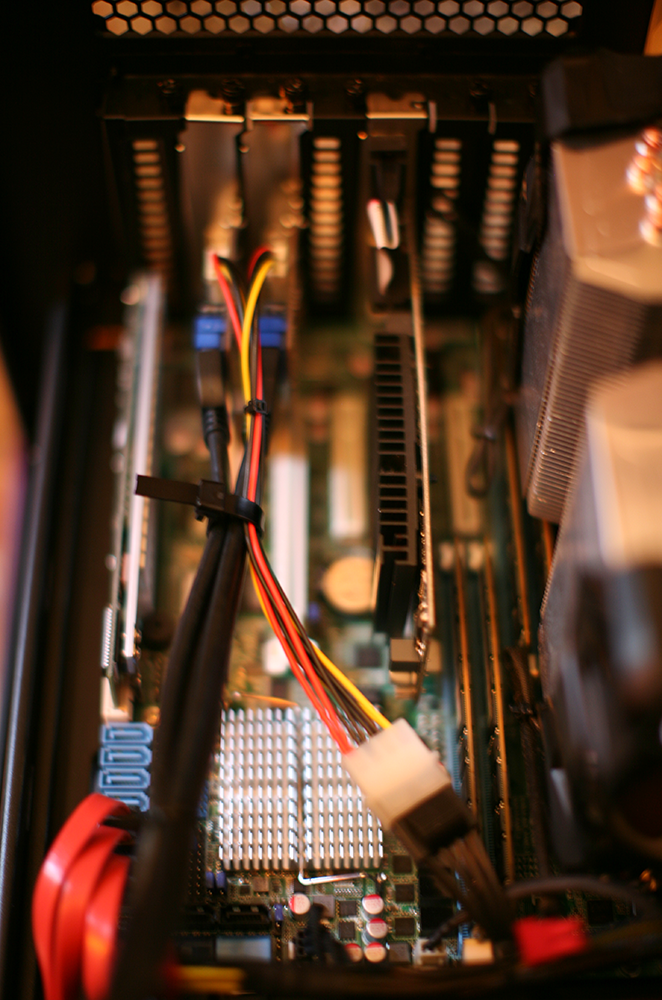

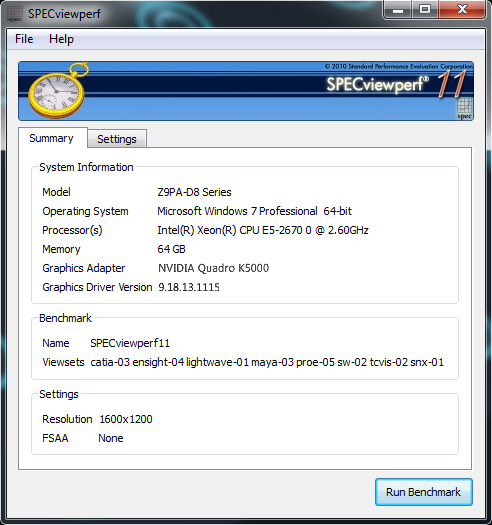

Components are starting to come in, and I expect to start building next weekend. I'll post results for current and prospective Z9PA-D8 owners...