drescherjm

[H]F Junkie

- Joined

- Nov 19, 2008

- Messages

- 14,941

BTW, some of the pictures of the used Dell servers at Amazon appear to be very deceptive. I mean they take a picture of 6 servers in a rack when you are purchasing a single server.

That's exactly what I use mine for. 144GB of RAM and a 3.2TB FusionIO drive (massive IOPS) for the DB.

It's basically a big SSD that plugs into an x8 PCIe slot, except you can't boot from it. Even though it's 7 years old, it's about as fast as a modern NVMe drive except with about twice the IOPS. I use it to hold the DB, as well as store ramdrive images (ramdrive is for the tempdb files and to hold the IIS drive for fast .NET execution).

What'd you use the fusion drive for? Like a DB cache?

Anyone who owns one, how much power do they actually use? How much $ per month would you say it costs to run one of these?

Curious what are most people using these for at home besides a storage server? You say you could run 4-5 vms.. what do people run on them exactly?

So if I wanted to set up one of these to be primarily used for storage (8-12TB total storage (RAID 5)), what all would you need to get one up and going?

These are definitely an edge use case. My usage is for a SQL database that's highly RAM and iops dependent, so it's a good fit.

Pros:

Cheap

Built like a tank, great reliability and redundancy (dual PSUs, 4 ethernet ports), enterprise level build quality

Ability to put in lots o' memory (I have 144GB in mine that I got for $100 on ebay)

CPU power is OK, not great, but good enough for most things.

Well documented and patched.

Cons:

Most are limited to SATA II

PERC cards are limited to 2TB drives and have proprietary cables - upgrading is expensive

Only PCIe x8 slots

Big, heavy, inefficient compared with more modern hardware.

RamonGTP: Did you do a write-up on how to mod the pci-e slot for video cards? If that was you, thank you! I did it and now have a GT730 running in mine. Nice for higher resolutions and digital output.

It's a little pricier than the Dell, but as far as performance, it's the HP twin of the Dell. Keep in mind both companies do things differently in terms of their BIOS, system layout, compatibility, and parts, so you might find you like one brand better than the other. I like them both as they're like tanks.

As someone who knows nothing about servers and is finally going to dive in once the right hardware is found, would this HP ProLiant be as good a value as the Dells?

If you're using it for storage, you have a ton of options--you can run FreeNAS on it bare metal or in a VM, or you could literally just install Win10, build one giant array on the RAID controller, and share it. The controllers typically have a battery-backed cache so you'll have 512MB-1GB+ of NVMe-level performance before it hits the drives, but even then SAS drives are fast and you can replace them with SSDs if you want.

Curious what are most people using these for at home besides a storage server? You say you could run 4-5 vms.. what do people run on them exactly?

So if I wanted to set up one of these to be primarily used for storage (8-12TB total storage (RAID 5)), what all would you need to get one up and going?
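For the RAID 5 question, a rough sizing sketch in Python may help. It assumes the target is 8 to 12 TB usable and that all drives are the same size, and it ignores filesystem overhead and the TB/TiB difference. The function names and the 2 TB and 4 TB drive sizes are illustrative assumptions, not anything from the thread.

```python
import math


def raid5_usable_tb(num_drives, drive_tb):
    """Usable capacity of a RAID 5 array: one drive's worth goes to parity."""
    if num_drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return (num_drives - 1) * drive_tb


def min_drives_for(target_tb, drive_tb):
    """Smallest RAID 5 drive count that reaches target_tb of usable space."""
    # Data drives needed, plus one drive's worth of parity, never fewer than 3.
    return max(3, math.ceil(target_tb / drive_tb) + 1)


# Hypothetical drive sizes, chosen only for illustration.
for target_tb in (8, 12):
    for drive_tb in (2, 4):
        n = min_drives_for(target_tb, drive_tb)
        usable = raid5_usable_tb(n, drive_tb)
        print(f"{target_tb} TB target, {drive_tb} TB drives: {n} drives, {usable} TB usable")
```

Smaller drives mean more bays filled for the same usable space, so it's worth checking the drive count against the number of bays in the chassis and the largest drive the RAID controller accepts.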

I had these questions too when starting out. So a 'hypervisor' is the 'os' that runs the virtual machines. A hypervisor can be installed 'bare metal' (directly on the hardware like any other OS), or can be run inside a host operating system like Windows (Microsoft's Hyper-V can do this). Some of the more popular ones are ESXi (VMware), Hyper-V (Microsoft), and there's even open source free ones like Proxmox.

So when they say run VMs they are referring to like a Plex server? (VM is virtual machine right?). So running that in its own virtual server/OS?

Reliability on these things is awesome--it's why you still see servers for sale that are well beyond their prime in terms of specs. Like my dual LGA771 HP DL380 Gen 5. It runs great and is super reliable, and the original MSRP of over $7000 makes you quickly realize why--they put the money into it for sure.

Pretty solid deals here, I am still running an R710 as a file server that chugs right along.

Yep, or install Proxmox and do the same. And push it to see how many VMs you can put on there. A lot of people keep Linux ISOs handy to spin up a VM of one whenever they want one. You can also run Windows inside VMs so you don't have to worry about updates breaking things. Snapshot the VM and just make a VM off the snapshot every time.

Install ESXi on it. It's easy and effective. Then make VMs with 2-4 cores and test on those VMs. Break em, build em, destroy em, do it all over again. Install an SSD for the ones that you are going to destroy often.

The good thing about these is that they are designed to be remote controlled and headless so you can pretty much put them anywhere. I have some in the attic and garage at my parents' house and in other places they can't even hear them. I've even heard of people putting them under the bed.

I'd love one of these old rack mount servers but the problem is the noise.

The 1U ones can be because their fans have to spin so much faster than 2U fans. But the 2U units when not loaded can be quite silent. Not desktop PC silent, but definitely not datacenter jet howling.

Even still, man these things are whiny.

What I really want is one of those tiny Dell tower servers that I can stuff an HBA card in and run FreeNAS. Maybe even one of those HP ProLiant microservers.

They must be in a bad environment then. I was talking to my wife yesterday over FaceTime while working on the R410 and the only time I couldn't hear her perfectly was when I initially booted it and the fans went 100% and that was for only 5 seconds.

Power usage is no picnic either. We have a bunch of the R series servers and I hate getting on calls with field techs, can never hear them.

If you get a Supermicro one you typically can modify certain things like the fans. These are more like the server analog to regular computers where you can pick the parts and make them how you want them.

Can I stuff Noctua fans in it?

Nice! How much RAM did you have and what processors?

Mine was installed into a Corsair Air 540 chassis,

View attachment 225051

The heat can be great in the winter.

That too and the heat.

But hey, if the goal is to get a small VM lab going to practice Xen or VMware, it's a great way to get into some hardware for it.

These are ridiculous for the purposes of a NAS. In fact, even the Dell T30 that was so popular a few years back is overkill. A Synology with 5 bays is like 600 bucks. After you get done putting a system together or buying the T30 and adding an HBA card to it, the Synology becomes cheaper. What a world.

So I have several of these and use them alongside desktops. The UPSs all have a power draw indicator so I can tell how much more power the servers draw than the desktops. And honestly it's not that much more--50-100W peak. When idling or low usage they will use more, but at peak they're actually about the same. And just think about it this way--servers generally come with 500-700W power supplies, and that's pretty much the standard with desktops today, so they're in the same ballpark except when idling as they're not made to idle as low.

Anyone who owns one, how much power do they actually use? How much $ per month would you say it costs to run one of these?

I love my 2950 startup noise.

Just listened to the sound of an R720 on YT. In one video he was comparing a 2950 to an R710 (not sure how much difference there is between a 710 and a 720), but the 2950 was WAY louder than the 710. The other video I watched was an R720 and overall I don't think it was too bad, nothing like the 2950 was.

Those things are tanks.

I thought you were talking about those old janky Cisco 2950 switches lol

Great summary! The software support issue is one of the reasons I plan to use Proxmox, as even the newest version will support my old Dell 2950s, and apparently I could even create a cluster with all the hardware I have.

Keep in mind VMware is part of the reason these servers are being phased out of production. VMware's HCL support is incredibly poor and they like to phase things out before the warranty even expires. x20 series Dells are not supported for ESXi 6.7, so the last version you can load is 6.5. I don't believe the x10 series support 6.5 at all, and 6.0 is going EOL in less than a month. That said, if you want to run Windows, there shouldn't be any issues there, nor should there be any issues using Linux. If you're having hypervisor compatibility issues I'd suggest either using Hyper-V on Windows or Proxmox for a Linux based hypervisor. Both of these can support just about anything you can come up with and you won't have to spend a bunch of time trying to side load your Intel NIC drivers. *Glares at VMware*

As for noise it's been so long since I've even heard a 2950 I wouldn't want to comment on it. Just in general any type of system that is a 2U will be slightly quieter and less whiny than a 1U. More modern systems have better power management, and try to slow the fans down more than older systems would. But most of these servers are still pulling like 200W from the wall if they are filled with disks, so it's $$$ to keep them on 24/7. In a datacenter no one really cares that much, but in your house you'll notice if there is a $20 a month jump in your power bill. With LED lighting now, one server probably consumes more power than all of the lights in your home do every month.
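As a rough check on the power figure above, here's a back-of-the-envelope sketch in Python. The 200W draw comes from the post; the $0.14 per kWh rate is an assumption (plug in your own), and the function name is made up for illustration.

```python
def monthly_cost_usd(watts, usd_per_kwh, hours_per_day=24.0, days=30.0):
    """Cost of running a constant electrical load for one month."""
    kwh = watts / 1000.0 * hours_per_day * days
    return kwh * usd_per_kwh


# 200 W around the clock at an assumed $0.14 per kWh.
cost = monthly_cost_usd(200, 0.14)
print(f"${cost:.2f} per month")
```

At that assumed rate, 200W running 24/7 works out to 144 kWh and about $20 a month, which lines up with the jump mentioned in the post. A higher or lower local rate scales the result directly.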

Pros:

Cheap to purchase

ECC memory

hot swap drive support / hardware RAID

lots of HDD slots

Dual PSU

Well built with quality components (For the most part)

Way more RAM slots

Out of band management (iDRAC, etc)

Cons:

loud

power hungry

incredibly long, might not fit into your rack

old / possible hardware issues

software issues with VMware

For the power costs alone it's certainly possible to just build a new desktop and you'll probably break even around the 3 year mark. If you don't need tons of disks or memory, it might be a better option. If you're buying an 8 year old server, you'll probably either spend half your time on ebay finding replacement disks or end up having to buy new ones anyway, so keep that in mind in the costs.
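The break-even idea can be sketched the same way. Everything numeric here is a hypothetical input, not data from the thread: the $360 extra upfront cost of a new desktop over a used server, the 100W saved, and the $0.14 per kWh rate are placeholders to swap for your own numbers.

```python
def break_even_months(extra_upfront_usd, watts_saved, usd_per_kwh,
                      hours_per_day=24.0, days_per_month=30.0):
    """Months until lower power draw pays back a higher purchase price."""
    if watts_saved <= 0 or usd_per_kwh <= 0:
        raise ValueError("need a positive power saving and a positive rate")
    monthly_savings = (watts_saved / 1000.0 * hours_per_day
                       * days_per_month * usd_per_kwh)
    return extra_upfront_usd / monthly_savings


# Hypothetical inputs, for illustration only.
months = break_even_months(extra_upfront_usd=360, watts_saved=100, usd_per_kwh=0.14)
print(f"break-even after about {months:.1f} months")
```

With these placeholder numbers the payback lands close to three years, but the result is very sensitive to the price gap and to how many hours a day the box actually runs.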

Yep, that's a turnkey that you can put in any case and be up and running.

I mean, if someone is trying to get into the home server lab game I have an X8DTI-LN4F that has two E5620s in it and some RAM that I'd sell pretty cheap.

It's a fine setup. I just swapped it out of the chassis for a Threadripper. I will probably post it up in fs/ft if no one wants it locally. My buddy's kid might want to play with it.

Yep, that's a turnkey that you can put in any case and be up and running.

People talk about the power usage of these as if they need 1.21 Gigawatts.

Yeah, after re-considering and reading all the feedback I too would pass on these. For what it's capable of, I don't think it's worth the cost in power really. Like you said, unless you needed something to run like a database or something that would require lots of RAM and storage, what the hell are you really going to do with it.

Amazon?!! Deceptive?!? Say it isn't so?!?

BTW, some of the pictures of the used Dell servers at Amazon appear to be very deceptive. I mean they take a picture of 6 servers in a rack when you are purchasing a single server.

Fixed it for you.

610/710's are getting to the point of being cheap....

Roast me

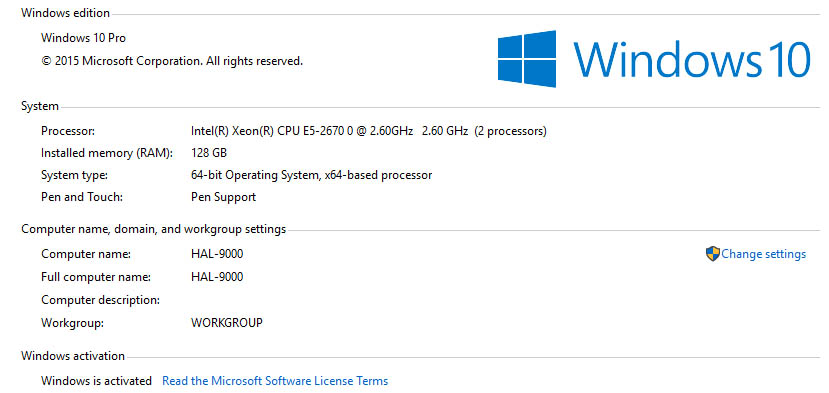

I had the older E5-2670 8-core CPUs and 128GB RAM,

Nice! How much RAM did you have and what processors?

I love my 2950 startup noise. It's like having a turbine engine start up in front of you.

But that's me.

The R710 isn't much quieter upon startup, but it is quieter running--probably because it's more powerful than the 2950 and doesn't have to work as hard for what I throw at it. I haven't been able to hear a 720 in person--too expensive.

Nice!

I had the older E5-2670 8-core CPUs and 128GB RAM,

View attachment 228288

Awesome.

I have my R720 all set up now and it's not loud at all. On startup it winds up pretty good, but during normal use with ESXi 6.7, a Windows Server 2019 VM and a few Linux VMs it's not loud at all. Still trying to get 10G Ethernet working on my 3705-E switch and X520 NIC.. can't seem to get a link.

Awesome.

Are you using SFP modules, a DAC cable or RJ45?