jschuricht

EricThompson guessed correctly. It's a Supermicro 5018A-AR12L.

Total Storage 137.4 TB

Max single system storage 72.6 TB in 1U

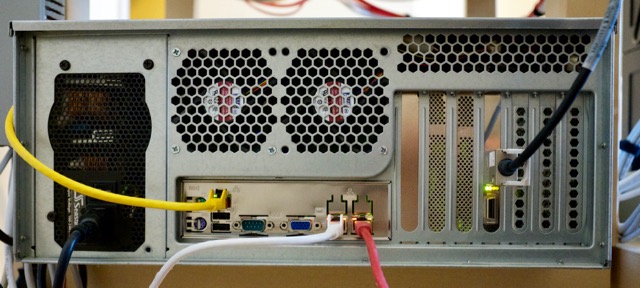

Supermicro 5018A-AR12L (1U)

RAW capacity 72.6 TB (HDD/SSD combined)

Atom C2750 (8x 2.4GHz cores)

32GB ECC RAM

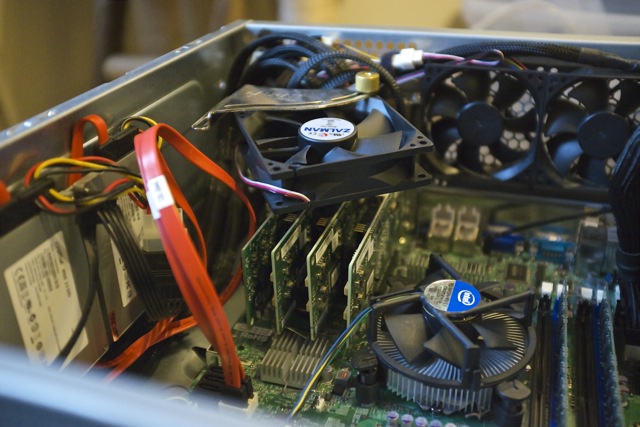

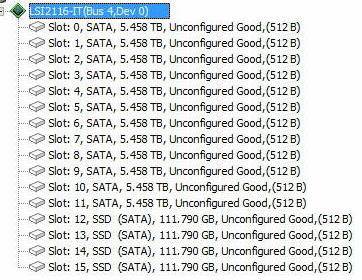

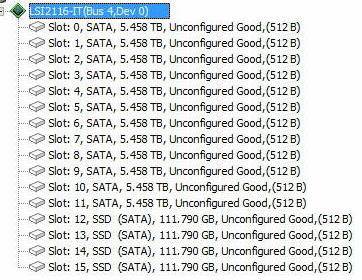

Onboard LSI 2116

12x 6TB Hitachi HDD

5x 120GB Intel 530 SSD (2x boot, 3x cache)

10Gb Myricom CX4 NIC

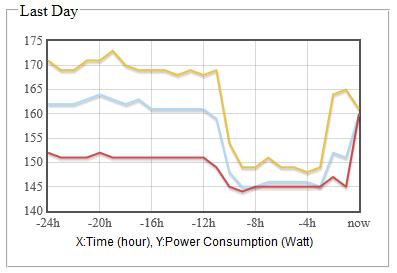

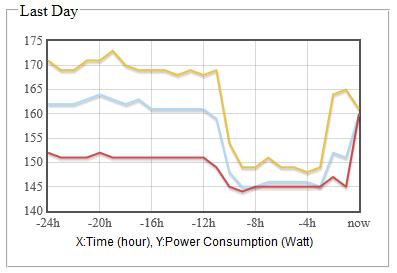

Windows Server 2012 R2 Storage Spaces with 100GB write cache

Supermicro intended the chassis to hold 2x 2.5" drives on a mount that wasn't listed when I ordered this server. I adapted it to hold 5x 2.5" 7mm drives with a few zip ties. I wouldn't ship it like that, but it holds fine between the workbench and rack.
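For anyone checking the 72.6 TB raw figure, here is a quick sketch of the arithmetic. It assumes decimal units (1 TB = 1000 GB) and counts every drive in the list above, boot and cache SSDs included; the names `drives_gb` and `raw_capacity_gb` are just made up for illustration.

```python
# Raw capacity of the 5018A-AR12L, in decimal units (1 TB = 1000 GB).
# Drive counts and sizes are taken from the spec list in the post.
drives_gb = [
    (12, 6000),  # 12x 6TB Hitachi HDD
    (5, 120),    # 5x 120GB Intel 530 SSD (boot + cache)
]


def raw_capacity_gb(drives):
    """Sum count * size over (count, size_gb) pairs."""
    return sum(count * size_gb for count, size_gb in drives)


total_gb = raw_capacity_gb(drives_gb)
print(f"{total_gb / 1000:.1f} TB raw")  # prints "72.6 TB raw"
```

This is raw capacity only. Usable space under Storage Spaces depends on the resiliency layout, which the post doesn't state.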

Old pic of the rest of the rack

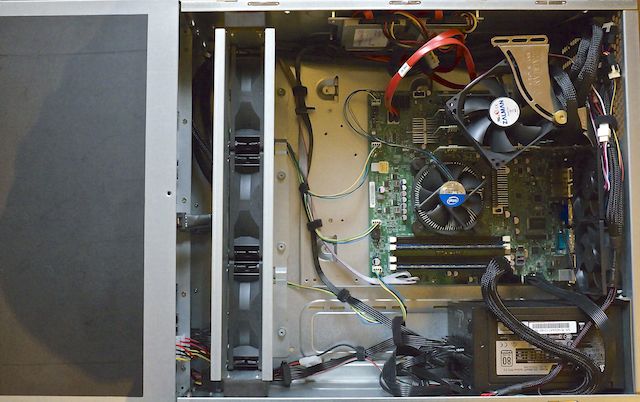

Case - Supermicro SC846E1-R900B

PSU - 900w redundant

Mobo - Supermicro X7DWN+

CPU - 2x Intel Xeon E5420 @ 3GHz

RAM - 56GB

RAID controller - Supermicro AOC-USAS-H4iR

26x 2TB Hitachi

8x 450GB 15k Hitachi

Case - Supermicro SC118G-1400B

PSU - 1400w single

Mobo - Supermicro X8DTG-D

CPU - 2x Intel Xeon E5620 @ 2.4GHz

RAM - 48GB

GPU - 1x Nvidia 560ti 2GB

2x 600GB WD Velociraptors

4x 1TB WD Velociraptors
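A rough tally of the drives itemized for all three systems, again assuming decimal units (1 TB = 1000 GB). The dictionary keys reuse the chassis model numbers from the post as labels; the structure and the names `systems` and `system_total_gb` are hypothetical. The itemized drives come to 133.4 TB, which is 4 TB under the stated 137.4 TB total, so presumably some drives are not itemized in these lists (that is a guess, not something the post says).

```python
# Per-system raw capacity from the itemized drive lists (1 TB = 1000 GB).
systems = {
    "5018A-AR12L": [(12, 6000), (5, 120)],    # 12x 6TB HDD, 5x 120GB SSD
    "SC846E1-R900B": [(26, 2000), (8, 450)],  # 26x 2TB, 8x 450GB 15k
    "SC118G-1400B": [(2, 600), (4, 1000)],    # 2x 600GB, 4x 1TB Velociraptor
}


def system_total_gb(drives):
    """Sum count * size over (count, size_gb) pairs."""
    return sum(count * size_gb for count, size_gb in drives)


per_system_gb = {name: system_total_gb(d) for name, d in systems.items()}
listed_total_gb = sum(per_system_gb.values())
stated_total_gb = 137_400  # "Total Storage 137.4 TB" from the post

for name, gb in per_system_gb.items():
    print(f"{name}: {gb / 1000:.1f} TB")
print(f"Itemized total: {listed_total_gb / 1000:.1f} TB")
print(f"Unaccounted: {(stated_total_gb - listed_total_gb) / 1000:.1f} TB")
```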
