erek

[H]F Junkie

- Joined: Dec 19, 2005
- Messages: 10,894

This is darn impressive. I wonder if it will take off. Will it get hardware acceleration and major application support?

"Fraunhofer HHI today with its partners announced the official H.266/VVC standard. The aim is with its improved compression to offer around 50% lower data requirements relative to H.265 while offering the same quality. H.266 should work out much better for 4K and 8K content than H.264 or H.265.

Fraunhofer won't be releasing H.266 encoding/decoding software until this autumn. It will be interesting to see meanwhile what open-source solutions materialize. Similarly, how H.266 ultimately stacks up against the royalty-free AV1.

More details on H.266 via Fraunhofer.de."

UnknownSouljer

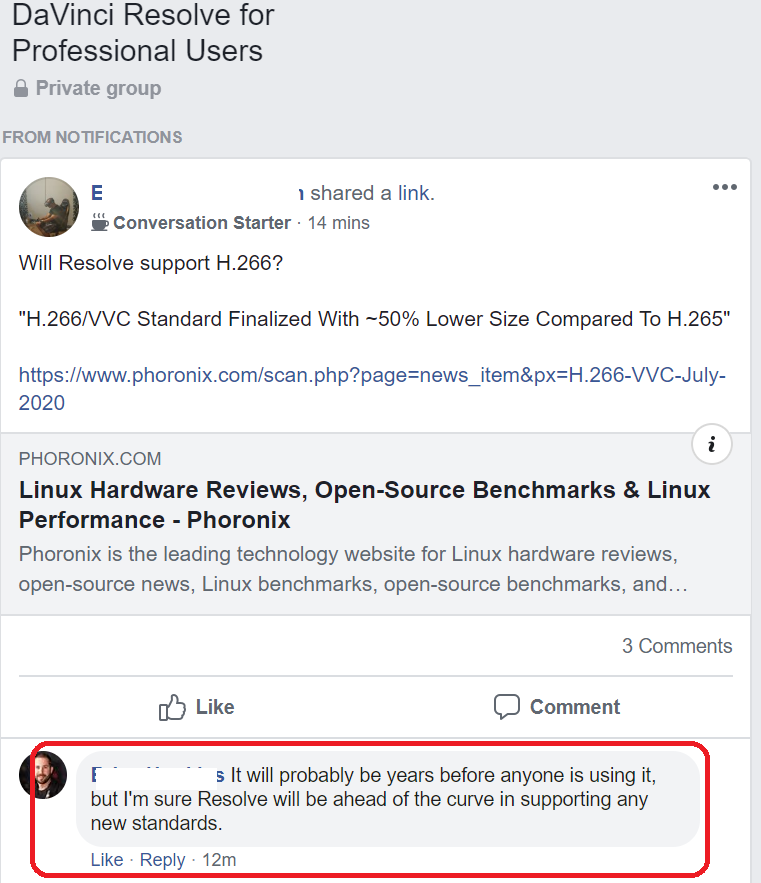

https://www.phoronix.com/scan.php?page=news_item&px=H.266-VVC-July-2020
