NamelessPFG

Gawd

- Joined: Oct 16, 2016
- Messages: 893

I was gonna say... doesn't limiting the color information kinda go against the whole HDR thing?

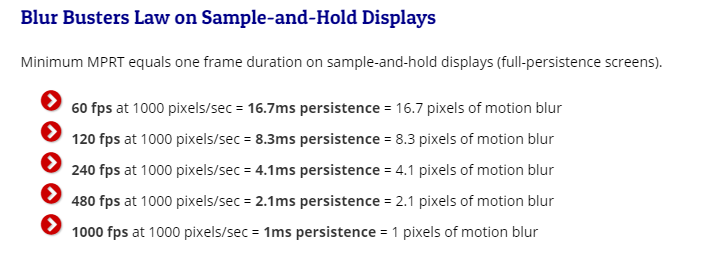

I thought half of what modern HDR displays needed was basically what Matrox was doing back in the '90s/early 2000s: using 10-bit color channels instead of just 8-bit (yeah, that's right, DisplayPort and HDMI are catching up to decades-old VGA!), since the expanded gamut would make banding more obvious at 8-bit color resolution. Needless to say, that's even more data to shunt through the video interface at high refresh rates, which is not a good thing when we're already hitting bandwidth limits.
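Here's a quick back-of-the-envelope sketch of both points. A 10-bit channel has 1024 levels versus 256 at 8-bit, and the raw data rate scales with bit depth. The following are my own assumptions, not from the post: a 3840x2160 panel at 144 Hz, active pixels only (blanking intervals and link encoding overhead ignored, so real requirements run higher), and "limiting the color information" meaning 4:2:2 chroma subsampling. The comparison figure is DisplayPort 1.4 HBR3 (4 lanes x 8.1 Gbit/s, minus 8b/10b encoding). The function names are hypothetical helpers, not from any library.

```python
# Rough uncompressed video data rates (hypothetical helpers, illustration only).
# Ignores blanking intervals and link encoding overhead, so real needs are higher.

# Average samples per pixel for each chroma subsampling format:
# 4:4:4 keeps full-resolution color (3 samples/pixel), 4:2:2 halves horizontal
# chroma resolution (1 + 0.5 + 0.5 = 2), 4:2:0 halves it both ways (1.5).
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}

# DisplayPort 1.4 HBR3 payload: 4 lanes x 8.1 Gbit/s, 8b/10b encoding -> 25.92 Gbit/s.
DP14_PAYLOAD_GBPS = 4 * 8.1 * 8 / 10


def levels_per_channel(bits: int) -> int:
    """Distinct code values one color channel of the given bit depth can hold."""
    return 2 ** bits


def data_rate_gbps(width: int, height: int, refresh_hz: float,
                   bits_per_channel: int, chroma: str = "4:4:4") -> float:
    """Active-pixel data rate in Gbit/s for the given mode."""
    bits_per_pixel = bits_per_channel * SAMPLES_PER_PIXEL[chroma]
    return width * height * refresh_hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    for bits in (8, 10):
        print(f"{bits}-bit: {levels_per_channel(bits)} levels per channel")
    for bits, chroma in ((8, "4:4:4"), (10, "4:4:4"), (10, "4:2:2")):
        rate = data_rate_gbps(3840, 2160, 144, bits, chroma)
        verdict = "fits" if rate <= DP14_PAYLOAD_GBPS else "exceeds"
        print(f"4K 144 Hz {bits}-bit {chroma}: {rate:.2f} Gbit/s "
              f"({verdict} DP 1.4's {DP14_PAYLOAD_GBPS:.2f})")
```

Under these assumptions, 10-bit 4:4:4 at 4K 144 Hz works out to about 35.83 Gbit/s, and even 8-bit 4:4:4 (about 28.67) overshoots DP 1.4. 10-bit 4:2:2 drops to about 23.89 and squeezes under, which would explain why chroma subsampling shows up as the compromise.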
