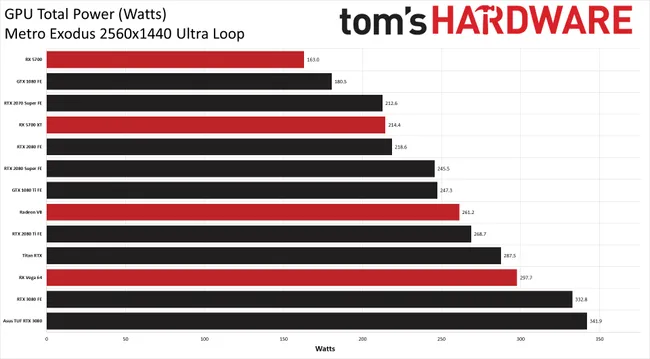

Seems like desperation somewhat from Nvidia. How would Ampere perform at 250W instead of 320W or 350W? Maybe a tester will do this, comparing Turing to Ampere watt for watt.

Looking at it again, there's one thing not in this list: the manufacturing process and the power being used. I'm surprised no one has really touched on this, but these cards use more power than ANY Nvidia card before them. They use more power than ANY Titan card. Actually, these cards use more power than ANY video card of the last few generations. So the AIBs literally can't reuse components like they probably have in the past. The top cards of the 80 series have pretty much stayed around the 250W mark. These cards are 100W more.

GeForce RTX 3080 sees increasing reports of crashes in games

- Thread starter kac77

- Start date

I'm guessing at 250W it would be an underwhelming jump over Turing. Something like Pascal to Turing (or maybe worse?). But with current pricing I would still have been in the market for it, that's the funny thing (I skipped Turing entirely). The performance jump is bigger than I expected, but it's like buying a super-overclocked Turing card, with all those watts and seemingly barely stable clocks.

Gamers Nexus kind of runs past this without talking about the elephant in the room:

"The 2080 Ti pulled 264W stock and 330W overclocked, for comparison, with the 2080 FE stock at 235W. With that 330W OC number, the 3080 was often still 10-15% ahead of the 2080 Ti OC while being a few watts lower, proving its efficiency improvements."

Wait, hold up, 10-15%? After a node shrink? Basically, at the same power you have memory differences, architectural changes (a doubled core count), and process advancement all crammed into that 15%.
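To put rough numbers on the quoted figures, here is a minimal sketch of the perf-per-watt arithmetic. It treats the overclocked 2080 Ti at 330W as the baseline and reads "a few watts lower" as 325W for the 3080, which is an assumption for illustration, not a measured value. The function name is made up.

```python
def perf_per_watt_gain(rel_perf: float, watts: float, base_watts: float) -> float:
    """Relative perf-per-watt improvement over a baseline whose performance is 1.0."""
    base_eff = 1.0 / base_watts   # baseline performance per watt
    new_eff = rel_perf / watts    # new card's performance per watt
    return new_eff / base_eff - 1.0


BASE_WATTS = 330.0    # 2080 Ti overclocked, from the quoted review
AMPERE_WATTS = 325.0  # assumption: "a few watts lower" read as about 5W less

for rel_perf in (1.10, 1.15):
    gain = perf_per_watt_gain(rel_perf, AMPERE_WATTS, BASE_WATTS)
    print(f"{rel_perf - 1:.0%} faster -> {gain:.1%} better perf per watt")
```

Under those assumptions the efficiency gain lands only a point or two above the raw 10-15% performance gap, which is the point being made: at matched power, the whole generational improvement is in that range.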


Nvidia maxed out the clocks on GA102, so there's not much headroom. To see the architectural improvements, I think watt for watt would be a good starting point, except that different loads will probably cause some differences in power usage once configured. If Nvidia put a bigger cooler on a 2080 Ti, maxed out the power, and added faster RAM, the 3080 would be, like you wrote, 10-15% faster. In that respect Ampere just doesn't seem as strong. Curious whether the expectation of the 3070 equaling or beating a 2080 Ti holds up, or is that another lie?

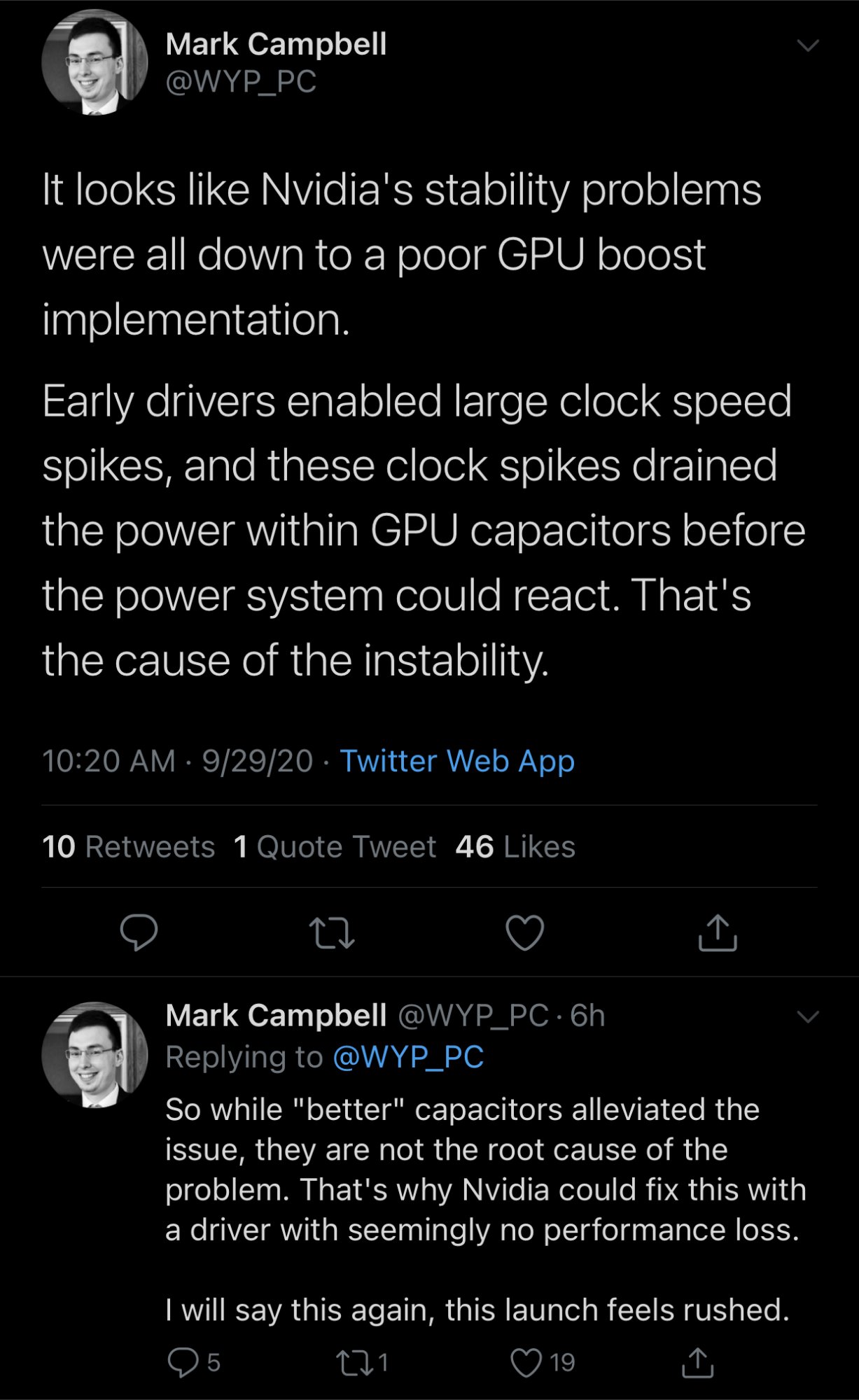

Some new stability findings, timestamped in video link below. He found instability in Windows, but none whatsoever in Linux, even with higher boost clocks (2100 MHz). He also says that the press drivers weren't crashing, but the mainstream ones were.

Edit - I forgot this was a 3080 thread, but seems applicable nonetheless.


Nobu

[H]F Junkie

- Joined: Jun 7, 2007
- Messages: 10,017

Sometimes Linux can recover from a GPU hardware fault without crashing, so you'd need to check the logs to be sure it was stable. Good to know, though.
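For anyone wanting to do that log check, a minimal sketch follows. It assumes the proprietary NVIDIA Linux driver, which reports GPU faults as "NVRM: Xid" messages in the kernel log. The function name and the sample log lines are made up for illustration.

```python
import re
from typing import List, Tuple

# Assumption: proprietary NVIDIA driver, which logs GPU faults as "NVRM: Xid" kernel messages.
XID_PATTERN = re.compile(r"NVRM: Xid \(([^)]*)\): (\d+)")


def find_xid_events(log_text: str) -> List[Tuple[str, int]]:
    """Return (device, xid_code) pairs for every Xid message found in the log text."""
    events = []
    for line in log_text.splitlines():
        match = XID_PATTERN.search(line)
        if match:
            events.append((match.group(1), int(match.group(2))))
    return events


# Made-up sample lines for illustration, not taken from a real machine.
sample = """\
[ 1234.567890] NVRM: Xid (PCI:0000:01:00): 79, GPU has fallen off the bus.
[ 1240.000000] usb 1-1: new high-speed USB device number 5 using xhci_hcd
"""
print(find_xid_events(sample))
```

In practice you would feed it the output of `dmesg` or `journalctl -k` after a gaming session. An empty result is a better sign of stability than the absence of a visible crash.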

TaintedSquirrel

[H]F Junkie

- Joined: Aug 5, 2013
- Messages: 12,688

Fixed by today's driver. Lock the thread up, put it in storage, we're done here.

MavericK

Zero Cool

- Joined: Sep 2, 2004
- Messages: 31,885

I wonder what the "fix" looks like in practice. Was it actually a driver issue, or is it doing some sort of clock manipulation?

So far I've seen one report, from a known Nvidia leaker on Reddit, that his 3080's max boost clock was reduced by approximately 30 MHz while the card's power consumption also increased by 10W. He also indicated that despite the lower clock speed his card managed to score higher in Time Spy. If I can find the post I'll link it.

EDIT: He actually tweeted about it: https://twitter.com/kopite7kimi/status/1310582120121679872

Keep in mind this is ONE source and is not indicative of anything. Going to need to see several sets of data before reaching any conclusion.

Correct, Linux is far more resilient.

Which means the 3080 is essentially a 350W card. This is above any Titan in recent memory. I would have to say that the 3080/3090, as I said before, are the true Titans of the lineup. There is some value in that, but it's no free lunch considering the power draw.

Increased power might have allowed higher sustained boost clocks.

kirbyrj

Fully [H]

- Joined: Feb 1, 2005
- Messages: 30,693

Lol...like a driver is going to magically solve the cap issue. At best you're going to have reduced performance and/or even higher power draw.

A 30 MHz decrease in clocks is not much of a performance hit. Cards will still boost way above advertised boost clocks. The caps used are irrelevant. If you buy a cheap model, don't expect to get the same clocks as higher-end models, which is also irrelevant since all the 3080/3090s have a hard time being stable above 2000 MHz. It is not like you can OC to get better performance like you used to. Yeah, this release was rushed, and all these bugs could have been worked out if they had given AIBs time to properly test their shit. Nvidia should know better, but they probably don't care.
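For scale, a quick sketch of how big a 30 MHz drop is against the boost clocks mentioned in this thread (2000 and 2100 MHz). The function name is made up, and it only computes the clock change; it says nothing about how frame rates actually scale with clock.

```python
def clock_drop_percent(drop_mhz: float, clock_mhz: float) -> float:
    """Size of a clock reduction as a percentage of the starting clock."""
    return 100.0 * drop_mhz / clock_mhz


# Boost clocks taken from posts in this thread.
for clock in (2000, 2100):
    print(f"30 MHz off {clock} MHz is {clock_drop_percent(30, clock):.2f}%")
```

Either way the reduction is about 1.5% of the clock or less.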


pek

prairie dog

- Joined: Nov 7, 2005
- Messages: 2,991

Not sure if the link to Jayztwocents has been posted, but here it is:

Bottom line, get the AIB boards that are over-engineered if you want a large OC. Now, trying to fix it in drivers by backing off the clock to where it is stable is a no-cost alternative, but not something I would accept. But that's me being me.

Deleted whining member 223597

Guest

Yeah I wouldn’t accept a 1% decrease in clock speed and same performance either, fuck that shit! /s

Heh, I'm not seeing any complaints from actual Ampere owners just from the peanut gallery.

pek

prairie dog

- Joined: Nov 7, 2005
- Messages: 2,991

Geez, I didn't know some of these mfrs were still in business, or in the GPU business, anyway:

https://www.techpowerup.com/272676/...090-crash-to-desktop-issues-capacitor-choices

I wonder if AMD will step on their own crank after this. Guess we'll find out soon, the reviewers have scented blood in the water, they'll be disassembling boards down to the component level now.

So resolved?

I’d say mostly resolved, but I wouldn’t close the book on it just yet.

DejaWiz

Fully [H]

- Joined: Apr 15, 2005
- Messages: 21,824

I've got a bit of a predicament...

Originally, I was shooting for either an Asus TUF or an FE, but was willing to jump on just about any 3080 that was MSRP'd up to $750 before tax/shipping.

Back on the 21st, the MSI Ventus OC popped into stock at Best Buy, and I was able to complete the transaction, but then it disappeared from my order history.

Well, I'll be... I guess the Best Buy back office folks fixed the problem, so it looks like I'm getting the MSI Ventus OC, after all.

My first new GPU in five years. Now to keep fingers crossed that it doesn't get poached while on its way.

I'm still a bit leery over the whole POSCAP/MLCC thing despite the new drivers being hailed as the fix.

Guess I'll find out soon.

You're going to pair a 3080 with a 3770K? And at 1080p?

DejaWiz

Fully [H]

- Joined: Apr 15, 2005
- Messages: 21,824

Hell yes I am. Gonna be bottleneck bliss.

...until I gather the rest of the parts for my upcoming Zen3 build.

Have a 1440p 144Hz IPS sitting new in box since last October.

Just got in my 2x16GB DDR4-3600 set a few days ago.

Have had a Corsair 450D new in box for pushing two years, since my original plan was to go with a 2700X build, but that got put on hold.

Still going back and forth between getting an X570 mobo soon, or just waiting for X670 when Zen3 drops.

Is there even going to be an X670? I haven't seen a single leak or rumor in the last few months from any of the usual reliable sources.

DejaWiz

Fully [H]

- Joined: Apr 15, 2005
- Messages: 21,824

The rumor is that ASMedia will be manufacturing the X670 chipset. Still no bona fide confirmation, to my knowledge.

There isn’t a lot of talk about it because it’s likely going to be a minimal upgrade over the X570. It will likely just have reduced power consumption compared to the X570 and passive cooling across the board. I’m not really sure what else they could add at this time.

Brackle

Old Timer

- Joined: Jun 19, 2003
- Messages: 8,566

Looks like it was driver issues. No issues in Linux, but had constant issues in Windows until the new driver came out....

DejaWiz

Fully [H]

- Joined: Apr 15, 2005
- Messages: 21,824

XBarbarian

[H]ard|Gawd

- Joined: Dec 29, 2006
- Messages: 1,370

Grats! I'm still stuck checking various sites, no luck yet.

I know it is a non-issue and all, but I wonder if some lawyers out there will try to start a class action lawsuit claiming reduced performance from this fix lol. It wouldn't surprise me, even though they wouldn't have a case, since the cards still perform above advertised speed and reports say there was no loss in performance.

l88bastard

2[H]4U

- Joined: Oct 25, 2009
- Messages: 3,710

The 3080 Ventus OC is a solid card, I had one for a week and can tell you first hand that it will solve any erective problems. Joe Montana throwing a footyball through a tire swing aint got nothing on the Vent!

heard you wanted more power, so I made your card less powerful to make your card more powerful.

EquaLiZr

Supreme [H]ardness

- Joined: May 8, 2000
- Messages: 5,841

Another vote for the Ventus 3X card. I've had mine for a week now with ZERO issues. She runs at 2 GHz no problem undervolted.
