cybereality

[H]F Junkie

- Joined: Mar 22, 2008
- Messages: 8,789

> Wow! Almost 100% yield with NV link on the 2080 TI. That is a steep price to pay but that is also a great result.

Those results are with DX12 mGPU, which is the best case scenario, but impressive nonetheless.

> Better price for the money (if ya already own a 1080ti) would be 1080ti sli.

Yes, in well supported titles a 1080 Ti SLI rig could outpace a single 2080 Ti, but not in many games.

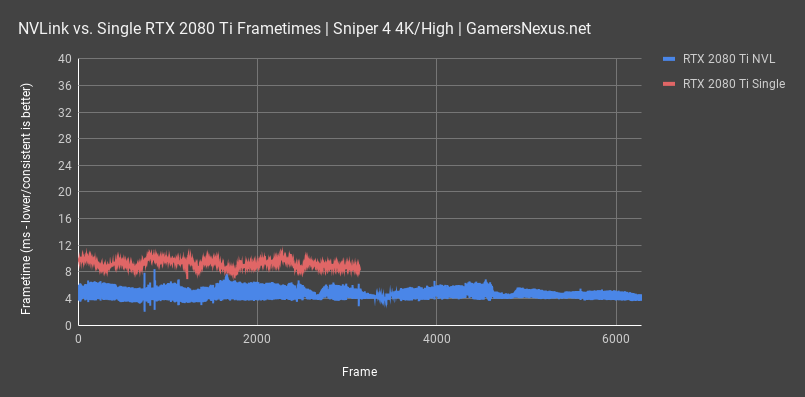

> Does NVLink still introduce a full frame of input lag like SLI did? Going from 2x970 to 1x980 Ti made a clear difference in responsiveness.

I don't know about that, but frametimes look inconsistent so far. In GamersNexus' review, Sniper Elite 4 looks pretty bad.

Will BFV use SLI?

Ah, 4K/120 is finally possible for well supported mGPU games and it only costs a cool $2500 for the GPUs and another $1500-$2000 for a capable monitor. So it's only about $4-5k in just upgrades to an existing decent gaming PC to turn it into a 4K/120 machine...

I'll wait a few more years when the pricing is more realistic.

> Enjoy 4:2:2. “But it doesn’t make a difference in games!!”
>
> Yes it actually does.

DisplayPort 1.4 has enough bandwidth for full RGB at 4K 120 Hz. It's only limited with HDR.
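For anyone who wants to sanity-check the DisplayPort 1.4 point above, here is a rough back-of-the-envelope sketch in Python. It assumes a 4-lane HBR3 link (8.1 Gbit/s per lane with 8b/10b encoding, so 25.92 Gbit/s of payload) and counts active pixels only. Blanking overhead and DSC are ignored, so real timings need a few percent more than these numbers; the mode names and the `fits_dp14` helper are just made up for illustration.

```python
# Rough DisplayPort 1.4 bandwidth check (active pixels only, no blanking, no DSC).
HBR3_LANE_GBPS = 8.1          # raw link rate per lane
LANES = 4
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b line coding
DP14_PAYLOAD_GBPS = HBR3_LANE_GBPS * LANES * ENCODING_EFFICIENCY  # about 25.92


def active_data_rate_gbps(width, height, refresh_hz, bits_per_pixel):
    """Data rate of the visible pixels alone, in Gbit/s."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def fits_dp14(width, height, refresh_hz, bits_per_pixel):
    """True if the active pixel data fits in the assumed HBR3 payload."""
    rate = active_data_rate_gbps(width, height, refresh_hz, bits_per_pixel)
    return rate <= DP14_PAYLOAD_GBPS


# Average bits per pixel for each (illustrative) mode name.
MODES = {
    "RGB 8-bit (SDR)": 24,
    "RGB 10-bit (HDR)": 30,
    "YCbCr 4:2:2 10-bit (HDR)": 20,
}

if __name__ == "__main__":
    for name, bpp in MODES.items():
        rate = active_data_rate_gbps(3840, 2160, 120, bpp)
        verdict = "fits" if fits_dp14(3840, 2160, 120, bpp) else "does not fit"
        print(f"4K 120 Hz {name}: {rate:.2f} Gbit/s, {verdict}")
```

Under those assumptions, 8-bit RGB at 4K 120 Hz squeezes in, 10-bit RGB does not, and dropping to 4:2:2 is what gets 10-bit under the limit.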

AA doesn't really matter. I find myself just using FXAA these days since SMAA is inconsistent depending on the game for some reason, and don't get me started on TAA. But AO off? Then what is the fucking point?

Eh glad they list them. I'll gladly sacrifice AO or just about anything to get the fps to a more consistent ~144 fps. I personally don't like TAA much either and might not turn it on regardless of performance. Though in SP games that's not a big concern of mine (v. high fps) and I lean more on gsync than in MP games.

TAA or FXAA wouldn't impact the FPS much, but disabling the AO is just stupid.

"hey, look at this amazing 4k monitor! It has 8 million pixels to clearly see all the areas where the missing AO would make the game look better!"

Again that's the problem, you're always going to have to make cuts somewhere in most games. Now if I get a 2080 Ti I might have to make very few if any cuts @ 1440p. I guess the conclusion we might draw from Tomb Raider is that you won't be playing that at 4K 120 fps unless you want to spend a ton of cash.

As a 4K gamer, I don't see the point in completely removing features that add detail just to get high FPS. Some features, like shadows and AA, can be SLIGHTLY reduced without much noticeable effect, but I just don't get the idea of having such a high resolution to render N64 levels of image quality...

> Again that's the problem, you're always going to have to make cuts somewhere in most games.

Not if the cards were fast enough.

¯\_(ツ)_/¯

> Yeah, new name for SLI. The main difference is that it has 50x the transfer bandwidth of SLI.

Nice.

Do the game developers support this tech? Does it actually work?

I don't know about that, but frametimes look inconsistent so far. In GamersNexus' review, Sniper Elite 4 looks pretty bad:

View attachment 108303

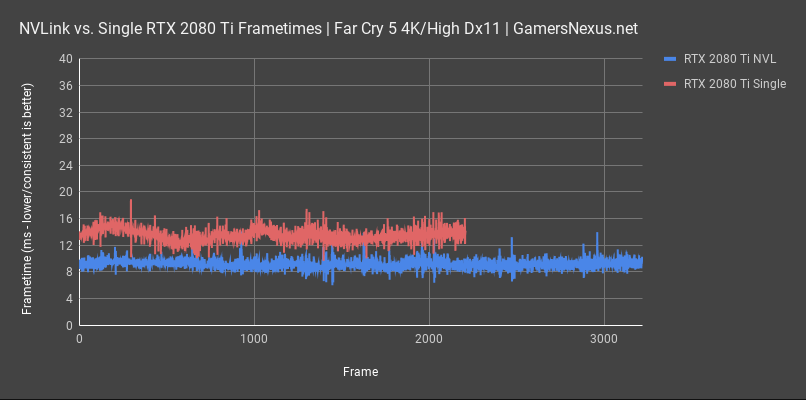

But Far Cry 5 looks pretty good:

View attachment 108304
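To put a number on "inconsistent" frametimes, here is a minimal sketch, assuming you have a list of per-frame times in milliseconds from a capture tool. The two sample runs are hypothetical numbers made up for illustration, not data from the GamersNexus review, and `frametime_summary` is just an illustrative helper name.

```python
import statistics


def frametime_summary(frametimes_ms):
    """Return average FPS, 1% low FPS, and frametime spread for one capture."""
    ordered = sorted(frametimes_ms)
    avg_ms = statistics.fmean(ordered)
    # Average the slowest 1% of frames (at least one frame).
    worst_count = max(1, len(ordered) // 100)
    worst_avg_ms = statistics.fmean(ordered[-worst_count:])
    return {
        "avg_fps": 1000.0 / avg_ms,
        "low_1pct_fps": 1000.0 / worst_avg_ms,
        "stdev_ms": statistics.pstdev(ordered),
    }


# Hypothetical captures: same average frametime, very different consistency.
smooth_run = [10.0] * 100
spiky_run = [8.0] * 90 + [28.0] * 10

if __name__ == "__main__":
    for label, run in (("smooth", smooth_run), ("spiky", spiky_run)):
        print(label, frametime_summary(run))
```

Both made-up runs average the same FPS, but the spiky one has a much worse 1% low and a larger spread, which is the kind of thing an average FPS bar chart hides.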

> At 4k do you even need AA?

Yes

> Again that's the problem, you're always going to have to make cuts somewhere in most games. Now if I get a 2080 ti I might have to make very few if any cuts @ 1440p. I guess the conclusion we might draw from tomb raider is that you won't be playing that at 4k 120 fps unless you want to spend a ton of cash.

I would never turn AO off. Doing so would be an immersion killer. I'd turn the quality down to basic SSAO to save frames, but I'd never turn it all the way off. SSAA is a nice benefit to have if there are frames to spare, but it's not going to make or break the experience.

> At 4k do you even need AA?

No matter the resolution, you at least need a post process AA method, otherwise you're going to get shimmering on all of those wonderful specular effects made in the pixel pipeline. SMAA is usually a happy medium between barebones FXAA and aggressive TAA, but like I said it seems the algorithm for it isn't consistent between developers.
