PontiacGTX is talking out of his ass. That we understand.

PontiacGTX is kinda correct, but it wasn't a case of optimizations being done for both vendors. Developers can spend the time to optimize for all hardware:

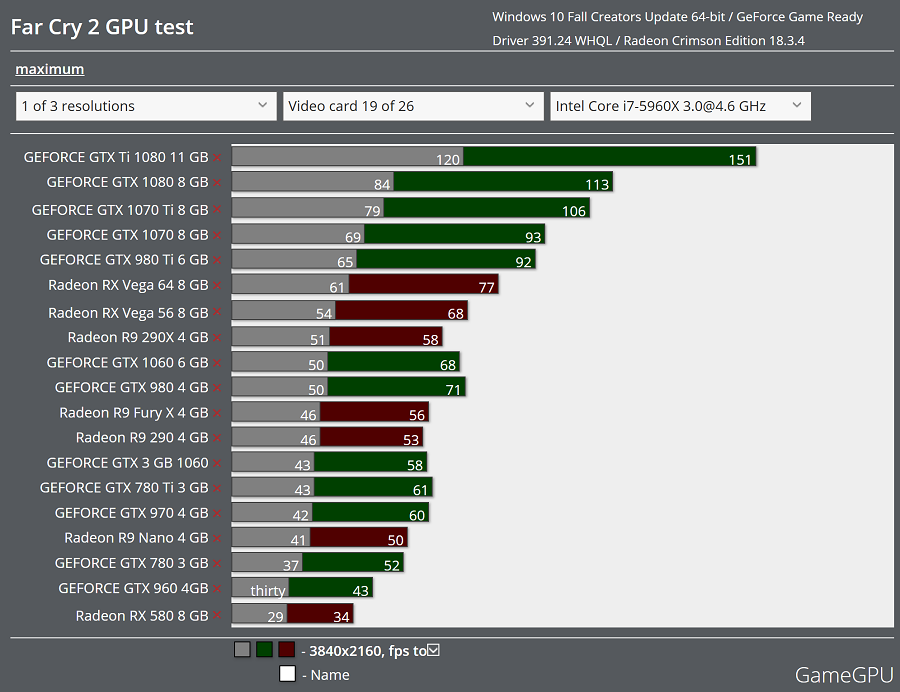

Now, they also had optimization routines for Xbox and PS, so it would be easier for them to port those over using shader intrinsics. If we look at the performance of the RX 580, which is close to the same chip as in the Xbox and PS4, its standing relative to the competition's cards looks better than Vega's. That is what shader intrinsics are about: same hardware, different platform, and the same optimizations port over.

With Vega, those shader intrinsics will not come over well.

If Anarchist4000 were still around, we would have a nice flashback to discussions about this and how he wanted to say shader intrinsics are the end of nV, since consoles will force optimization paths. Of course, I stated quite the opposite: it will hurt AMD in the long run, because it really only works for hardware of a similar level and generation. Once that is different, it all falls apart. This is a good example of that.

As far as tessellation goes, they can't push polycounts on consoles, so game assets and tessellation levels have to be made for AMD hardware. That will automatically favor AMD PC hardware, or in this case, as PontiacGTX stated, it "doesn't hurt" AMD hardware.
