Just wondering what makes the most sense to set up. I used FreeNAS a while back with NFS, but haven't touched it in a while. Here are the specs of the SAN.

2x 10 Gb NICs (LACP)

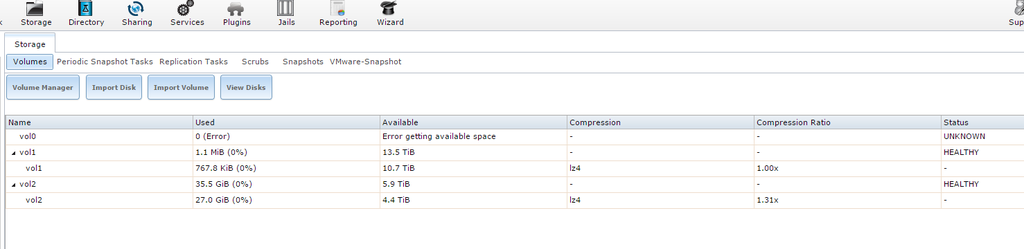

38x 2 TB SATA Drives

16x 1 TB SATA Drives

12x 600 GB SAS Drives

I don't currently have a use for the SAS drives, but they were already in the SAN so I created a volume with them. The 16x 1 TB drives may end up being iSCSI mounted in Windows for use with Backup Exec, but I am undecided. The primary volume is the 38x 2 TB drives, which I want mounted to several ESXi hosts. It will be used for Veeam backups, Oracle RMAN backups, and Zerto replication.

Right now all other storage on the ESXi hosts is mounted using iSCSI, so I was thinking about going that route unless there is a good reason to use NFS.
