Furious_Styles

Supreme [H]ardness

- Joined: Jan 16, 2013
- Messages: 4,517

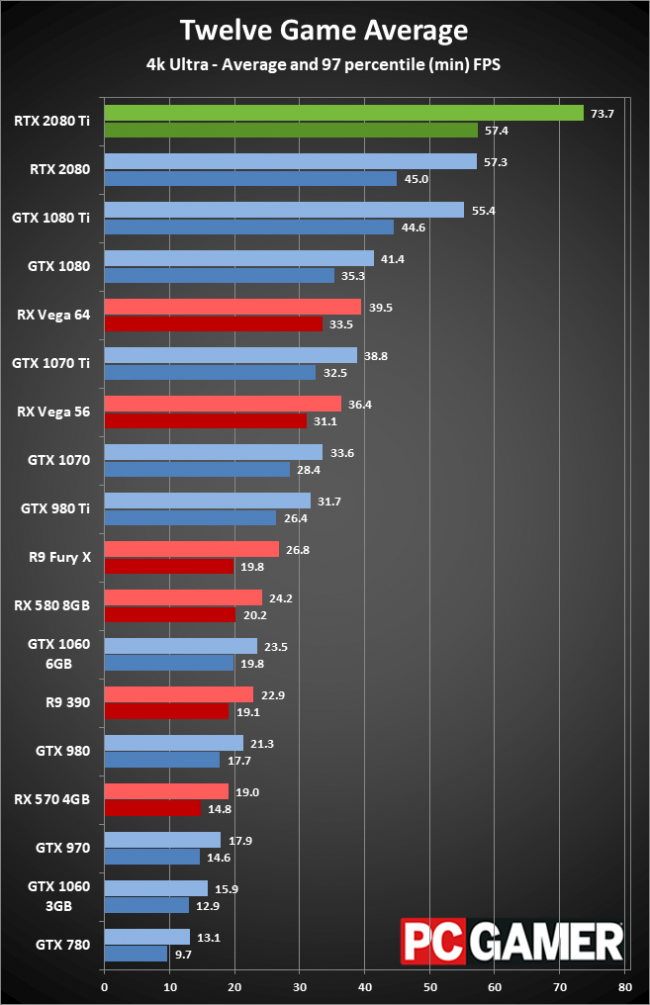

> Are you more comfortable with the location of the goal posts now that you have moved them somewhere new? The OP does not mention gaming. Your "definitive" answer talks about a tiny niche within FPS gaming which itself is a niche within the submarket of gaming. For your 0.001% niche, the 1080 Ti isn't worth it either because improved image fidelity is counterproductive. For everybody else, the 2080 Ti is a massive improvement.

You're right, I should have specified that this is not a work-related upgrade. In all honesty, that won't matter much though; I appreciate as much feedback as people will give!
