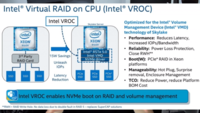

der8auer had a video up this morning that showed him running eight NVMe drives in RAID on a Threadripper motherboard. The video has since been made private, so NO SOUP FOR YOU! However, we did sneak a couple of screenshots (thanks cageymaru). AMD has stated that NVMe RAID support is on the way for Threadripper, and this would seem to back that up, but we do not see a new chipset driver on AMD's website. Below is a screen grab of a benchmark run and the hardware used in all its glory, as well as some UEFI documentation.

Check out the pics.

For his testing, he is using ASUS HYPER cards, each of which supports four NVMe drives.
