MrGuvernment

Fully [H]

- Joined: Aug 3, 2004
- Messages: 21,796

https://www.servethehome.com/e1-and-e3-edsff-to-take-over-from-m-2-and-2-5-in-ssds-kioxia/

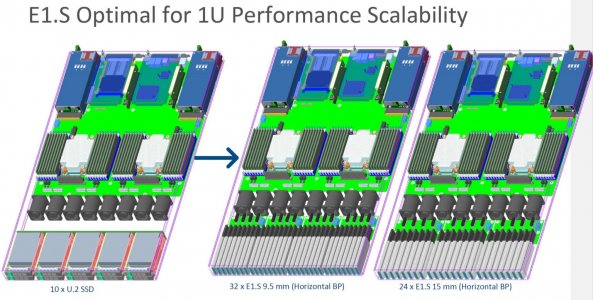

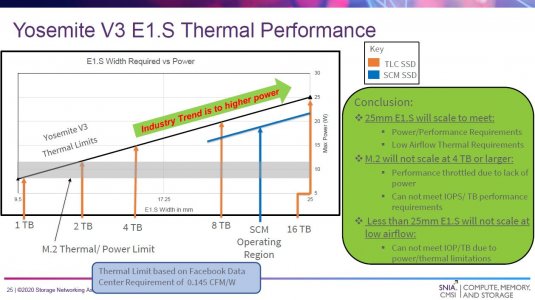

In the not-too-distant future (2022) we are going to see a rapid transition away from two beloved SSD form factors in servers. Both the M.2 and 2.5″ SSD form factors have been around for many years. As we transition into the PCIe Gen5 SSD era, EDSFF is going to be what many of STH’s community will want. Instead of M.2 and 2.5″ we are going to have E1.S, E1.L, E3.S, and E3.L, along with a mm designation that means something different than it does with M.2 SSDs. If that sounds confusing, you are in luck. At STH, we managed to grab some drives (thanks to Kioxia for the help here) to show you exactly how the world of storage and some PCIe memory/accelerators will work.
