Disable HDCP to make your computer slightly more responsive

- Thread starter bigbluefe

- Start date

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004
- Messages: 6,552

There is zero evidence that HDCP introduces any input lag, or reduces performance.

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004
- Messages: 6,552

> You can run latencymon before and after and see that it reduces latency. What there's no evidence for is your bullcrap statement.

You’re free to do that and show the results.

Silentbob343

[H]ard|Gawd

- Joined: Aug 2, 2004
- Messages: 1,944

I recently installed latmon to troubleshoot a PCIe riser cable problem.

Perhaps I'll give it a try.


NattyKathy

[H]ard|Gawd

- Joined: Jan 20, 2019
- Messages: 1,483

does disabling HDCP still prevent playing back certain types of media or was that just a DVD / Blu-ray thing? I haven't even thought about HDCP in awhile... it would be both hilarious and incredibly frustrating if that has been contributing to Nvidia's infamous DPC latency issues this whole time.

But this is the first I've heard of HDCP being a culprit, and I'd like to see some data from folks here to corroborate the YT vid.


chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,594

> does disabling HDCP still prevent playing back certain types of media or was that just a DVD / Blu-ray thing? I haven't even thought about HDCP in awhile... it would be both hilarious and incredibly frustrating if that has been contributing to Nvidia's infamous DPC latency issues this whole time.
>
> But this is the first I've heard of HDCP being a culprit, and I'd like to see some data from folks here to corroborate the YT vid.

All the various streaming service apps won't work without HDCP. Disney+, Amazon Prime Video, etc.

You also have to disable HDCP to be able to use a capture card. Games don't require HDCP. But if it's on, the capture card won't be able to grab the video signal.

NattyKathy

[H]ard|Gawd

- Joined: Jan 20, 2019
- Messages: 1,483

> All the various streaming service apps won't work without HDCP. Disney+, Amazon Prime Video, etc.
>
> You also have to disable HDCP to be able to use a capture card. Games don't require HDCP. But if it's on, the capture card won't be able to grab the video signal.

Yeah, I have noticed that HDCP is automatically disabled on my outputs that are connected to capture cards. Didn't know about the streaming thing but that makes sense.

Silentbob343

[H]ard|Gawd

- Joined: Aug 2, 2004
- Messages: 1,944

> You’re free to do that and show the results.

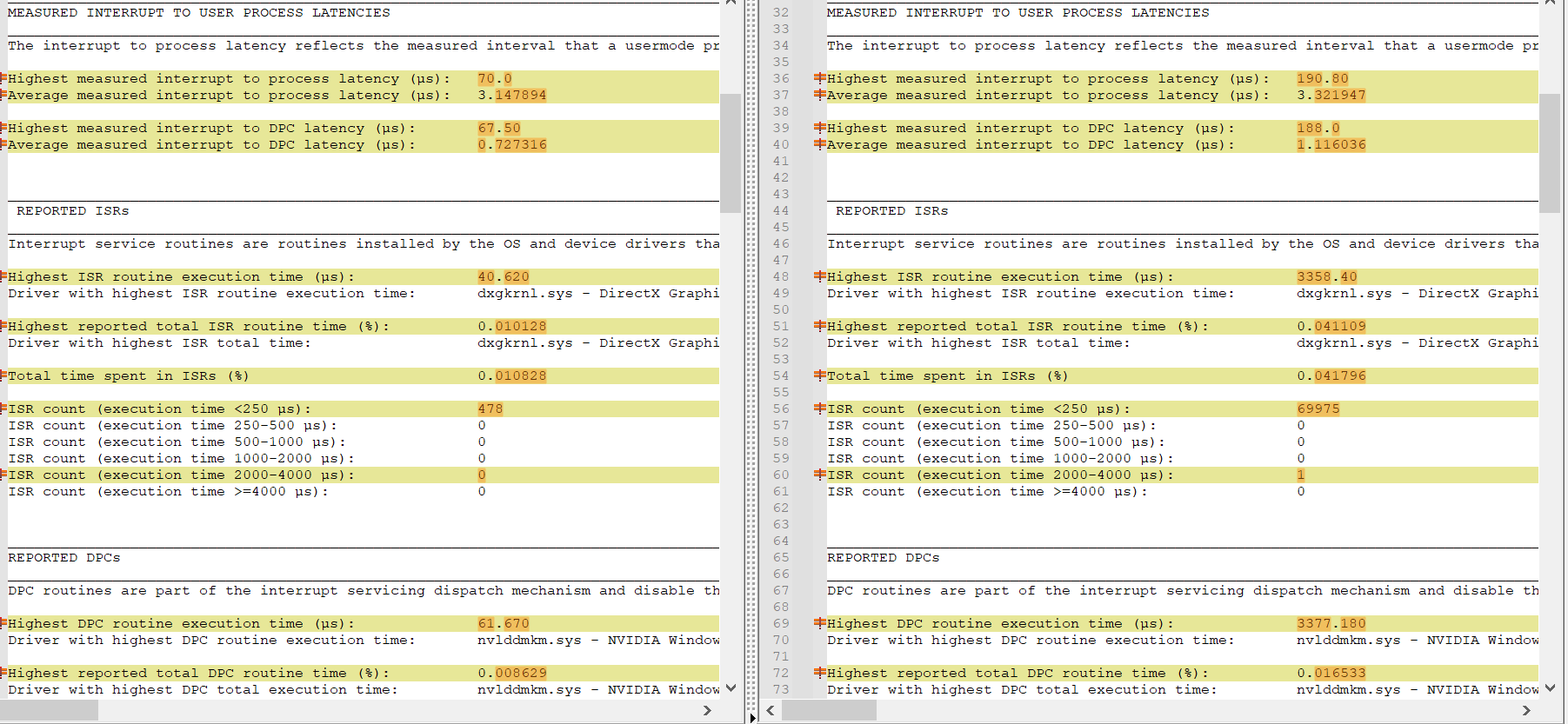

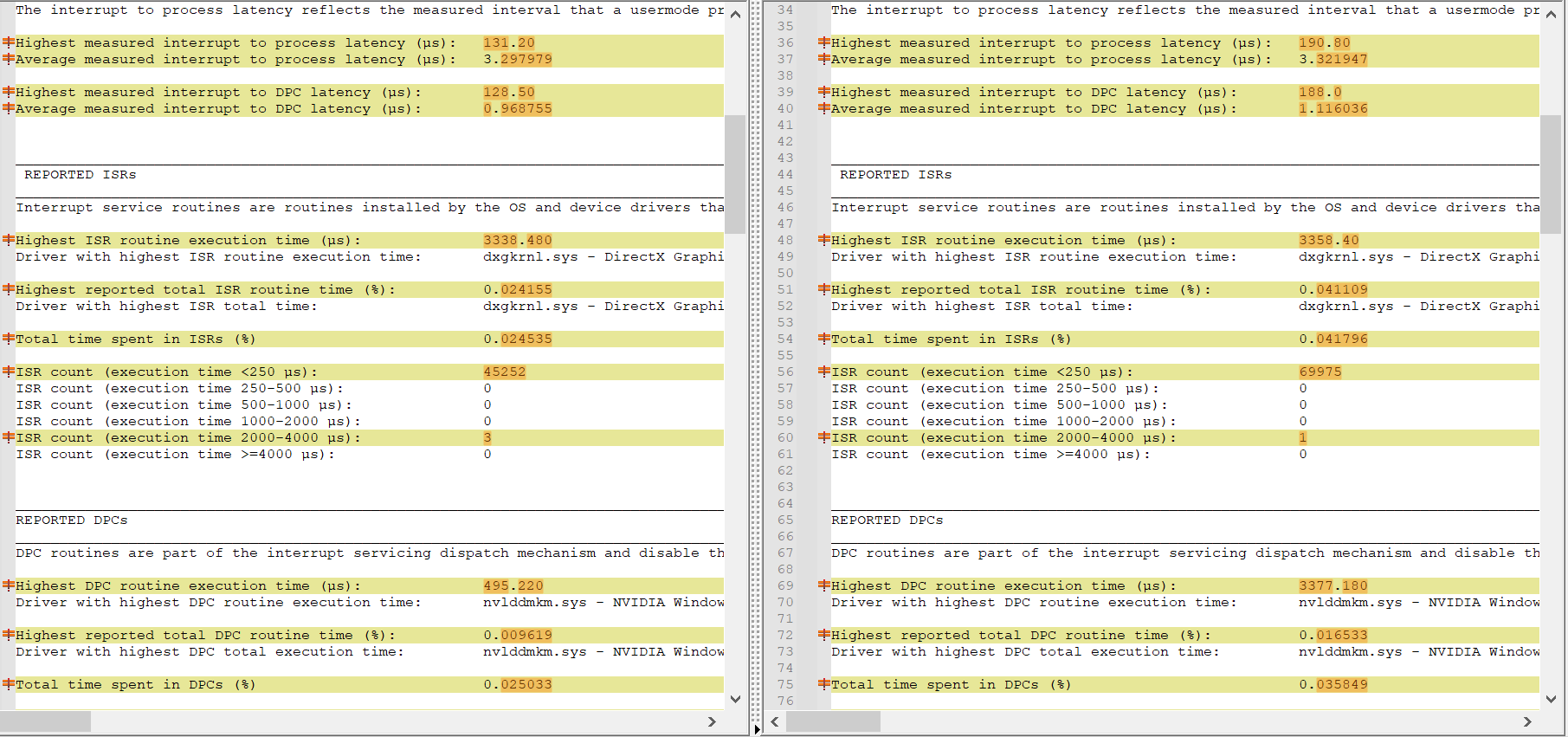

> Wow... someone's Big Mad.
>
> You're going to need to come at us with some data before I can bring myself to care exactly how erect you get at changing a value in the registry.

Here is my testing. It is very basic, started latmon and ran furmark three times. Do with these results what you will, feel free to do more in depth testing.

- Stock, no reg entry

- Reg entry enabled, set to 1

- Reg entry disabled, set to 0

On the left is no reg entry and on the right is with entry disabled, set to 0.

You can see with either no reg entry or the reg entry disabled, set to 0, the latency appears to be higher compared to reg entry enabled, set to 1. This was a highly unscientific test, please feel free to knock yourselves out with your own testing, I've already wasted enough time on it.


chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,594

AMD has a setting in their driver control panel, to disable HDCP.

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004
- Messages: 6,552

Even if you measure the delta between measured highs on latency and not the averages, that still isn't something anyone is going to be able to perceive. Keep in mind 1 millisecond is 1000 microseconds. So yes, you've demonstrated there may be a difference in that test, but we aren't even talking something that is even 1ms in difference. No human being is going to notice that.

If you've got some other test that demonstrates why this might be useful, please do so. At this point, i'm not convinced it's worth worrying about.
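For anyone wanting to put numbers on the "delta between measured highs" point, here is a minimal Python sketch. It assumes you have exported latency samples in microseconds from two runs. The function names and the sample values are made up for illustration and are not measurements from this thread.

```python
from statistics import mean

US_PER_MS = 1000  # 1 millisecond = 1000 microseconds


def summarize(samples_us):
    """Return (average, peak) of a list of latency samples in microseconds."""
    return mean(samples_us), max(samples_us)


def peak_delta_ms(run_a_us, run_b_us):
    """Difference between the peak latencies of two runs, in milliseconds."""
    _, peak_a = summarize(run_a_us)
    _, peak_b = summarize(run_b_us)
    return (peak_a - peak_b) / US_PER_MS


if __name__ == "__main__":
    # Placeholder values only, NOT measurements from this thread.
    run_a = [110, 140, 620]
    run_b = [100, 130, 410]
    print(f"peak delta: {peak_delta_ms(run_a, run_b):.3f} ms")
```

Comparing peaks rather than averages keeps single spikes visible, and dividing by 1000 puts the result in the same unit people use for frame times.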


> Here is my testing. It is very basic, started latmon and ran furmark three times. Do with these results what you will, feel free to do more in depth testing.
>
> [...]
>
> You can see with either no reg entry or the reg entry disabled, set to 0, the latency is much higher compared to reg entry enabled, set to 1.

Looks like a whole lot of nothing to me. Like, that reads as a single 3ms spike on process instantiation, and then business as usual. Which is what I'd expect -- OS verifies the status of the HDCP hardware, which does its thing basically for free because it's a dedicated hardware IP block.

In any case this micro-benchmarking of interrupts isn't even relevant. Show motion-to-photon variance and we'll have something to work with. Show stuttering on frame-time graphs and >1% framerates. Absent that, it's just a whole lot of "oh noes, the OS is doing something in the background, and I don't understand it!"
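As a rough illustration of the frame-time metrics asked for above, this sketch turns a list of frame times into an average FPS and a "1% low" FPS. The definition used here (FPS over the slowest 1% of frames) is one common convention and an assumption on my part, since tools differ. The function name is hypothetical.

```python
def fps_summary(frame_times_ms, worst_fraction=0.01):
    """Return (average FPS, 'low' FPS over the slowest fraction of frames)."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    ordered = sorted(frame_times_ms, reverse=True)  # slowest frames first
    # Always keep at least one frame in the "worst" bucket.
    n_worst = max(1, int(len(ordered) * worst_fraction))
    worst = ordered[:n_worst]
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    low_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, low_fps
```

If a setting really causes stutter, the low figure should move noticeably between runs even when the average barely changes.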

cjcox

2[H]4U

- Joined: Jun 7, 2004
- Messages: 2,947

> All the various streaming service apps won't work without HDCP. Disney+, Amazon Prime Video, etc.
>
> You also have to disable HDCP to be able to use a capture card. Games don't require HDCP. But if it's on, the capture card won't be able to grab the video signal.

Depends on "how" it's disabled. I have no problems capturing "whatever".

chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,594

> Depends on "how" it's disabled. I have no problems capturing "whatever".

The point is, streaming apps won't start, if they detect that HDCP isn't being respected. And capture cards can't capture a signal where HDCP is being respected.

> Even if you measure the delta between measured highs on latency and not the averages, that still isn't something anyone is going to be able to perceive. Keep in mind 1 millisecond is 1000 microseconds. So yes, you've demonstrated there may be a difference in that test, but we aren't even talking something that is even 1ms in difference. No human being is going to notice that.
>
> If you've got some other test that demonstrates why this might be useful, please do so. At this point, i'm not convinced it's worth worrying about.

Does it really need to be stated that latency is a CUMULATIVE phenomenon? 1ms here, 1ms there. It all adds up until it's higher than your refresh rate. At 144hz, you have to be under 8ms TOTAL for next frame response. It's even worse at 240hz. Just stop.

Objectively proven wrong but such an ego case psychopath that you can't just admit it. Laughable.

Hi I'm mchart. I LIKE latency. Please. Give me more daddy.

> The point is, streaming apps won't start, if they detect that HDCP isn't being respected. And capture cards can't capture a signal where HDCP is being respected.

People here actually pay for streaming services?

Mchart

Supreme [H]ardness

- Joined: Aug 7, 2004
- Messages: 6,552

> Does it really need to be stated that latency is a CUMULATIVE phenomenon? 1ms here, 1ms there. It all adds up until it's higher than your refresh rate. At 144hz, you have to be under 8ms TOTAL for next frame response. It's even worse at 240hz. Just stop.
>
> Objectively proven wrong but such an ego case psychopath that you can't just admit it. Laughable.
>
> Hi I'm mchart. I LIKE latency. Please. Give me more daddy.

You haven't demonstrated a cumulative latency phenomenon, nor shown us the delta between those measures with HDCP on/off.

> You haven't demonstrated a cumulative latency phenomenon, nor shown us the delta between those measures with HDCP on/off.

If you have a 360hz display, the entire frame has to be processed in 2.8ms, and you're pretending that 1ms isn't a big deal. 1ms of extra latency is catastrophic, and it's only going to get worse as refresh rates rise. End game is 1000hz so you can finally eliminate motion blur/persistence, which is 1ms for the whole frame, meaning that this adds as much latency as the entire frame should take.
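The frame budgets being thrown around come from dividing 1000 ms by the refresh rate. A small sketch, with hypothetical helper names, for checking how much of a frame a given delay would occupy:

```python
def frame_budget_ms(refresh_hz):
    """Time available per frame, in milliseconds, at a given refresh rate."""
    return 1000.0 / refresh_hz


def share_of_budget(extra_latency_ms, refresh_hz):
    """Fraction of one frame's budget taken up by an extra delay."""
    return extra_latency_ms / frame_budget_ms(refresh_hz)


if __name__ == "__main__":
    for hz in (144, 240, 360, 1000):
        budget = frame_budget_ms(hz)
        share = share_of_budget(1.0, hz)
        print(f"{hz:>4} Hz: {budget:.2f} ms per frame, 1 ms is {share:.0%} of it")
```

This only says how large a delay is relative to one frame. It does not say whether HDCP actually causes such a delay, which is the part still in dispute.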

> If you have a 360hz display, the entire frame has to be processed in 2.8ms, and you're pretending that 1ms isn't a big deal. 1ms of extra latency is catastrophic, and it's only going to get worse as refresh rates rise. End game is 1000hz so you can finally eliminate motion blur/persistence, which is 1ms for the whole frame, meaning that this adds as much latency as the entire frame should take.

latency is a big deal. But, I don't see how HDCP adds latency, with the exception of connection latency, which is annoying, but doesn't count for anything in games or whatever, unless you're switching monitors a lot in the middle of a deathmatch. Beyond a handful of gate delays, I don't see how you would implement a stream cipher on an up to 48 Gbps link and add significant latency, you'd need a rather speedy dual ported buffer chip, and what would it get you? xor isn't more efficient in bulk. Probably the data stream always goes through the xor anyway, if you're not running HDCP, set the cipher input to zeros; that way you don't need to have a data path switch.
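To illustrate the "set the cipher input to zeros" idea: XORing with an all-zero keystream leaves the data untouched, so the same per-byte path can serve both the protected and unprotected case. This is only a toy sketch. The keystream generator below is a made-up stand-in and has nothing to do with the actual HDCP cipher or how any GPU implements it.

```python
from itertools import cycle


def xor_stream(data: bytes, keystream) -> bytes:
    """XOR each data byte with the next keystream byte, one byte at a time."""
    return bytes(d ^ k for d, k in zip(data, keystream))


def zero_keystream():
    """Endless zeros: XOR with this is a pass-through."""
    return cycle([0])


def toy_keystream(seed: int):
    """Toy byte generator standing in for a real cipher (NOT the HDCP cipher)."""
    state = seed & 0xFF
    while True:
        state = (state * 5 + 1) & 0xFF
        yield state


if __name__ == "__main__":
    pixels = bytes(range(16))
    assert xor_stream(pixels, zero_keystream()) == pixels  # encryption "off"
    scrambled = xor_stream(pixels, toy_keystream(7))        # encryption "on"
    assert xor_stream(scrambled, toy_keystream(7)) == pixels
```

Each output byte depends only on the current input byte and the current keystream byte, which is why nothing has to be buffered to apply it.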

> latency is a big deal. But, I don't see how HDCP adds latency, with the exception of connection latency, which is annoying, but doesn't count for anything in games or whatever, unless you're switching monitors a lot in the middle of a deathmatch. Beyond a handful of gate delays, I don't see how you would implement a stream cipher on an up to 48 Gbps link and add significant latency, you'd need a rather speedy dual ported buffer chip, and what would it get you? xor isn't more efficient in bulk. Probably the data stream always goes through the xor anyway, if you're not running HDCP, set the cipher input to zeros; that way you don't need to have a data path switch.

It's cute that you assume that something like HDCP would be implemented in a sensical manner.

cjcox

2[H]4U

- Joined: Jun 7, 2004
- Messages: 2,947

> The point is, streaming apps won't start, if they detect that HDCP isn't being respected. And capture cards can't capture a signal where HDCP is being respected.

A quick trip to wal*mart can fix all of that.... just saying...
