I've only seen those in extreme HPC cases, but since those exist I'm sure they could tailor ones for other platforms.

Dell Quad M.2 PCIe card.

- Thread starter JustLong

- Start date

Yeah they use them on a lot of high end mobos too; so you can do 4-way CF/SLI on a lga 115x platform that has only 16 native CPU lanes. 16 lanes from cpu, four x8 to video cards.

Well yea lol, but it still gets fed through the DMI link. Either way, I guess the PLX chip was much more dynamic than I thought. I'd be willing to go for a consumer board with a PLX and U.2.

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

Has anyone actually studied and tested the latency those add? I would love to see an empirical comparison with PLX and without, and with and without load.

I wouldn't trust PLX until someone showed there isn't a hidden downside.

Depends, I mean you could theoretically build a board that had the PLX chip hooked to the native 16 CPU lanes and then spat out 32 lanes for SSDs.

There is definitely a small amount of latency. I haven't ever seen any really good tests that truly quantify the latency; however, in the case of using a PLX chip for video cards, it tends to add only like a 1-2% hit in performance vs running the card on native lanes. There are certainly scenarios where it would be a benefit, but not all.

It's not a big deal honestly.

Depends, I mean you could theoretically build a board that had the PLX chip hooked to the native 16 CPU lanes and then spat out 32 lanes for SSD's.

For graphics that would make no sense but for IO it would make for an interesting product.
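To put rough numbers on the 16-lanes-in, 32-lanes-out idea, here is a minimal sketch. It assumes PCIe 3.0 (8 GT/s per lane with 128b/130b encoding) and ignores protocol overhead and the switch itself; `switch_budget` is a made-up helper name, not anything from a real tool.

```python
# Per-lane PCIe 3.0 throughput in GB/s after 128b/130b encoding (assumed gen 3).
PCIE3_GBPS_PER_LANE = 8e9 * 128 / 130 / 8 / 1e9


def switch_budget(upstream_lanes, downstream_lanes):
    """Return (oversubscription ratio, upstream GB/s, downstream GB/s)."""
    up = upstream_lanes * PCIE3_GBPS_PER_LANE
    down = downstream_lanes * PCIE3_GBPS_PER_LANE
    return downstream_lanes / upstream_lanes, up, down


# Hypothetical board: 16 CPU lanes feeding a switch that fans out to 32 lanes.
ratio, up, down = switch_budget(16, 32)
print(f"oversubscription {ratio:.1f}:1, "
      f"upstream {up:.2f} GB/s, downstream {down:.2f} GB/s")
```

The drives could only saturate their own links if no more than half of them are busy at once, which is why this looks more attractive for bursty IO than for graphics.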

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

That's what people say, but I don't believe shit without proof. I really don't understand how no data exists on the pros and cons and the latency it adds. What if you seriously load the channels and it has to do a massive amount of switching... like with SSDs, can they crap out? I have never heard of any actual testing of these to truly tell if they are a solid option, so for me, I won't touch them until I know how they work.

What do you mean about switching? I'm not sure about your SSD example.

They have tested GPUs on PLX connected PCIE slots and it showed no visible degradation of performance.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,001

I am quick to call BS on people claiming that latency in the single-millisecond range or lower is perceivable or significant. If people could perceive that short a time interval, dodging bullets would be an everyday news story.

Let's break this down: it takes longer than 30 milliseconds for the nerves in your finger to react to your brain telling you to click the mouse button. The time it takes your finger to relax and allow the button to lift off the actuator is longer than 50 milliseconds. The time it takes for the SOUND of the mouse click to travel through the air to your ear is an additional 15 milliseconds.

So yeah, I don't think a PLX chip adding 1 or 2 milliseconds in a WORST-CASE SCENARIO is going to slow down any file loading or communications, especially as that is an instruction latency and does not affect bandwidth.

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

Irrelevant comparison.

You do not perceive ms actions, but computers do. If you add 1ms to an SSD that is .009ms, that totally fucks up the SSD. Has anyone tested to see how it handles a high amount of switching? How does it manage it? If you have 32 lanes of SSDs fighting for BW and this goes full derp with a massive amount of switching, does it incur a larger penalty for high-IO random requests? Is the penalty minimal if small amounts of switching occur, but if it needs to do high switching does it become self-defeating? Has anyone tested that or proved it properly manages high IO and highly random requests?

That's what I thought... no one has. I know you pulled 1ms out of your ass, because if the penalty is ms that's fucking shit. 1/.009 = 111.1 times added delay... trash.

That's bullshit that it takes 50ms to click the mouse. I can see the frames loading when I open Windows Explorer. That is 3 frames at 60Hz, 17ms per frame, and you can actually perceive that. You can see movement beyond the flicker threshold... you just don't see an actual flicker (hence the name flicker threshold). Flicker threshold is not the same thing as perceiving motion.

But again, your comparison is irrelevant, and you clearly don't understand how sensitive humans are.

Basically, if you're right and it's 1ms latency, it's total trash already and useless. If it's 1us latency, it's very useful.

With your logic DRAM and SSDs and CPUs and cache can be 1ms and it's tots awesome... see the irrelevant comparison?

That escalated quickly...

To calm your nerves a bit, there are some massively parallel PLX systems out there that are purely used with NAND (and flash) systems. They ensure that the pathway to the DMI (which I think is 3.0 on some) is prioritized. I doubt these systems would be put into place if the PLX trashed the SSD.

I'm still not sure about this switching thing you are talking about, are you referring to the lane switching?

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

Yes. How does it handle highly random and small I/O requests? XPoint is going to have .009ms response times, or 9us. PLX might not handle 9us random I/O requests at high load. How does it switch? If it batches at .1ms that will murder XPoint's usefulness.

Or can it switch 1,000,000s of times in a single second? What's its switch rate and delay? See my point? People don't even understand the tech and think it's good. Way too many unknowns for me to touch it.

Oops, hit reply and not edit.

Also, how does it handle even faster requests? Xeon Phi accessing DRAM or CPU: these are <1us latencies!

I could see PLX killing Xeon Phi's performance due to latency additions.

NAND is X ms or .x ms, while XPoint is X us and cache is nanoseconds to us, and these things happen millions and billions if not more times a second.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,001

Fair points.

Incredible numbers, though I can't think of what I'd use 2TB of 8 GB/s storage for. I'd be tempted to spend the money on a server board with a whole pile of RAM instead. An interesting real-world test would be to have Photoshop use the array as a scratch disk. Then see how much slower things get when you burn through your main memory.

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

Photoshop doesn't gain a whole lot at that point. Better coding and CPU would help. Photoshop at 4K... like running Bridge at 4K with actual full res murdered a 4GHz quad IB... it would hang for 10s of mins thrashing the CPU. Even with GPU enabled the CPU was wrecked. A 4.8GHz HW even struggles.

- Joined

- Aug 12, 2004

- Messages

- 8,374

Interesting read..... Ran across this thread since I just ordered a new Precision T7910 and there is almost zero info on the web about the 4 port M.2 card that I ordered with it. Looking forward to playing with it for sure. My main use is graphic design work in Photoshop, Illustrator, and FlexiSign. Some of the files I work on get pretty massive getting up over 6GB or more in size when its all said and done. Photoshop is multi-threaded but there gets to be diminishing returns on more cores and where raw frequency is more beneficial so I configured it with dual E5-2643 V3 processors which are only 6 core but 3.4ghz native. I could have gone with some higher core CPU's but it would have been at the expense of frequency (or massive amounts of money) and I'm coming from dual X5650 procs so same number of cores but I'll have a considerable frequency boost. Of course when I saw I could add a NVMe PCIe card with a 512GB SSD as my boot drive I could not resist. Now I just need to resist getting 3 more NVMe SSD's to add to the other three spots on the card. Of course RAM is stupid expensive from Dell so I only configured it with 16GB for now. Will be upgrading to to 128GB asap.  The speed numbers on those SSD's using SS is mouth watering. On storage I'm coming from a regular SATA SSD on the current machine....

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

Are you using dual socket? Is it possible to do X99? You could get a 4.5GHz 8-core Xeon with 128GB of ECC quad channel.

- Joined

- Aug 12, 2004

- Messages

- 8,374

Yes, the computer is a Dell Precision T7910 Pro Workstation, which is dual socket. X99, sort of..... the board has the C610 / C612 chipset, and the C610 is essentially the same chipset as the X99. That being said, the Dell mobo in the system "officially" only supports the Xeon E5-26xx (V3 and V4) processors. None of the E5-16xx or desktop i7 processors have the QPI links for dual socket board use. Of course, being a Dell board, there are really no overclocking options in the system setup either. This is the spec sheet from Dell on these..... http://i.dell.com/sites/doccontent/...ecision_Tower_7000_Series_7910_Spec_Sheet.pdf

Not too worried about the RAM. It has 16 dimm slots and supports up to 512GB total (256GB max per CPU socket).

Another interesting thought on this system. It has 8 on board SAS3 (12Gb/s) ports (LSI 3008 controller). If it plays like the LSI cards then that would also be capable of sustained of at least 4Gb/s transfer rates with a pile of lower cost SSD's done as a RAID set. A lot of options with this Dell system that I have really not had the chance to explore.

dvsman

2[H]4U

- Joined

- Dec 2, 2009

- Messages

- 3,628

Just wanted to pop in and say Wow!

thebeephaha

2[H]4U

- Joined

- May 27, 2007

- Messages

- 2,054

Is it possible to buy a PCIe x16 @ 4x M.2 card?

I am looking for one, even 2x dual M.2 at x8, but no luck.

I've only seen 4x cards to date unfortunately.

iamwhoiamtoday

Limp Gawd

- Joined

- Oct 29, 2013

- Messages

- 493

I prematurely (aka: without doing any research) picked up one of these cards and four 128GB SM951 SSDs.

Tried to get it to function on my X9DR3-LN4F+ to no avail. (I can see one drive, not all four)

I assume that I'm up a creek without a Dell Mobo with a PLX chip? Or would any system with a PLX chip theoretically work?

I don't have this board, but:

1. Are you sure you put it in a PCIe x16 slot (not x16 @ x4 speed)?

2. Are the SSDs NVMe? C602/X79 does not support NVMe, I think; maybe try with a newer mobo (Z170/X99/C612?).

3. Maybe you have to create an array first?

Have you tried to turn bifurcation on in the Supermicro bios? X4x4x4x4 mode for the x16 slot? Does it work then?

The options are x4x4x4x4, x4x4x8, x8x4x4, x8x8, x16, and Auto.
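For anyone wondering why the mode matters, here is a rough sketch of how those option strings map to visible drives. It assumes the card has no switch chip of its own (something this thread does not settle), so each bifurcated link can reach exactly one M.2 drive. `parse_bifurcation` and `max_visible_drives` are made-up helper names, and the "Auto" option is left out because its behavior is BIOS-specific.

```python
import re


def parse_bifurcation(mode):
    """Split a mode string like 'x4x4x8' into link widths [4, 4, 8]."""
    widths = [int(w) for w in re.findall(r"x(\d+)", mode)]
    # Reject anything that is not purely a sequence of xN groups.
    if not widths or "".join(f"x{w}" for w in widths) != mode:
        raise ValueError(f"unrecognized mode: {mode!r}")
    return widths


def max_visible_drives(mode):
    """Assumption: one endpoint per link, so one drive per link at most."""
    return len(parse_bifurcation(mode))


for mode in ["x4x4x4x4", "x4x4x8", "x8x4x4", "x8x8", "x16"]:
    widths = parse_bifurcation(mode)
    assert sum(widths) == 16  # every mode carves up the same x16 slot
    print(mode, widths, "->", max_visible_drives(mode), "drive(s) visible")
```

Under that assumption, plain x16 exposes a single link, which would match the "I can see one drive, not all four" symptom.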

As I was interested in the PLX chip latency discussion, I checked out the datasheets from Avago (who now own PLX), and they state a max latency of 150ns. The strategy used to route traffic is cut-through forwarding rather than store-and-forward.

Source:

PCI Express Switches
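To compare that figure with the numbers thrown around earlier in the thread, here is a quick back-of-the-envelope sketch. The 9us XPoint response time and the 1ms worst case are the values other posters quoted, not measurements, and `added_latency_ratio` is just an illustrative helper.

```python
def added_latency_ratio(added_s, device_s):
    """How large the added latency is relative to the device's own latency."""
    return added_s / device_s


XPOINT_S = 9e-6        # 9 us response time quoted earlier in the thread
DATASHEET_S = 150e-9   # max switch latency stated in the Avago datasheet
GUESS_S = 1e-3         # the 1 ms worst-case guess from earlier

guess_ratio = added_latency_ratio(GUESS_S, XPOINT_S)
datasheet_ratio = added_latency_ratio(DATASHEET_S, XPOINT_S)

print(f"1 ms guess:       {guess_ratio:.1f}x the device latency")
print(f"150 ns datasheet: {datasheet_ratio * 100:.2f}% of the device latency")
```

So the 1ms guess would indeed be a roughly 111x penalty, while the datasheet maximum is under 2% of a 9us access. This says nothing about behavior under heavy contention, which is the part nobody here has data on.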

I am not sure these adapter cards can be made without some form of switcher chip onboard. I suspect that there is some switcher on the board pictured. If I remember correctly, the mainboard PCIE slot can only talk to one endpoint. Don't quote me on this though because I am not 100% sure on it, and I have no time to dig in the PCIe specs at the moment.

RAID 0 or other levels on these cards is not really feasible because the OS will always see these as 4 different SSD devices. I know that Intel is working on new UEFI drivers to be able to raid NVMe drives together in the same way as their rapidraid software for SATA drives. This is bad IMO because it still is the system CPU that does the XOR calculations for the raid levels. It is in essence software raid (Except the software in this case is the UEFI firmware). If software raid is going to be used, it is better to use the OS based software raid IMO because it gives the OS more control, and the overhead is essentially the same. Think dmraid on Linux machines, or mirrored pools on Solaris, or fuck all if you are unlucky and have to use windows.

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

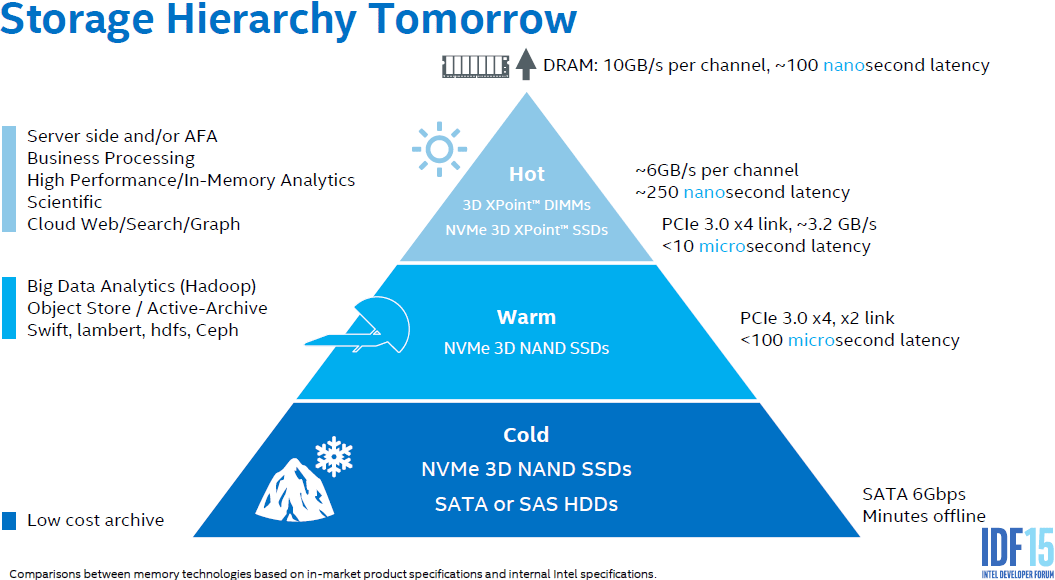

Nice find. What I have always wanted to see was a layout of a MB with the various times it takes to move data: CPU to memory, CPU to PCH, CPU to PCIe, PCH to PCIe.

I tried finding that once but had no luck, which surprises me; that isn't common knowledge, or at least wasn't 1-2 years ago :/ You ever see that info?

You can get a small idea from Intel slides on Optane in PCI vs memory, but it isn't exact.

Some of the numbers you want can be calculated based on the clock rate of the transmission.

For example the CPU->PCIe is defined by the transfer rate of the PCIe bus (PCIe controller is directly in the CPU).

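The bus runs at 8 GT/s, or 8000000000 transfers per second. This means that sending 1 bit on a single lane takes 1/8000000000 of a second, or 0.125 nanoseconds.

However, the encoding on a PCIe 3.0 lane is 128b/130b, which implies 2 things:

- Smallest transfer that can be sent is 128 bits.

- 2 extra bits get added per block of 128 bits.

This means that the effective bit rate of a PCIe 3.0 lane is less than the 8 GBit/s you would expect; to be exact, 8000000000*128/130 = 7.8769 GBit/second (divided by 8 = 0.984 GByte/s, which jibes with the number on Wikipedia's PCI Express page).

The shortest possible packet transfer time is 130 * 0.125 nanoseconds = 16.25 nanoseconds.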
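The arithmetic above can be checked with a few lines. This is only a sketch of the raw signalling math for one PCIe 3.0 lane (8 GT/s, 128b/130b) and the effective per-lane rate that follows from it; it ignores TLP/DLLP framing and any controller or switch latency. The constant names are mine.

```python
TRANSFERS_PER_SECOND = 8_000_000_000  # 8 GT/s on one PCIe 3.0 lane
BLOCK_PAYLOAD_BITS = 128              # payload bits per 128b/130b block
BLOCK_TOTAL_BITS = 130                # payload plus the 2 extra bits

# Time to put a single bit on the wire, in nanoseconds.
bit_time_ns = 1e9 / TRANSFERS_PER_SECOND

# Time to send one full 130-bit block on a single lane.
block_time_ns = BLOCK_TOTAL_BITS * bit_time_ns

# Payload rate once the encoding overhead is subtracted.
effective_gbit = TRANSFERS_PER_SECOND * BLOCK_PAYLOAD_BITS / BLOCK_TOTAL_BITS / 1e9
effective_gbyte = effective_gbit / 8

print(f"bit time:       {bit_time_ns} ns")
print(f"block time:     {block_time_ns} ns")
print(f"effective rate: {effective_gbit:.4f} Gbit/s = {effective_gbyte:.3f} GB/s per lane")
```

Multiply the per-lane rate by the link width (x4, x8, x16) for the link total.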

The CPU->PCH interconnect (DMI link) is basically also PCIe 3.0 (x4 width), and thus the same applies. This is also true for the PCH->PCIe slots.

The real unknowns are these (if someone has details on them, I would very much like to know):

- When the CPU decides to send data across the PCIe controller (using DMA), how much latency is introduced in the PCIe controller in the CPU due to buffering/queueing/prioritization.

- How much latency is introduced by the PCH when transferring PCIe data from its input (the DMI in Intel's docs) to the output PCIe slot.

- Joined

- Aug 12, 2004

- Messages

- 8,374

Here are a couple of high res shots of one of these cards in case anyone wants to ID the actual chips. I can always pull the cover off this T7910 and get shots of the mobo or other shots of the card as well if needed. I now have two of the slots on it populated and plan on getting two more of the 951 NVME 512 drives for the other two slots I still have open. But yeah - each shows as an individual drive. Performance is of course phenomenal in benchmarks but in real work use it has its moments. Depends on what I'm working on though - if I'm working on a massive bus wrap file that is 6+ GB in size in Photoshop its great although having 128GB of ram and dual 3.4 hex core E5 2643 V3 procs helps too.

Dell has the card in both two and 4 port versions. They now call them the Ultra-Speed Quad and Duo. They still cannot be ordered directly though. The only way to get them so far has been with a few different system models. That being said there was just recently a run of the cards sold on eBay that were new pulls. I think they were selling for around $75 or so if I remember right.

I doubt it will be too long before someone comes out with a multi-port HBA card like this that has a raid chip (and probably a nice bit of cache and maybe even a battery) and just presents to the system as a single drive.

High res shots are here - http://www.sl-digital.com/forums/cardfront.jpg http://www.sl-digital.com/forums/cardback.jpg

SomeGuy133

2[H]4U

- Joined

- Apr 12, 2015

- Messages

- 3,447

The other issue is distance, and that's the primary issue here.

Primary unknowns are PCH overhead and distance traveled

There are no added controller issues or major overhead for the most part between PCIe and memory. The biggest issue, from my understanding (limited at best), is distance. Correct me if I am wrong, but I do not think the PCI bus magically added 40x latency. I believe a lot of it is due to distance. Hence why L4 memory has much better latency than DDR4. L4 was not anything special in regards to latency either... it was just that it sits on the actual die.

Again, my understanding of this is very limited because I have not seen any really good detailed stuff on the overall system latency and how and why it arises. My understanding is built off of my own experience with PCs and a few tech examples like Optane and UltraDIMMs showing the difference between various places on a computer.

Hi there!

Please help me find the drivers for the Dell Quad M.2 PCIe card. I want to use it in my PC with four Samsung 950 PRO M.2 drives, but I can't get the card to see all four.

The problem is that Windows accesses only the first drive. In the BIOS I see that only four of sixteen PCI lanes are used.

Maybe there is a manual or documentation for this card?

drescherjm

[H]F Junkie

- Joined

- Nov 19, 2008

- Messages

- 14,941

In BIOS I see that only four of sixteen PCI lanes used.

That is your problem.

You need a motherboard chipset and BIOS that has bifurcation support.

dvsman

2[H]4U

- Joined

- Dec 2, 2009

- Messages

- 3,628

What mobo are you trying to stuff that into man?

ASUS Z87-PLUS @ Intel Z87

iamwhoiamtoday

Limp Gawd

- Joined

- Oct 29, 2013

- Messages

- 493

Meta2, I'm not seeing anything on the ASUS website about it having a PLX chip or any bifurcation support.

I've run into the same problem, having a motherboard that doesn't support bifurcation. Next time I rebuild my rig, that's high up on my list xD

Cards are on the way. No idea on price, but this looks promising: PCI Express Gen 3 Carrier Board for 4 M.2 SSD modules - Amfeltec

and

Liqid (however, that one doesn't look like you can use your own M.2 drive?)

Happy Hopping

Supreme [H]ardness

- Joined

- Jul 1, 2004

- Messages

- 7,837

So, has anyone used Squid?

1) Why do we need that fan? It's not cooling the SSD. If so, how loud is it?

2) Does it require bifurcation support?

3) Can you format them as 4 independent drives, i.e., no RAID?

==================

then there is a guy named Sedna, for $119.9, that seems to do the same thing but has 1 bad customer review

https://www.amazon.com/SEDNA-HyoperDuo-acceleration-function-included/dp/B01EHKCMIG
