Countdown to the Vega Architecture Preview

- Thread starter HardOCP News

- Start date

Ironically no longer making any noise.

I know, right? And where are all the drummer boys that belong to all those drums?

I already have a drum set.

Amusing considering they end with "make some noise", classic marketing

Cheers

MotionBlur

[H]ard|Gawd

- Joined

- Mar 27, 2001

- Messages

- 1,636

I really hope these come through - will buy 2 day 1 if they do.

Deleted member 243478

Guest

Shit look at all those drums! This has to be faster than the TITAN XP now!!! What the hell are they smoking over there...

I guess inside of each drum is a brand new Vega Card. New type of packaging, that is just the dingy smelly warehouse AMD has stuck them in.

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

What catches my eye is the 512TB Virtual Address Space and High Bandwidth Cache Controller - could we see the desktop gaming card coming with a 32GB-128GB SSD (option), caching all the game assets? Being rather transparent to the application via drivers and hardware?

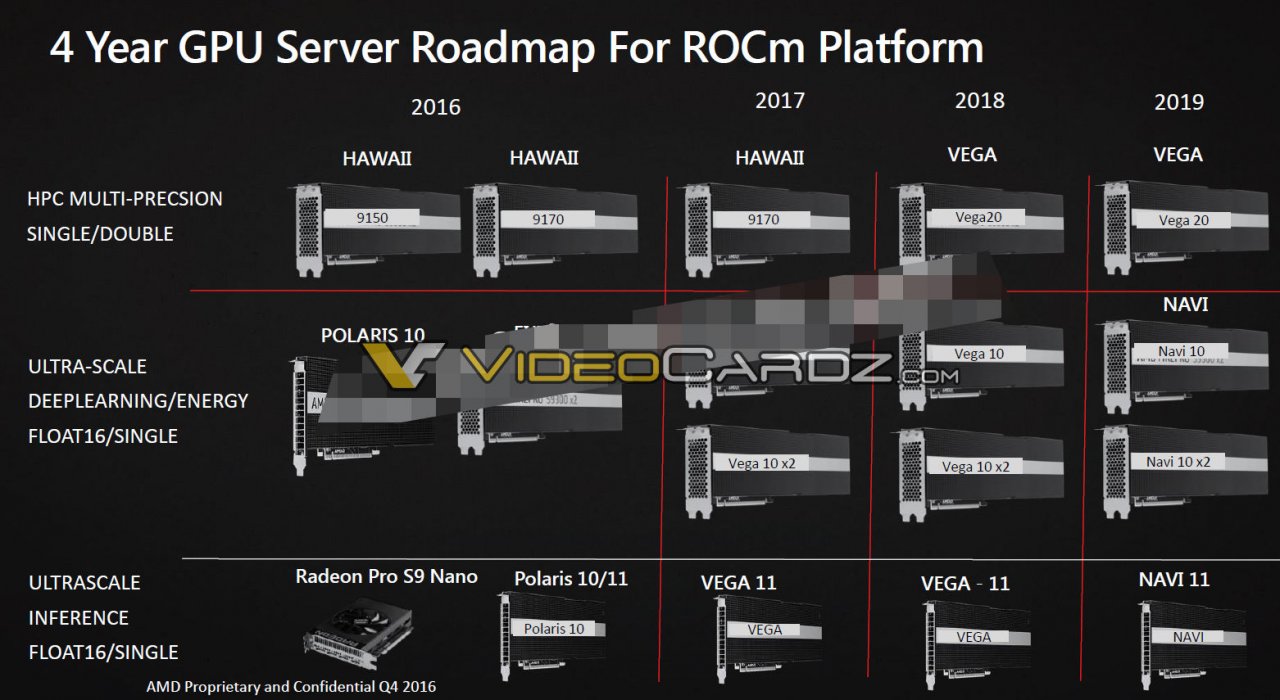
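
For a sense of scale on the 512TB virtual address space mentioned above, here is a minimal sketch of the arithmetic. It assumes "512TB" means 512 TiB (binary units) and uses the 128GB SSD size from the post as a hypothetical comparison point; `address_bits` is an illustrative helper, not anything from AMD's material.

```python
TIB = 2 ** 40  # bytes in one tebibyte (assumption: "TB" in the slide means TiB)
GIB = 2 ** 30  # bytes in one gibibyte


def address_bits(size_bytes: int) -> int:
    """Number of address bits needed to cover size_bytes of byte-addressable space."""
    return (size_bytes - 1).bit_length()


virtual_space = 512 * TIB
hypothetical_ssd = 128 * GIB  # the largest SSD size floated in the post

print("address bits:", address_bits(virtual_space))
print("address space / SSD size:", virtual_space // hypothetical_ssd)
```

Under those assumptions the address space works out to 49 bits, and it is thousands of times larger than even the biggest SSD suggested in the post, so the address space itself would not be the limiting factor.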

Yes the roadmap for Navi shows NexGen memory tech. They already are testing SSG GPUs.

Isn't the SSD 'memory' more for HPC-research-modelling setups rather than gaming?

Because wouldn't 16GB/32GB of DDR4 memory on Skylake and newer CPUs actually be faster than the SSD type option even if it is integral to the GPU?

Games are designed to load assets in a pretty fluid-dynamic way these days, and the speed of having 16GB DDR4 and 8GB minimum VRAM (in reality, if a GPU can have the SSD option then you are looking at GPUs that should have a minimum spec of 12GB GDDR 8Gbit and a 384-bit bus, or much higher) is more than enough for current and next-gen games.

I can see its use for large scale HPC-scientific work-etc though.

Cheers

Napoleon

[H]ard|Gawd

- Joined

- Jan 27, 2003

- Messages

- 1,073

How would people ever see these marketing pieces if they aren't browsing hardware forums? They seem to put a lot of work into them. Does it pop up on Steam?

Most of the time I just play games or browse the web, although I've recently let myself indulge in the [H] underworld more often

Looks like they are using Battlefront to demo Ryzen+Vega at CES.

http://videocardz.com/65343/amd-demos-star-wars-battlefront-on-ryzen-and-vega-at-ces2017

Bit concerned with the performance when looking at the details, such as a 55 FOV, and occasionally dipping below 60fps for 4k Ultra.

Battlefront historically has better performance with AMD cards (the Fury X beats the 980 Ti in this game by around 15%), but it is worth noting that the custom 1080 models average 73fps with a minimum of 65fps.

Realistically, and IMO, in this game it should be better than the 1080 and then even out in other games. Early days, but it looks like it may be tricky competing with the 1080.

It might improve in some ways and also it is different maps, but worth noting the lowered FoV in the CES demo.

Its performance looks more similar to the basic FE 1080 that without tweaks would throttle; that at 4k ultra in Battlefront averages 66fps and minimum 56fps.

To my mind it only needs to compete with the 1080 as there are people still waiting to buy an AMD product, but this is looking like it is somewhere in-between.

Ah well time will tell, fingers crossed the figures do align with the Nvidia 1080.

Cheers

Good point but they used an unreleased DLC and as you stated FOV was changed.

Napoleon

[H]ard|Gawd

- Joined

- Jan 27, 2003

- Messages

- 1,073

What CPU was used for the performance graph you showed? A Ryzen 8-core at 3.4 GHz doesn't scream enthusiast gaming until we have more bench/boost data; a 4- or 6-core could fare better.

TBH, while not inspiring, that would put Vega in between the 1070 and 1080, right where it needs to be. I wonder what the price will be... at $500 it could have stopping power (based on this single AMD-friendly data leak, no benches, and beta drivers).

Yep, one point in its favour is the unreleased DLC that may be more intensive, but against that is the FoV change, kinda mixed bag.

But this may start to indicate why they used a Pascal Titan for the 4k Battlefield 1 comparison between Intel and Ryzen.

Cheers

We've seen AMD release benchmarks before. I'll be impressed and excited about RyZen / Vega when they hand [H] a physical copy of each with no restrictions on whether or not [H] can release the data on the hardware whether or not it is good news for AMD.

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,122

It's not unreleased, I was playing last night and the default FOV is 55.

edit: see here: https://hardforum.com/threads/what-fov-are-you-using-for-star-wars-battlefront-on-pc.1882134/

Well either way 55 to 75 is a pretty big difference of what is being shown on the screen, an FOV of 75 degrees will give you many more objects on the screen, more polygons, more textures.

55 is the default FOV for SW: Battlefront. It's the vertical FOV setting.

It does raise the question of what FOV settings reviewers use; it's a kinda silly low default.

I mean, even they show 55 being pretty close to the minimum on the settings slider, and I doubt anyone keeps it that low as you lose peripheral information/environment motion (also linked to motion sickness sensitivity).

Cheers

55 vertical FOV is about 90~ horizontal FOV on a 16:9 monitor.
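
For anyone wanting to check the vertical-to-horizontal conversion, here is a minimal sketch using the standard perspective-projection relation, hFOV = 2 * atan(tan(vFOV / 2) * aspect). The function name is illustrative, and it assumes the game's slider really is a plain vertical FOV in degrees with no extra scaling, which this thread does not confirm.

```python
import math


def vertical_to_horizontal_fov(vfov_deg: float, aspect: float = 16 / 9) -> float:
    """Convert a vertical FOV (degrees) to horizontal FOV for a given width/height ratio."""
    half_vertical = math.radians(vfov_deg) / 2
    half_horizontal = math.atan(math.tan(half_vertical) * aspect)
    return math.degrees(2 * half_horizontal)


for vfov in (44, 55, 75, 110):
    print(f"vertical {vfov} -> horizontal {vertical_to_horizontal_fov(vfov):.1f}")
```

With these assumptions, 55 vertical comes out a little under 86 horizontal on 16:9, so "about 90" is in the right ballpark but slightly generous.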

You beat me to it heh, I was going to mention that at 55 it sounds like they're using vertical FOV

Yes, but it can still cause motion sickness and loss of peripheral game information; otherwise why offer a higher scale, where the comparable figure would be around 101-105?

How many other games use a FOV of 55 or near that figure by default?

I would say nearly none.

Just curious, how many here who play the game actually leave it at 55?

I have only read of members changing it.

Just to add, I appreciate it is more vertical focused in Battlefront but does it also adjust width as part of the calculation?

Maybe I am over-reacting to it in Battlefront *shrug*.

Cheers

Not gonna happen unless Kyle has persuaded AMD to respect truth in journalism. I'm sure he will buy them ASAP to provide us with reviews like with Polaris. He's good like that.

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,122

guru3d and GN both left it at default 55. They didn't have anything bad to say about it being 55 either. They (g3d) simply said it's adjustable between 44-110, so it has the range to prevent motion sickness. FOV doesn't have a set standard either.

Well, it depends on the game and monitor size, resolutions used, how many monitors, etc. Kinda hard to say why they used 55, but in any case it shouldn't matter too much outside of what other reviews have used for their settings.

Yeah, there is nothing bad about it; it's all about the monitor and the person, that's about it.

Yes, but PCIe bandwidth is a bottleneck once other overhead is counted (the theoretical figure is ~16 GB/s), and two cards would each get half that at x8 PCIe. CPU writes also hold up the bus for any other card and increase CPU usage, and the asset is most likely coming from an SSD on the CPU side anyway. Add up the latencies of the traditional path and compare it to the direct path on the GPU side, and I would say the direct path would be way faster. It would probably be more for compute and other unique workloads, but I can see a definite advantage for gaming if it could work. I am just guessing about a gaming card with an SSD, and it may be too early for this round.
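
The ~16 GB/s figure and the "half that at x8" point can be reproduced with a quick back-of-the-envelope sketch. It uses the published PCIe 3.0 numbers (8 GT/s per lane, 128b/130b encoding) and ignores packet and protocol overhead, so real-world throughput would be lower; the function name is made up for illustration.

```python
# PCIe 3.0 signalling: 8 GT/s per lane with 128b/130b line encoding.
TRANSFERS_PER_SECOND = 8e9
ENCODING_EFFICIENCY = 128 / 130


def pcie3_bandwidth_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    bits_per_second = TRANSFERS_PER_SECOND * ENCODING_EFFICIENCY * lanes
    return bits_per_second / 8 / 1e9


for lanes in (16, 8):
    print(f"x{lanes}: {pcie3_bandwidth_gb_per_s(lanes):.2f} GB/s")
```

That gives roughly 15.75 GB/s for x16 and 7.88 GB/s for x8, which is the ceiling any asset streamed from system memory or a CPU-side SSD has to fit under.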

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,122

8hrs mst...

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

From what I hear it's mostly the oil and gas exploration industries. Apparently they have huge lookup tables when exploring and the SSG enables them to speed this up significantly. Which is huge, and really they can't use Nvidia to do this, as they don't have the patent or capability yet... Basically they see nearly instant performance with AMD or ten minutes with Nvidia, even though Nvidia has the super duper die tech right now. All due to PCIe bus limitations and data transportation. Imagine an array of cards like Nvidia uses for deep learning, instead employed in this role, all trying to saturate the PCIe bus to look up tables from storage... that shit ain't going to work.

It's like AMD with the IMC CPU in the good old Athlon 64 days.

I can see this being very beneficial in texture dense games at 4k and 8k, it could enable all sorts of crazy stuff to be done with game design and persistent worlds. Imagine your GPU keeps a cache of an EvE-online type world so that there are no loading times and seamless transitions...

That just reinforces my point: it is designed specifically for HPC-scientific-modelling implementations.

This is integrated SSD memory vs traditional large scale nodes and massive modelling requirements.

We will not need this for consumer GPUs.

Nvidia has a few other approaches in the HPC world but that is for other threads, but I do see the benefit of the GPU-SSD solution implemented by AMD in large scale HPC-science projects.

Cheers

SighTurtle

[H]ard|Gawd

- Joined

- Jul 29, 2016

- Messages

- 1,410

http://videocardz.com/65406/exclusive-amd-vega-presentation

Looks like all the presentation slides are here.

limitedaccess

Supreme [H]ardness

- Joined

- May 10, 2010

- Messages

- 7,594

The original demoed showcase was a video editing workload, and the comparison point was the speedup from storage attached to the GPU vs. system storage, not storage attached to the GPU vs. system memory.

Makes sense, and it is why I cannot see it working for gaming or the consumer cards that some feel it will be implemented in; the context being a discussion I am having with a few people.

Thanks

Using the most powerful card on the market was logical to eliminate bottlenecks from the GPU as much as possible. Vega wasn't ready.

But then they go and play on 4K, reducing load for CPU. When comparing CPUs that is a weird move.

What do you mean Vega was not ready?

At the very same presentation 5-10 minutes later Lisa used Vega with the unreleased Battlefront DLC.

Cheers

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

For the enthusiast products I could see it as a possibility. Maybe even an upgrade option. Ideally they just need a storage solution on par with PCIe 3.0 for bandwidth (16GB/s). So a partner could potentially add DDR3/4 or whatever to the board to increase the effective pool size. In theory the Vega boards are short enough, thanks to the HBM, to fit all kinds of things on a regular sized PCB. 16GB of DDR3 is ~$100 retail; a partner I'm sure could get it cheaper. That's not a huge add for a high end product and would probably be beneficial, as it would cut PCIe congestion and CPU load. 8+16GB should be sufficient for most games out there. That cost is easy enough to pass on to the consumer and wouldn't be a requirement.

Shintai

Supreme [H]ardness

- Joined

- Jul 1, 2016

- Messages

- 5,678

Looks like the (improved) geometry and primitive shaders won't be used by default.

https://www.pcper.com/reviews/Graph...w-Redesigned-Memory-Architecture/Primitive-Sh

More developer work needed.

Current output of cards:

http://www.hardware.fr/articles/953-7/performances-theoriques-geometrie.html

The new programmable geometry pipeline on Vega will offer up to 2x the peak throughput per clock compared to previous generations by utilizing a new “primitive shader.” This new shader combines the functions of vertex and geometry shader and, as AMD told it to me, “with the right knowledge” you can discard game based primitives at an incredible rate. This right knowledge though is the crucial component – it is something that has to be coded for directly and isn’t something that AMD or Vega will be able to do behind the scenes.

It is a shame Polaris does not have this, as it would have given AMD a great gaming development position and it would then be more universal from console to PC gaming; it would have put Nvidia under big pressure IMO.

I still think Nvidia underestimated the influence that modern consoles based on PC CPU-GPUs have on development-optimisation-functionality, definitely helping AMD drivers for PC IMO and also enabling them to better push their GPUOpen and the synergy it has with consoles.

Cheers

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

I believe the geometry shaders still get used for part of the pipeline. What needs programming is the discard. I'm guessing it's similar to this: http://gpuopen.com/geometryfx-1-2-cluster-culling/

The vertex and geometry shader part is most likely an upcoming API thing we haven't seen. Let devs run a shader to create triangles however they see fit. Limited use outside of development now, but cool feature for the future. Just one more stage becoming programmable.

Deleted member 93354

Guest

Programmable vertex shaders have been in the spec since DX 9. Or am I missing something?

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,085

Anarchist4000

[H]ard|Gawd

- Joined

- Jun 10, 2001

- Messages

- 1,659

Sorry, primitive shaders. What they seem to be adding is a programmable pipeline enveloping vertex, geometry, tessellation, etc. Basically a compute shader that shits triangles, allowing a dev to transform, tessellate, cull, and batch how they see fit. There was a SIGGRAPH presentation on GPU Driven Rendering Pipelines included in that link that would seemingly encompass a lot of what was proposed with the primitive shaders. It's not just transforming everything, but allowing interesting methods to organize and cull based on some technique.
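
To make the "discard primitives early" idea concrete, here is a CPU-side sketch of one common culling test: dropping back-facing and zero-area triangles before they would be rasterized. This is not AMD's primitive shader or the GeometryFX code; it assumes triangles are already in screen space and that counter-clockwise winding means front-facing, and all names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def signed_area_2d(tri: Triangle) -> float:
    """Signed screen-space area: positive for counter-clockwise winding."""
    (x0, y0, _), (x1, y1, _), (x2, y2, _) = tri
    return 0.5 * ((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0))


def cull(triangles: List[Triangle]) -> List[Triangle]:
    """Keep only front-facing triangles with non-zero area (assumed CCW = front)."""
    return [tri for tri in triangles if signed_area_2d(tri) > 0.0]


front = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0))
back = ((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (1.0, 0.0, 0.0))
degenerate = ((0.0, 0.0, 0.0), (1.0, 1.0, 0.0), (2.0, 2.0, 0.0))

survivors = cull([front, back, degenerate])
print(len(survivors), "of 3 triangles survive culling")
```

The point of doing this in a programmable stage is that the developer decides which tests to run and when, rather than relying on fixed-function hardware to reject triangles after the work to produce them has already been done.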

Vega 10x2... That's like a dual titan.
