Hi all, first post here. I've been lurking and admiring for some time and thought this was a good time to join in. I'm not super well-versed in hardware, let alone large storage solutions, so I figured this would be a good way to learn some new tricks.

Currently I have an 8-port Dell PE 2950 and a PowerVault MD1000, which came with a PERC 5/E RAID card. I've tested it out with a handful of disks I had on hand and everything seems to be working as expected. This is all for a media server build, so speed really is not an issue-- it's all about capacity and failure tolerance for me. The 2950 is running Ubuntu Server 14.04 LTS since I'm fairly familiar with it.

Originally, I thought I'd fill the MD1000 with 2TB drives in a RAID-10 off the 5/E card, but if at all possible I'd love to go with 4TB drives in RAIDZ3. The problem is of course that the 5/E supports neither 4TB drives nor JBOD for ZFS. Last week, before deciding to go for ZFS, I went ahead and picked up a PERC H800, which would do 4TB drives but is still hardware RAID, not JBOD. From what I've read, the H800 could only do a series of 1-disk RAID-0 vdisks, which is not only not the right thing for ZFS, but might actually invalidate the benefits of it. So scratch that. From what I can tell, two options might actually get me what I'm looking for: a PERC H200e (which can do JBOD for sure, though I can't determine 4TB compatibility?) or an LSI 2008 HBA, which I believe would check all the boxes for me, but tbh I'm not entirely sure which specific one would be the right choice for the 2950.

For disks, I'm thinking WD Red 4TB, though a buddy of mine recommended comparable HGST 4TB drives based on their reputation for a low failure rate. (I think from Backblaze's most recently published reports..) As long as neither is a terrible decision (I've read to avoid Green drives for disk arrays), I think I'm good there.

Interposers. What the hell do they do? I've read conflicting reports that interposers are required / not required for SATA disks, and that they do or do not limit recognized disk capacity to 2TB in the MD1000. I figured worst-case scenario, I could go ahead and buy a couple of 4TB disks and a couple of interposers, try it both ways, and see which one works out properly-- unless you guys know for sure.

Configuration... 14-disk RAIDZ3 plus a hot spare = 44 TB usable (11 data disks x 4TB)? It gets a little fuzzy for me when trying to work out how this all relates to block size and what constitutes a good idea vs a bad idea. I've seen a few setups on here with 11-disk RAIDZ3 plus a hot spare, but I wouldn't quite be able to articulate why that would be better or worse than a 14-disk setup.
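
For reference, here's the back-of-envelope math I'm using, as a quick Python sketch. It only counts data disks (total disks minus 3 parity disks for RAIDZ3) times the nominal disk size, and converts TB to TiB, since that's the unit most tools will report. It ignores ZFS metadata, padding and reserved space, so the real number would be lower, and the hot spare adds nothing. The function names are just ones I made up for illustration.

```python
def raidz_usable_tb(disks: int, parity: int, disk_tb: float) -> float:
    """Nominal usable capacity of a single RAIDZ vdev, in decimal TB.

    Rough estimate only: (disks - parity) * disk size, with no allowance
    for ZFS metadata, padding, or reserved space.
    """
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes (10**12 bytes) to tebibytes (2**40 bytes)."""
    return tb * 10**12 / 2**40


if __name__ == "__main__":
    # The two layouts I'm comparing, both RAIDZ3 (parity=3) on 4TB disks.
    for disks in (14, 11):
        usable = raidz_usable_tb(disks, parity=3, disk_tb=4.0)
        print(f"{disks}-disk RAIDZ3: {usable:.0f} TB nominal, "
              f"roughly {tb_to_tib(usable):.1f} TiB before ZFS overhead")
```

So on paper the 14-disk layout gives 44 TB and the 11-disk layout gives 32 TB, both with the same three-disk failure tolerance per vdev.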

The OS on the 2950 is on a 2-disk RAID 0-- my other tinge of paranoia is about using software-based ZFS and then having the OS disk(s) go belly-up on me, and therefore losing the config for the ZFS pool.. is there any way to mitigate this risk?

Last but not least, the 2950 has dual quad-core Xeons (max spec, I don't remember precisely atm) but only 8GB of RAM, so I'm unsure if that will be sufficient for what will effectively become a NAS, or if I should look at adding more. Probably will.

I feel like I've done most of my homework at this point, but can't quite confirm compatibility on the setup without some advice. Muchos thanks for any help pointing me in the right direction.

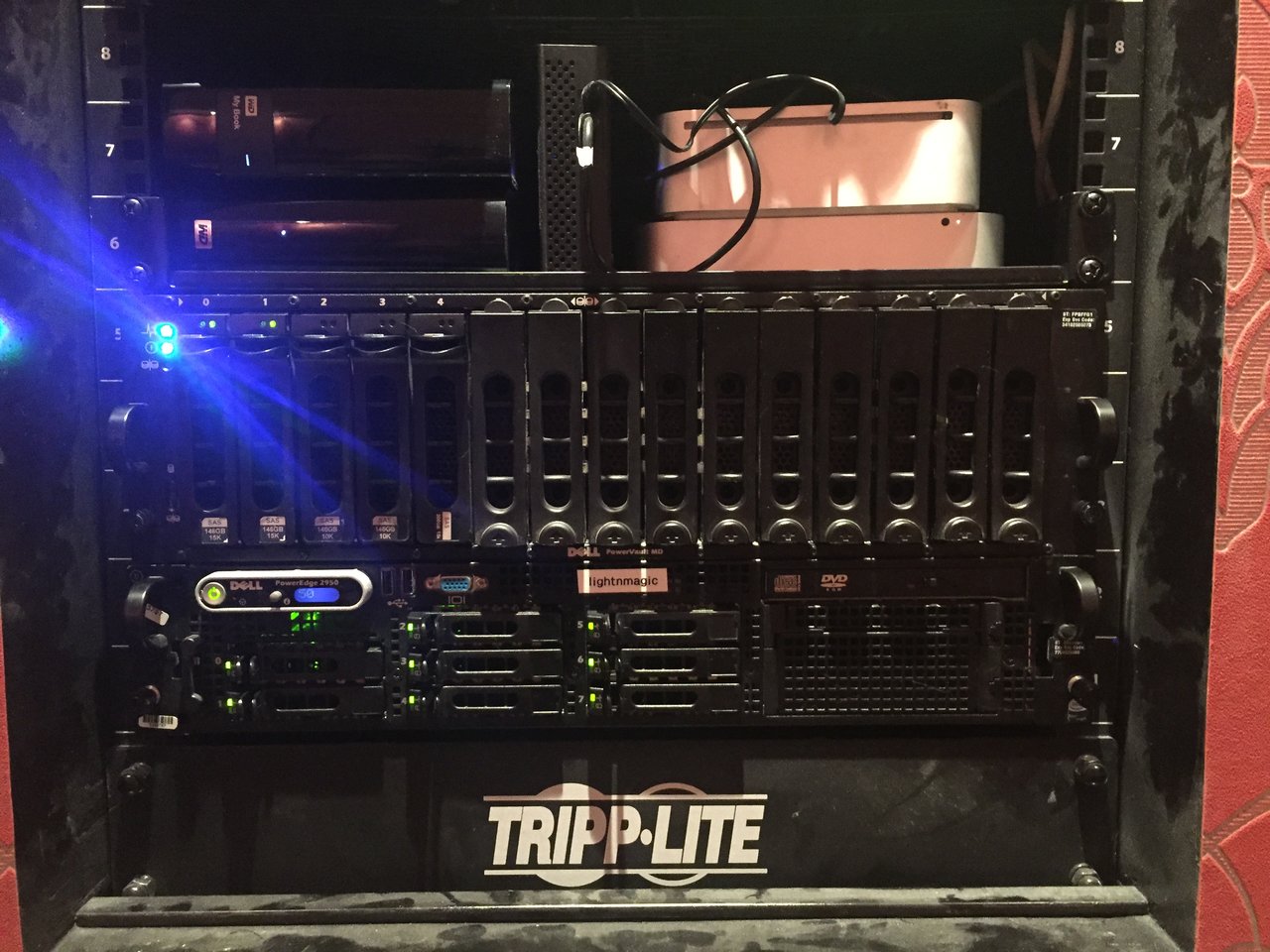

Included potato-phone picture for reference. Why the hell is it so dusty? Why is that blue LED so damn bright? I have no idea in either case. Cheers.

