erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,894

"Nothing much is news-worthy in this news." - XL-R8R

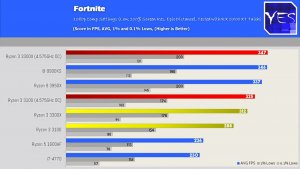

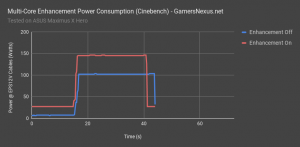

"A comprehensive review of the Intel Core i9-10900K 10-core/20-thread processor by Chinese tech publication TecLab leaked to the web on video sharing site bilibili. Its testing data reveals that Intel has a fighting chance against the Ryzen 9 3900X both in gaming- and non-gaming tasks despite a deficit of 2 cores; whereas the much pricier Ryzen 9 3950X only enjoys leads in multi-threaded synthetic- or productivity benchmarks.

Much of Intel's performance lead is attributed to a fairly high core count, significantly higher clock speeds than the AMD chips, and improved boosting algorithms, such as Thermal Velocity Boost, which helps the chip in gaming tests. Where Intel loses hard to AMD is power draw and energy efficiency. TecLab tested the three chips with comparable memory and identical graphics setups."

https://www.techpowerup.com/267287/...eview-leaked-suggests-intel-option-formidable
