
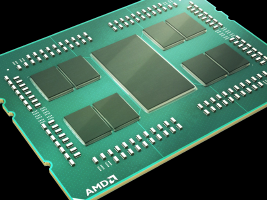

While AMD recently drew attention for using a chiplet-based design in its EPYC CPUs, Semiconductor Engineering reports that numerous other companies are working on chiplet-based products. The report claims that Marvell is actually the first, and so far the only, company to deploy the chiplet concept commercially, but a senior director at the company said, "Next year you’ll be hearing a lot more about chiplets." Multiple industry leaders claim that the chiplet approach is becoming more viable as Moore's Law "comes to a grinding halt." Nevertheless, multi-chip designs still face major hurdles, such as added testing complexity, packaging concerns, and managing IP from different vendors. Still, Semiconductor Engineering's experts appear to think chiplets are the next logical step in silicon chip design, and their opinions are worth a read.

"Chiplets will increase the rate of sales, and there will be more innovation," said Amin Shokrollahi, CEO of Kandou Bus. "And this will accelerate innovation because you are designing only one part. This has been the driver among IP houses and the IP business in general. You grab one IP from here and another from there. But where this has run into problems is putting these IPs together. That part is tough."

