
Build: 3970x, dual 2080ti, 8TB m.2 RAID = Render Monster

- Thread starter thesmokingman


Why suggest StoreMI and not PrimoCache? Because it's free instead of some tens of dollars? I'm not even sure StoreMI is compatible with RAID. Get the real thing, aka PrimoCache. And a YouTube video suggests it works great with Optane on an AMD CPU and motherboard.

Why ECC for a rendering workstation? Note, I am not asking what ECC is or does.

Also, for Optane, isn't StoreMI not that great at caching? Wouldn't he need to use something like PrimoCache?

Well, it's huge, and the motherboard stays flat in it... while being fed air (and dust) from the top.

The Core X9 is so darn great to work with, it's like cheating. Pop the top off and it's like an open bench.


Not very fond of it. I favor quite the opposite: small, micro-ATX, with the motherboard staying rather vertical.

Silentbob343

[H]ard|Gawd

- Joined

- Aug 2, 2004

- Messages

- 1,944

I didn't suggest StoreMI over PrimoCache. In fact, I suggested the opposite.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

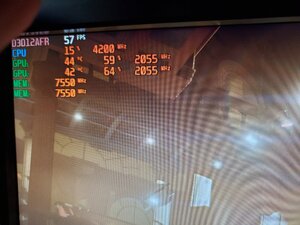

My friend is out of the country, so I can't get my hands on the 2080 Tis yet. It will be a day or two before I can throw blocks on them. For now, I've validated the memory, which is 3600MHz 16-16-16, at stock CPU speeds. I'm priming it right now at [email protected] (loaded), and it's kind of crazy watching the power draw climb. Edit: dropped it 50MHz and lowered some volts, since the draw numbers were getting large.

Mmm, temps peak at 76°C with PrimeAVX at max load (not in test mode), and water temps are chilling at 27°C. Damn, these ML120s can get loud, though they are running at 2.5K rpm. Since the water temp is low, the heat is all in the block. Going to lower the voltage some more and bring the fan PWM scaling down so it's not a tornado in here, lol.


somebrains

[H]ard|Gawd

- Joined

- Nov 10, 2013

- Messages

- 1,668

So how about running some render tests, CPU only, stock and then clocked?

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

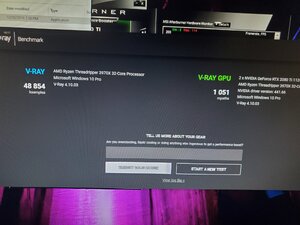

Did a quick V-Ray bench and got 45667, good for 4th on the 3970X listings.

https://benchmark.chaosgroup.com/ne...adripper+3970X+32-Core+Processor+x64&id=14057


somebrains

[H]ard|Gawd

- Joined

- Nov 10, 2013

- Messages

- 1,668

Cool.

Does your buddy have any existing projects you can pull and run?

My concern would be RAM usage for whatever projects are running now.

There was another TR thread where they didn't get my points.

A build optimized for a given project, or slate of projects, changes once the next set of contracts get signed.

The builds change, and so on and so forth.

PCIe lanes in reserve for future capture cards, NICs, storage, etc. are abundant.

So there's headroom to accommodate growth once invoices start speeding up the velocity of work, which I find exciting and enjoyable.

I've spent enough time in studios where, at the end of a given product arc, I'll look over 12-24 main builds like this TR box, plus up to 50 generic workstations feeding the mains.

It's crazy, but in smaller studios you get the above gear or more, with fewer people running clusters of gear dedicated to project lines or workflows.

Do not touch StoreMI.

WARNING

I'm literally saving you from calamity, disaster, and a failed friendship in the making. Trust me!!

Create a stable RAID 10 using a true NVMe RAID HBA, like Broadcom or LSI NVMe cards.

Or use motherboard RAID 10 at the software level. Don't use RAID 0. And for the love of God, do not use tiered caching at all. It's like politicians: it sounds great behind the pulpit, but when it's allowed to run the office, it's a public disaster in the making.

This reply is for anyone reading.
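For anyone weighing the RAID 0 vs. RAID 10 advice above, here's a rough Python sketch of the trade-off. It assumes four 2TB NVMe drives (a hypothetical split of the 8TB in the thread title) and only models usable capacity and guaranteed fault tolerance, not performance or any particular HBA's behavior.

```python
def raid_summary(level: str, drives: int, drive_tb: float) -> tuple[float, int]:
    """Return (usable TB, drive failures the array is guaranteed to survive)."""
    if level == "raid0":
        # Pure striping: every TB is usable, but losing any one drive loses the array.
        return drives * drive_tb, 0
    if level == "raid10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        # Striped mirror pairs: half the raw capacity holds the mirror copies.
        # One failure is always survivable; a second only if it hits another pair.
        return drives * drive_tb / 2, 1
    raise ValueError(f"unsupported RAID level: {level}")


# Hypothetical array: four 2TB NVMe drives.
for level in ("raid0", "raid10"):
    usable_tb, survives = raid_summary(level, drives=4, drive_tb=2.0)
    print(f"{level}: {usable_tb:.1f} TB usable, survives {survives} guaranteed failure(s)")
```

RAID 10 can sometimes survive a second failure if it lands in a different mirror pair, which is why the sketch reports only the guaranteed minimum.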

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

I get the dual 2080 Tis tonight. While adding those into the loop, I'll be plumbing in QDCs as well, and maybe some casters.

Don't use StoreMI. RAID 0 is fine for the render drive; that's kind of the whole point of the bifurcation AIC.
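As a rough illustration of why RAID 0 suits a render scratch drive on a bifurcated card, this Python sketch maps logical blocks to drives the way simple striping does: consecutive stripe units rotate round-robin across the drives, so a large sequential read keeps all of them busy. The 128-block stripe size and four-drive count are hypothetical; real RAID implementations choose their own stripe sizes and metadata layout.

```python
def raid0_locate(lba: int, drives: int, stripe_blocks: int) -> tuple[int, int]:
    """Map a logical block address to (drive index, block offset on that drive)."""
    stripe_unit = lba // stripe_blocks       # which stripe unit the block falls in
    drive = stripe_unit % drives             # stripe units rotate round-robin across drives
    row = stripe_unit // drives              # full rows of stripe units before this one
    offset = row * stripe_blocks + lba % stripe_blocks
    return drive, offset


# Hypothetical layout: 4 drives, 128-block stripe units.
# A 512-block sequential read is spread over every drive.
touched = {raid0_locate(lba, drives=4, stripe_blocks=128)[0] for lba in range(512)}
print(sorted(touched))  # [0, 1, 2, 3]
```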


IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

Brand new box of Delta 14mmHg high-pressure PWM fans. Loud AF at full throttle but a whisper at 500 rpm.

So are you putting it one county over or two when you put it under load?


There's no need to run them past 1100 rpm. They go to 3700, which is crazy.

I run them via a Corsair iCUE profile, so they aren't throttled up and down by motherboard or CPU load.

I have a Corsair Commander Pro, which is a very good PWM fan controller.

I'll make a video later with them running quietly.

I hand-built silent, workstation, and gaming profiles, and these are hands down the absolute BEST radiator fans I have ever laid my hands on.
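Fan profiles like these are usually piecewise-linear curves keyed to a temperature sensor. The Python sketch below models one such curve, assuming it is keyed to coolant temperature (the post doesn't say which sensor is used) and capped at the 1100 rpm mentioned above. The curve points and the `fan_rpm` helper are hypothetical and are not iCUE's API.

```python
from bisect import bisect_right

# Hypothetical "workstation" profile: (coolant temperature in °C, fan rpm).
CURVE = [(25.0, 500), (30.0, 700), (35.0, 900), (40.0, 1100)]
MAX_RPM = 1100  # cap far below the fans' 3700 rpm maximum


def fan_rpm(coolant_c: float, curve=CURVE) -> int:
    """Linearly interpolate fan speed from coolant temperature, clamped at both ends."""
    temps = [t for t, _ in curve]
    if coolant_c <= temps[0]:
        return min(curve[0][1], MAX_RPM)
    if coolant_c >= temps[-1]:
        return min(curve[-1][1], MAX_RPM)
    i = bisect_right(temps, coolant_c)  # index of the first point hotter than the reading
    (t0, r0), (t1, r1) = curve[i - 1], curve[i]
    rpm = r0 + (r1 - r0) * (coolant_c - t0) / (t1 - t0)
    return min(round(rpm), MAX_RPM)


print(fan_rpm(27.0))  # 580
```

Clamping at both ends keeps the fans at a quiet floor when the loop is cool and stops them from ever ramping toward full speed.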

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617


Oh yea, time to finish this up!

I think you're gonna "Jizz in My Pants" (The Lonely Island) when you get those dual cards running.

I would love to see some rendering benches that can use SLI. Don't forget your NVLink bridge; they are not included with GPUs.

They aren't cheap either.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

OMFG, finding 2-slot Quadro 6K or 8K bridges is a PITA. I will get one eventually, but SLI is secondary since it's not important for rendering. It would be nice for game benching, though.

Update: got the blocks on but am waiting for the backplates. Will use a Scalar bridge also. It's annoying, though, that the CPU block is ARGB but the GPU block is 12V. Now I need more RGB cables, smh. Will also plumb QDCs to the rads, so one could pull both rads as one unit to access the motherboard, cards, etc.


somebrains

[H]ard|Gawd

- Joined

- Nov 10, 2013

- Messages

- 1,668

The bridges are a problem, always have been.

Test before and after to see what the real benefits are, because I have my doubts based on the rigs I've built.


thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

Getting late here, but I ran some Time Spy and Fire Strike. Darn, just realized it's running the RAM at 3200 instead of 3600. Oh well, will fix it tomorrow.

https://www.3dmark.com/spy/9752103

https://www.3dmark.com/fs/21270633

Also, your FS score will be low because you're not NVLinking your cards. But I think you said you were not going to SLI.

Here's hoping nV actually releases checkerboard rendering soon. I would toss a 2060 alongside my 2080 Ti so I could get more fps while ray tracing. Let the 2060 supplement from behind.

DogsofJune

Supreme [H]ardness

- Joined

- Nov 7, 2008

- Messages

- 4,638

I am interested in seeing that.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

I ordered one at a rip-off rate, shrugs. We don't need SLI on a production machine, but I will use it for gaming. Hopefully it gets here soon so I can throw some benches at it.

DogsofJune

Supreme [H]ardness

- Joined

- Nov 7, 2008

- Messages

- 4,638

That's not a bad processor. Nice build.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

Thanks, man. At this point, running a higher all-core overclock is possible, but the cooling is the limiting factor, that and of course the power draw. In SLI on V-Ray, it was pushing over 1100W, higher at the wall. 24/7 use will be with the CPU at stock with PBO and the GPUs overclocked, for the best balance of heat/power vs. the cooling on hand.
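The gap between the 1100W figure and the higher number at the wall comes from PSU efficiency: wall draw is the DC load divided by efficiency. A minimal Python sketch, assuming the 1100W figure is the DC load the PSU delivers and that the PSU runs at 90% efficiency there (a placeholder; read the real figure off the PSU's efficiency curve):

```python
def wall_draw_watts(dc_load_w: float, efficiency: float) -> float:
    """AC watts drawn from the outlet for a DC load at a given PSU efficiency (0 to 1]."""
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_load_w / efficiency


# Assumed 90% efficiency at ~1100W load; the real value depends on the PSU and load.
print(round(wall_draw_watts(1100, 0.90)))  # 1222
```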

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

My trusty old EVGA 1200W PSU was able to push 2 old Titans in SLI easily, something my Antec 1600W Platinum couldn't do.

Is the EVGA 1600W PSU that much better?

I'm guessing you had a multi-rail PSU then. That has nothing to do with quality, only design. Single-rail PSUs like the EVGA G/P/T are great for high-power setups where you may not know what your exact power draw per component is. Multi-rail PSUs were kind of popular a few years back, but it seems not so much anymore. I gave up on multi-rail designs back then since I was a long-time quad-GPU user/bencher. With multiple high-power devices, it became a nightmare balancing what went on which rail. So you'd get rails that were overtaxed and rails that were underused, etc.
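To make the rail-balancing problem concrete, here's a simplified Python sketch: on a multi-rail unit each 12V rail has its own limit, so one badly loaded rail can trip even when the total load is well under what the PSU could deliver. The rail count, 40A (480W) per-rail limits, loads, and single-rail rating are all hypothetical numbers for illustration.

```python
RAIL_LIMITS_W = {"12V1": 480, "12V2": 480, "12V3": 480, "12V4": 480}  # 40 A x 12 V each
SINGLE_RAIL_LIMIT_W = 1600  # hypothetical single-rail 12 V rating


def overloaded_rails(loads_w: dict[str, float], limits_w: dict[str, float]) -> list[str]:
    """Return the rails whose assigned load exceeds that rail's own limit."""
    return [rail for rail, load in loads_w.items() if load > limits_w[rail]]


# Uneven cabling: two 300 W cards share 12V1 while 12V4 only feeds drives and fans.
loads = {"12V1": 600, "12V2": 300, "12V3": 300, "12V4": 100}

print(overloaded_rails(loads, RAIL_LIMITS_W))      # ['12V1']
print(sum(loads.values()) <= SINGLE_RAIL_LIMIT_W)  # True: 1300 W fits one shared rail
```

The same 1300W that trips 12V1 on the multi-rail layout fits comfortably on a single shared rail, which is the practical argument for single-rail units in multi-GPU builds.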

I’m still using the EVGA 1200. No more SLI, but I do have a [email protected] 2080Ti and 6 hard drives. It takes it all in stride; best PSU I’ve ever owned. The Antec 1600 Platinum was almost double the price and was junk.

somebrains

[H]ard|Gawd

- Joined

- Nov 10, 2013

- Messages

- 1,668

There is a reason why studios run dedicated 20A circuits as their workload increases.

At some point, your single/dual-box TR user is going to have to contemplate subpanel upgrades if they go multi-GPU, or if TR4 draws 2x what TR3 does.

Storage arrays are easy to take to single phase.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

Nah, I don't need a 20A circuit. System draw doesn't approach the limits of 15A.
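For the 15A question, the usual back-of-the-envelope check is volts × amps, derated to 80% for continuous loads. This Python sketch assumes a North American 120V branch circuit and a hypothetical 1200W sustained wall draw; local electrical code has the final say.

```python
def circuit_headroom_w(load_w: float, volts: float = 120.0, amps: float = 15.0,
                       continuous_factor: float = 0.8) -> float:
    """Watts left on a branch circuit after a continuous load (80% derating by default)."""
    return volts * amps * continuous_factor - load_w


# Hypothetical 1200W sustained draw from the workstation.
print(circuit_headroom_w(1200))           # 240.0 W left on 15A (1440 W continuous)
print(circuit_headroom_w(1200, amps=20))  # 720.0 W left on 20A (1920 W continuous)
```

A single box fits on 15A under these assumptions; it's the NAS, a second box, and UPS recharge load stacking on the same circuit that eats the remaining headroom.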

somebrains

[H]ard|Gawd

- Joined

- Nov 10, 2013

- Messages

- 1,668

The system doesn't on its own; I was thinking of the NAS and other job-flow peripherals.

Add a 2nd box, or expand this build with more GPUs, plus battery backups for everything.

vxspiritxv

[H]ard|Gawd

- Joined

- Feb 10, 2001

- Messages

- 1,610

My APC SUA1500 goes into overload status with a single 2080 Ti and a 3960X both at full load (which never happens for me outside of load testing). It still works, though. No way I can afford another SUA2200, nor do I have room for it.

thesmokingman

Supreme [H]ardness

- Joined

- Nov 22, 2008

- Messages

- 6,617

You should look at the PR1500LCD; it's pretty well priced for a 1500W UPS. The problem with UPS labeling is that they go by VA, not watts.
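The VA vs. watts point comes down to the output power factor: usable watts = VA rating × power factor. A minimal Python sketch, with power factors of 0.65 and 1.0 chosen purely as illustrative assumptions (check each UPS's datasheet for its actual watt rating) and a hypothetical 1100W load:

```python
def ups_watt_capacity(va_rating: float, power_factor: float) -> float:
    """Real power (W) a UPS can supply, given its VA rating and output power factor."""
    return va_rating * power_factor


def fits_on_ups(load_w: float, va_rating: float, power_factor: float) -> bool:
    """True if the load's real power is within the UPS's watt capacity."""
    return load_w <= ups_watt_capacity(va_rating, power_factor)


# Two hypothetical 1500VA units with different output power factors, 1100W load.
for pf in (0.65, 1.0):
    watts = ups_watt_capacity(1500, pf)
    print(f"1500VA at PF {pf}: {watts:.0f} W, 1100 W load fits: {fits_on_ups(1100, 1500, pf)}")
```

Two units with the same "1500" on the box can differ by hundreds of watts of real capacity, which is why a 1500VA unit can overload under an HEDT CPU plus a 2080 Ti.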

Ok, but that's not my problem.

A 1500VA UPS can't even handle my [email protected] with a custom loop and an RTX 2080 Ti. There is no chance it will handle a modern HEDT CPU.
