Do you ever have anything pleasant to say? Always the bringer of the black cloud. And far too many assumptions about the actual involvement of IHVs. Your position at your job gives you, at best, little insight into the vast market, and using it as a prop for your so-called facts is improper in any venue.

When one can distance oneself from petty pissing contests, aka FPS meters, one can then see what DX-whatever can bring and what its limitations are.

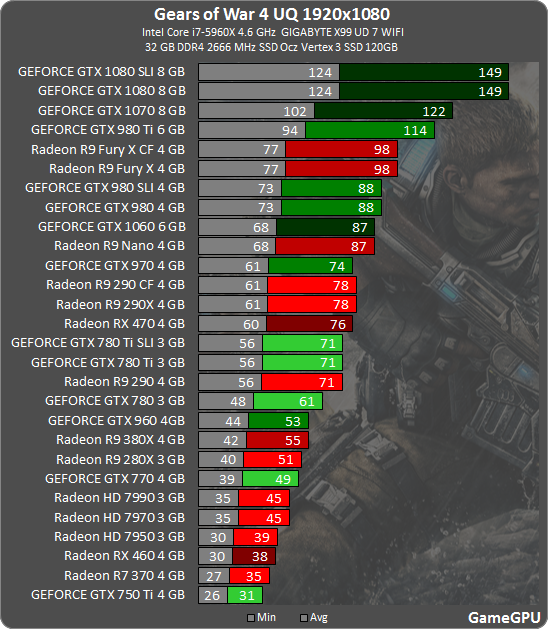

Oddly enough, looking at the graphs, DX12 looks very flat, with the plummets being the negatives and the points that need attention. But given that DX11 is about as good as it is going to get, a reasonable observer can see the absolute benefit that DX12 can bring. It is far too early to be complaining that it hasn't supplanted its predecessor.

So be it. I'm not arguing with a flagwaver.
