elvn


Well, the 2019 LG OLEDs did have BFI options that were removed before they shipped. With the G-Sync firmware update coming later this year, it seems LG is aware of PC gamers.

I'm hopeful the 2020 models will have their own version of ELMB with simultaneous BFI and VRR.

It may seem fast, but LG surprised everyone by having full HDMI 2.1 support in the 2019 models.

The thing is, you will potentially be able to have a PS5 running 120Hz quasi-4K with VRR by the end of next year, playing on the HDMI 2.1 TVs out now and those due out in 2020. PC monitor and GPU roadmaps are way behind, which is very frustrating for those of us who realize what is being marketed to us for now: high-resolution gaming monitors limited to DP 1.4 (and non-HDMI 2.1 GPUs).

NO HDMI 2.1 GPUs for over 60fps on HDMI 2.1 TVs

--------------------------------------------------------------------------

-Having HDMI 2.1 4K VRR on a giant TV and running it at 60fps max off a PC is inadequate for higher-Hz gameplay in terms of motion blur and motion definition/smoothness/pathing.
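To put rough numbers on why a non-HDMI 2.1 output tops out at 4K 60Hz, here is a back-of-the-envelope sketch. It only counts uncompressed active pixels (no blanking intervals, no DSC, no chroma subsampling), so real requirements are somewhat higher than what it prints. The link figures are the nominal rates after line-coding overhead, and the function names are just mine for illustration.

```python
# Approximate usable data rates in Gbit/s after line-coding overhead.
LINKS_GBPS = {
    "HDMI 2.0": 18.0 * 8 / 10,        # 8b/10b coding  -> 14.4
    "DP 1.4 (HBR3)": 32.4 * 8 / 10,   # 8b/10b coding  -> 25.92
    "HDMI 2.1": 48.0 * 16 / 18,       # 16b/18b coding -> ~42.67
}


def video_gbps(width, height, hz, bits_per_channel, channels=3):
    """Uncompressed active-pixel data rate in Gbit/s (blanking ignored)."""
    return width * height * hz * bits_per_channel * channels / 1e9


def links_that_fit(width, height, hz, bits_per_channel):
    """Names of links whose usable rate covers the active-pixel rate."""
    need = video_gbps(width, height, hz, bits_per_channel)
    return [name for name, cap in LINKS_GBPS.items() if cap >= need]


if __name__ == "__main__":
    for hz, bpc in [(60, 8), (120, 8), (120, 10)]:
        need = video_gbps(3840, 2160, hz, bpc)
        fits = ", ".join(links_that_fit(3840, 2160, hz, bpc))
        print(f"4K {hz}Hz {bpc}-bit RGB: {need:.2f} Gbit/s -> {fits}")
```

Under these assumptions 4K 120Hz 10-bit RGB only fits on HDMI 2.1, and 4K 120Hz 8-bit squeezes onto DP 1.4 with very little room left once blanking is added back in.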

BFI problems/trade-offs are severe in the tech's current state

-----------------------------------------------------------------------------------

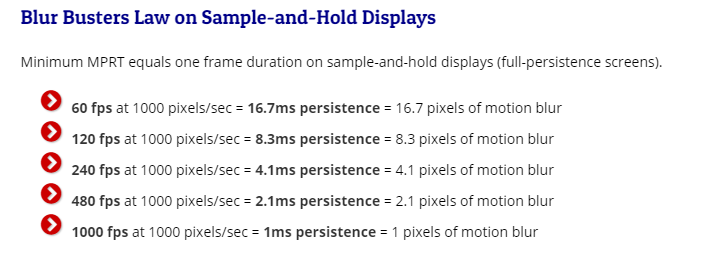

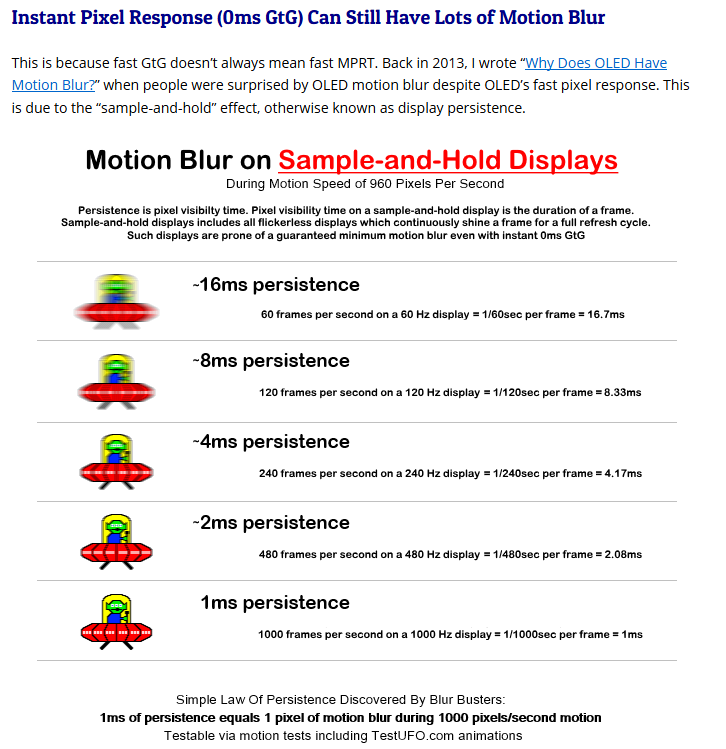

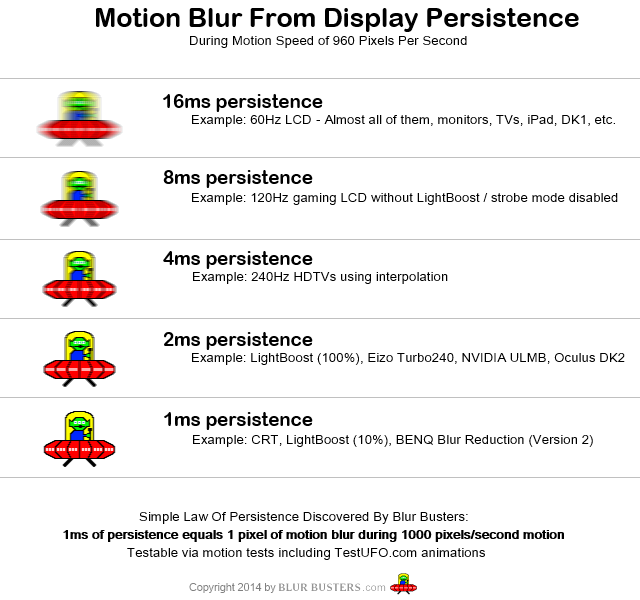

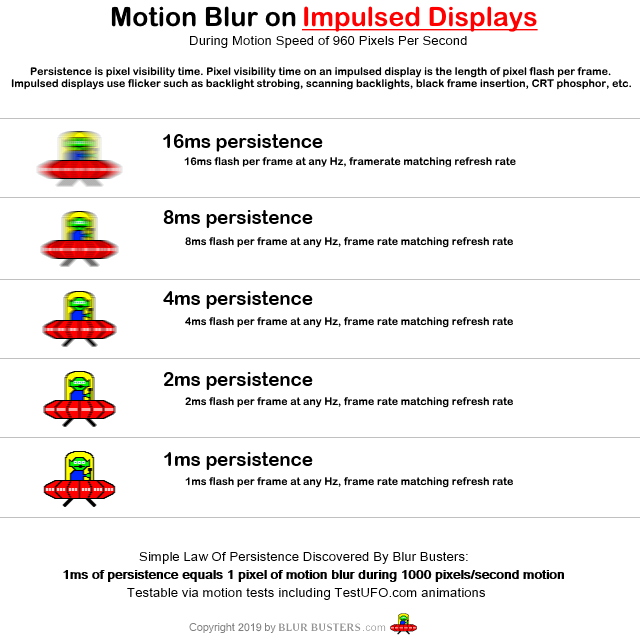

-BFI/strobing has to run at a 120Hz flicker rate or higher, or people will get PWM eyestrain/fatigue. I don't know if this can be done without interpolation to raise the effective frame rate to match the strobing, which in current generations usually produces artifacts and adds a lot of input lag.

-BFI or strobing also needs a much higher starting brightness, otherwise SDR color/brightness will be much dimmer and HDR color volume is thrown out entirely. This is a big problem for all displays, but especially for OLEDs, which are already dimmer in SDR and ABL-capped in HDR. I've read reports that strobing can cut the display's color brightness/overall brightness by 2/3.

-BFI has limited motion definition gains unless you are running higher fps (impossible from a non-HDMI 2.1 GPU to an HDMI 2.1 4K 120Hz TV) or using motion interpolation, which gives a soap opera or VR time warp/space warp effect.
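A quick sketch of the brightness trade-off, assuming perceived brightness simply scales with the fraction of each refresh the pixels are lit (a simplification, real panels and ABL behavior will differ). The nit values are made-up illustration numbers, not measurements of any TV.

```python
def strobed_brightness(nits, on_fraction):
    """Average brightness when the panel is lit for on_fraction of each refresh.

    Assumes a simple linear time-average of light output.
    """
    return nits * on_fraction


def required_peak(target_nits, on_fraction):
    """Peak brightness needed during the lit portion to average target_nits."""
    return target_nits / on_fraction


if __name__ == "__main__":
    # Hypothetical 300-nit SDR panel lit 1/3 of the time (a 2/3 brightness cut).
    print(f"{strobed_brightness(300, 1 / 3):.0f} nits average")
    # Peak needed to keep a 300-nit average at a 25% on-time.
    print(f"{required_peak(300, 0.25):.0f} nits peak")
```

So a 2/3 cut corresponds to the pixels being lit only about a third of the time, and getting back to the original brightness at 25% on-time would take roughly four times the peak output.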

https://forums.blurbusters.com/viewtopic.php?t=5262

This means:

- A non-strobed OLED has identical motion blur to a fast non-strobed TN LCD (a model with excellent overdrive tuning).

- An OLED with a 50%:50% on:off BFI will reduce motion blur by 50% (half original motion blur)

- An OLED with a 25%:75% on:off BFI will reduce motion blur by 75% (quarter original motion blur)

Typically, most OLED BFI is only in 50% granularity (8ms persistence steps), though the new 2019 LG OLEDs can do BFI in 25% granularity at 60Hz and 50% granularity at 120Hz (4ms persistence steps).

Except for the virtual reality OLEDs (Oculus Rift 2ms persistence), no OLEDs currently can match the short pulse length of a strobe backlight just yet, though I'd expect that a 2020 or 2021 LG OLED would thus be able to do so.
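The persistence figures quoted above fall straight out of refresh period times on-fraction. A minimal sketch, where the function names and the 960 px/s test speed are my own picks for illustration:

```python
def persistence_ms(refresh_hz, on_fraction=1.0):
    """How long each frame stays lit, in milliseconds.

    on_fraction=1.0 is plain sample-and-hold (no BFI).
    """
    return 1000.0 / refresh_hz * on_fraction


def blur_px(speed_px_per_s, refresh_hz, on_fraction=1.0):
    """Approximate eye-tracking motion blur width in pixels."""
    return speed_px_per_s * persistence_ms(refresh_hz, on_fraction) / 1000.0


if __name__ == "__main__":
    for hz, on in [(60, 1.0), (60, 0.5), (60, 0.25), (120, 1.0), (120, 0.5)]:
        print(f"{hz}Hz, {on:.0%} on: {persistence_ms(hz, on):.2f} ms, "
              f"{blur_px(960, hz, on):.1f} px blur at 960 px/s")
```

60Hz at 50% on gives about 8.3ms, while 60Hz at 25% and 120Hz at 50% both land at about 4.2ms, matching the 8ms and 4ms steps in the quote.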

Vega from that thread:

I've tested this on my LG C8 OLED versus my 165 Hz 1440p TN. With the OLED set to 1080p 120 Hz, the OLED pixels are so fast (sample and hold in this case) that I can see each individual frame. It doesn't "appear" as smooth as, say, the TN panel set to 1080p/120 Hz, because even a fast TN will "smear" the images together. OLED doesn't blur one frame into the next.

To me, seeing as OLED pixels are so darn fast, if kept sample and hold, the refresh rate needs to be even higher than on LCD to get that silky smooth fast refresh feeling.

https://www.blurbusters.com/faq/motion-blur-reduction/

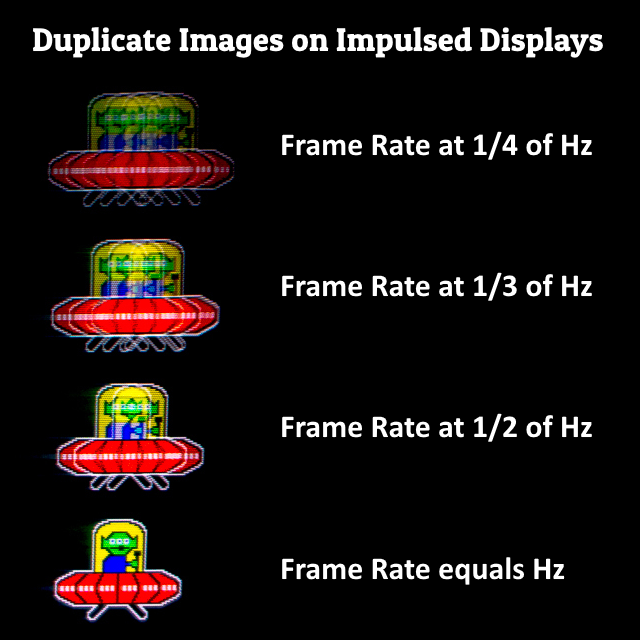

Sometimes blur reduction looks very good, with beautiful CRT-style motion clarity, no microstutters, and no noticeable double images.

Sometimes blur reduction looks very bad, with distracting side effects such as double images (strobe crosstalk), poor colors, a very dim picture, heavy microstutter, and flicker.

Unless you are using some kind of interpolation, you need an extremely high average frame rate to keep your frame rate minimum high enough. Running lower strobe rates to accommodate lower frame rate graphs means worse PWM flicker.

Strobing visually looks highest-quality when you have a consistent frame rate that matches the refresh rate. If your frame rate slows down, you may see dramatic microstuttering during Blur Reduction.

In some cases, it is sometimes favourable to slightly lower the refresh rate (e.g. to 85Hz or 100Hz for ULMB) in order to allow blur reduction to look less microstuttery — by more easily exactly matching frame rate to a lower refresh rate — if your GPU is not powerful enough to do consistent 120fps.

Frame rates lower than the strobe rate will have multiple-image artifacts.

- 30fps at 60Hz has a double image effect. (Just like back in the old CRT days)

- 60fps at 120Hz has a double image effect.

- 30fps at 120Hz has a quadruple image effect.
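The pattern in those three cases is just strobe rate divided by frame rate: each frame gets flashed that many times while your eye keeps tracking. A small sketch, where the function name is mine and the rounding-up for uneven ratios is my own worst-case assumption, not something from the Blur Busters page:

```python
import math


def repeated_images(fps, strobe_hz):
    """Worst-case number of times one frame is flashed at a given strobe rate.

    For even ratios (30fps at 120Hz) this is exact. For uneven ratios the
    count varies from frame to frame, so this rounds up to the worst case.
    """
    if fps >= strobe_hz:
        return 1
    return math.ceil(strobe_hz / fps)


if __name__ == "__main__":
    for fps, hz in [(30, 60), (60, 120), (30, 120), (120, 120)]:
        print(f"{fps}fps at {hz}Hz strobing: {repeated_images(fps, hz)}x image")
```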

Inadequate frame rates at higher strobing Hz:

Even if you had VRR + BFI/strobing to match the strobing to the frame rate, riding a roller coaster of strobe rates down into lower rates and back up as your frame rate varies would be eye-fatiguing, and anything under 120Hz strobing/blanking is bad in the first place imo.

At 4K resolution you'd also be running lower frame rate ranges, with no hope of keeping raw frame rates high enough to avoid sinking below 120Hz matched strobes, unless you were running a very easy-to-render or old game that averages over 150fps.
