Just joined this forum, first time OP here...

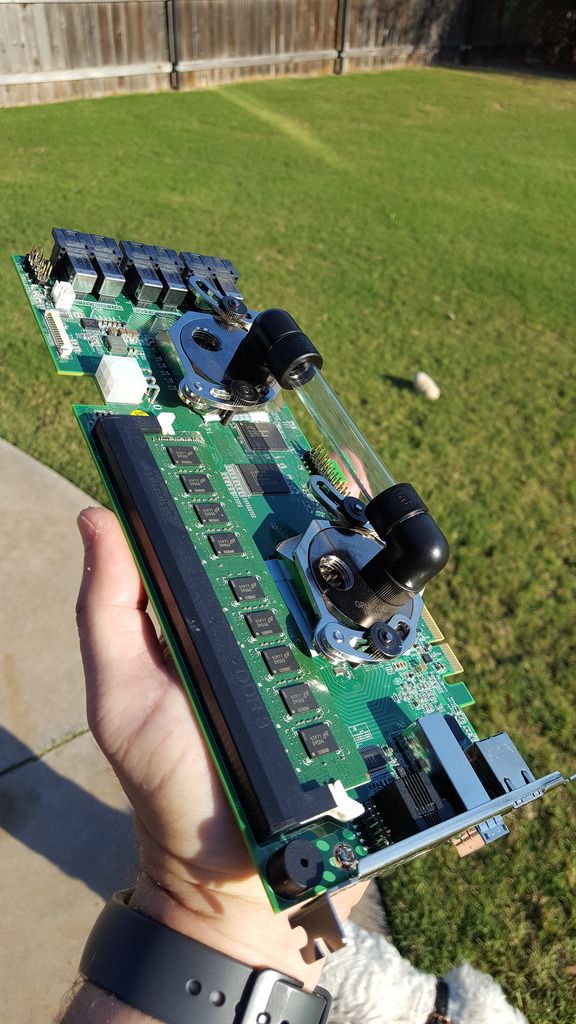

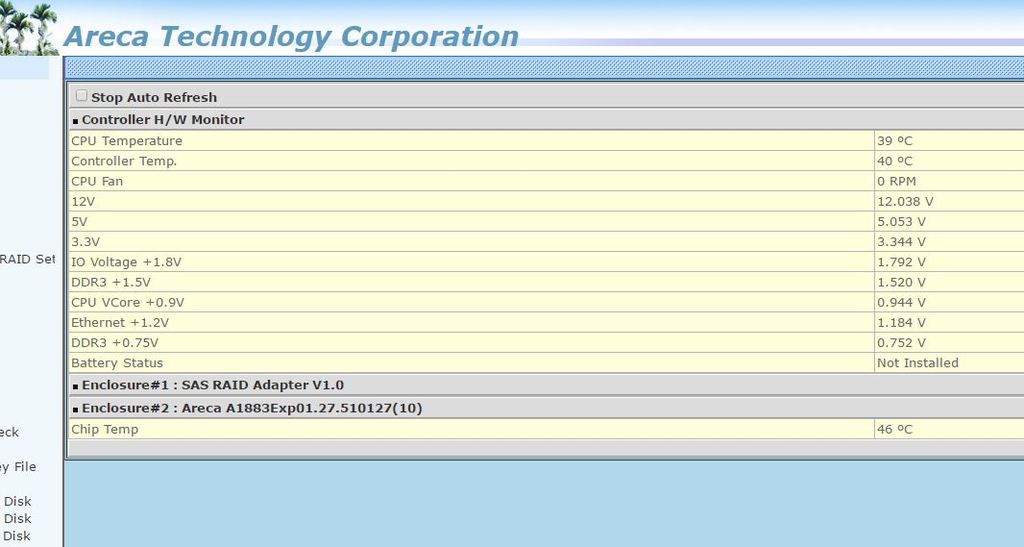

Dell PowerEdge R900 - VMware ESXi 5.5 U3 - Areca 1882X configured as RAID, attached to a Proavio DS316J enclosure with sixteen 3 TB Seagate SV35 drives

1 RaidSet: 14 disks, plus 2 hot spares

1 RAID5, 40 TB VolumeSet on this RaidSet
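As a quick check of the size above: RAID5 gives up one member's worth of space to parity, so 14 members of 3 TB leave 13 x 3 TB = 39 TB, which rounds to the 40 TB quoted. This sketch assumes decimal terabytes (as drive vendors use); tools that report in binary units will show a smaller number. The function name is illustrative only.

```python
TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte, as many OS/hypervisor tools report

def raid5_usable_bytes(members: int, drive_bytes: int) -> int:
    """Usable space of a RAID5 set of equal-size members (illustrative helper).

    RAID5 stores one member's worth of parity, so usable = (n - 1) * size.
    """
    if members < 3:
        raise ValueError("RAID5 needs at least 3 members")
    return (members - 1) * drive_bytes

usable = raid5_usable_bytes(14, 3 * TB)
print(f"{usable / TB:.0f} TB decimal, {usable / TIB:.1f} TiB binary")
```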

7/20: E#2SLOT#09 failed; a hot spare picked up and the rebuild began onto E#2SLOT#11.

The rebuild ran through the night.

7/21: At 6:00 AM this morning, E#2SLOT#11 failed.

Both E#2SLOT#09 and E#2SLOT#11 showed as failed on the enclosure.

I pulled both failed drives, replaced them, and added the replacements as hot spares.

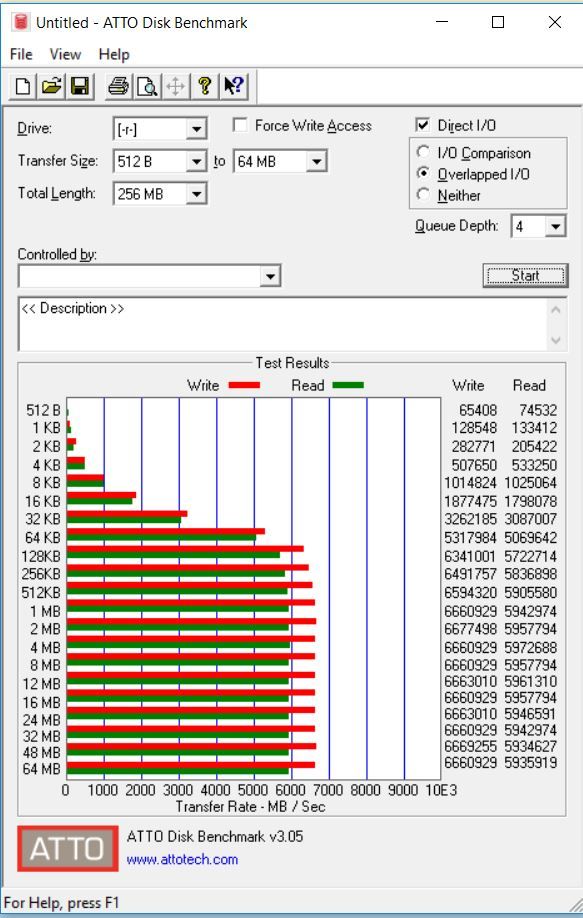

Areca Raid Storage Manager shows the RaidSet as 'Rebuilding', the VolumeSet as 'Failed', 1 slot as failed, 2 hot spares, and 1 free disk. See the pics, please.

In addition, I had an 1883X configured as JBOD in the same R900. A PCIe Fatal Error started flashing on the server. I shut it down and pulled the RAID controller, then rebooted: still the PCIe error. I put in an 1882 as a replacement and pulled the 1883 JBOD card: no more PCIe Fatal Error.

So the questions I have are:

1) Why didn't the second hot spare pick up when the second drive failed?

2) What is the real status of the drives?

3) Am I shooting myself in the foot trying to run 2 PCIe controllers in this server?

Any advice/information is appreciated.

Jeff-

A 40 TB, 14-drive RAID5 array is like playing Russian roulette: it's not a question of if you will lose, just when. Why, oh why, did you create a RAID5 with 2 hot spares instead of a RAID6 with 1 hot spare? Can you please post the complete log from the card? Unfortunately, it sounds like you lost 2 drives in an array that could only survive losing 1, which is why the volume is failed, but the log will tell all. Do you have the original RaidSet and VolumeSet specifics? Do you know which drives came out of which slots? Also, even though the SV series drives support ERC, I have not seen great results with them in arrays.
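To put rough numbers on the RAID5 versus RAID6 point: in 16 bays, a 14-drive RAID5 plus 2 hot spares and a 15-drive RAID6 plus 1 hot spare give the same 13 drives of usable space, but only RAID6 survives a second member failure mid-rebuild. The rebuild estimate assumes 3 TB drives and an unrecoverable read error (URE) rate of 1 per 10^14 bits, a common spec-sheet figure that should be checked against the SV35 datasheet. Spec rates are upper bounds, so treat the result as pessimistic. The helper names are illustrative, not an Areca tool.

```python
import math

TB = 10**12  # decimal terabyte

def layout(level: int, bays: int, hot_spares: int, drive_tb: int = 3) -> dict:
    """Summarize one array filling the remaining bays (illustrative helper)."""
    parity = {5: 1, 6: 2}[level]  # parity drives' worth of capacity
    members = bays - hot_spares
    return {
        "members": members,
        "usable_tb": (members - parity) * drive_tb,
        "tolerates": parity,  # concurrent member failures before data loss
    }

def p_clean_rebuild(bytes_read: float, ure_per_bit: float) -> float:
    """Chance of reading every bit with no URE, modelling errors as a
    Poisson process at a fixed per-bit rate (a simplifying assumption)."""
    expected_errors = bytes_read * 8 * ure_per_bit
    return math.exp(-expected_errors)

r5 = layout(5, bays=16, hot_spares=2)
r6 = layout(6, bays=16, hot_spares=1)
print("RAID5 + 2 HS:", r5)
print("RAID6 + 1 HS:", r6)

# A degraded RAID5 rebuild must read every surviving member in full, with no
# parity left to correct a bad read. A single-degraded RAID6 still has one
# parity to fall back on.
surviving_bytes = (r5["members"] - 1) * 3 * TB
ASSUMED_URE_PER_BIT = 1e-14  # assumption: typical spec-sheet value
print(f"P(no URE in RAID5 rebuild) ~ "
      f"{p_clean_rebuild(surviving_bytes, ASSUMED_URE_PER_BIT):.1%}")
```

Under these assumptions the 39 TB read works out to about 3.1 expected UREs, or roughly a 4% chance of a clean RAID5 rebuild on paper. Real drives usually do better than their spec sheets, but the comparison shows why the second parity drive is worth more than the second hot spare.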

![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)