Hey all,

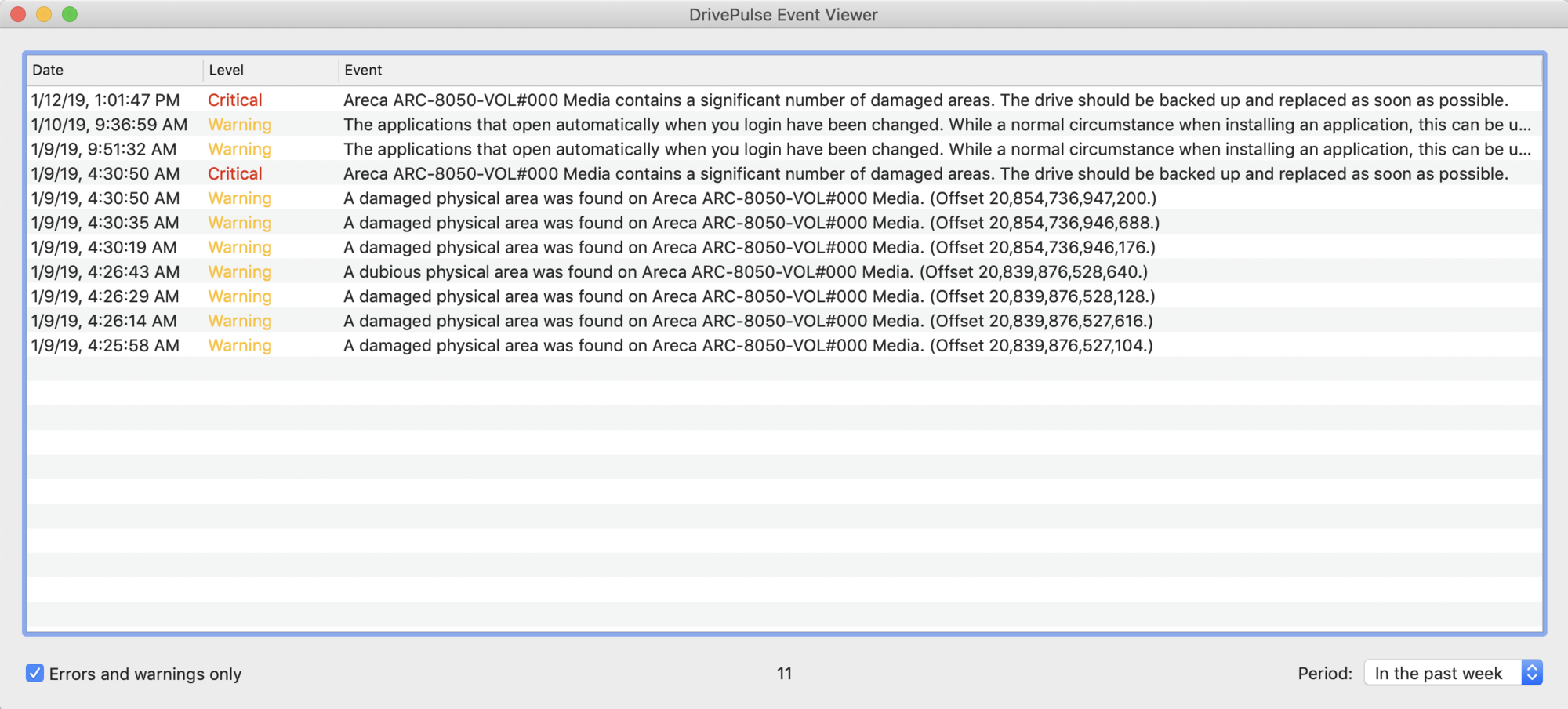

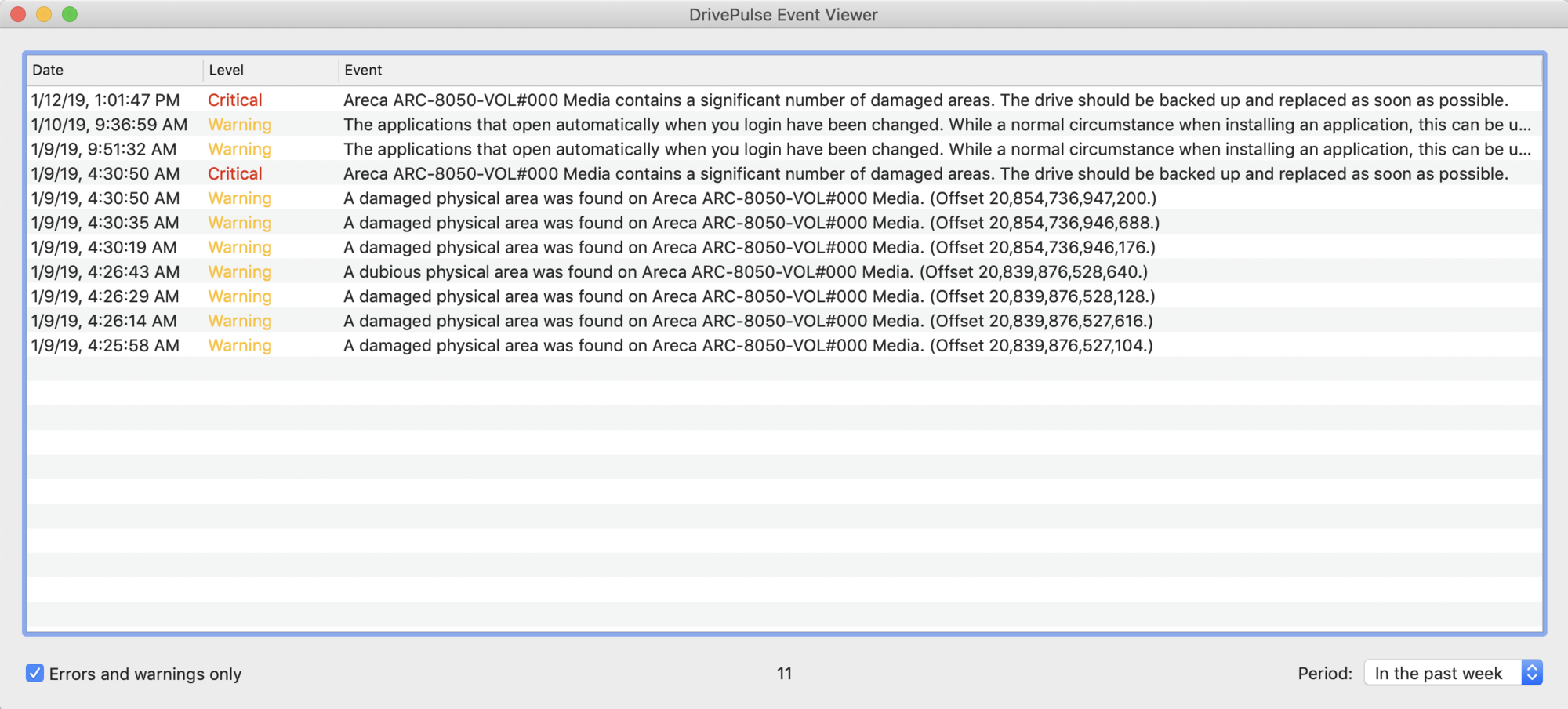

I've got an Areca ARC-8050T 6-bay (less than 6 months old) with 5 Seagate IronWolf drives in a RAID 5 array. It's been performing great. However, I've been running Drive Genius from Prosoft, which monitors drive health in the background. It recently started giving error messages showing bad blocks on the ARC (see error log below), including a critical error message recommending backup and drive replacement.

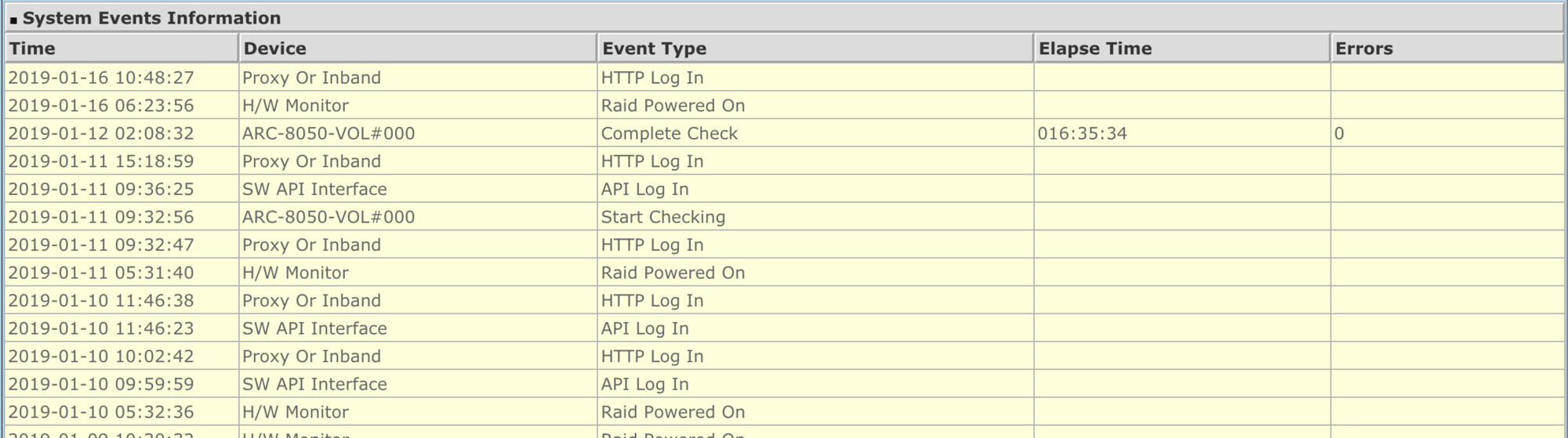

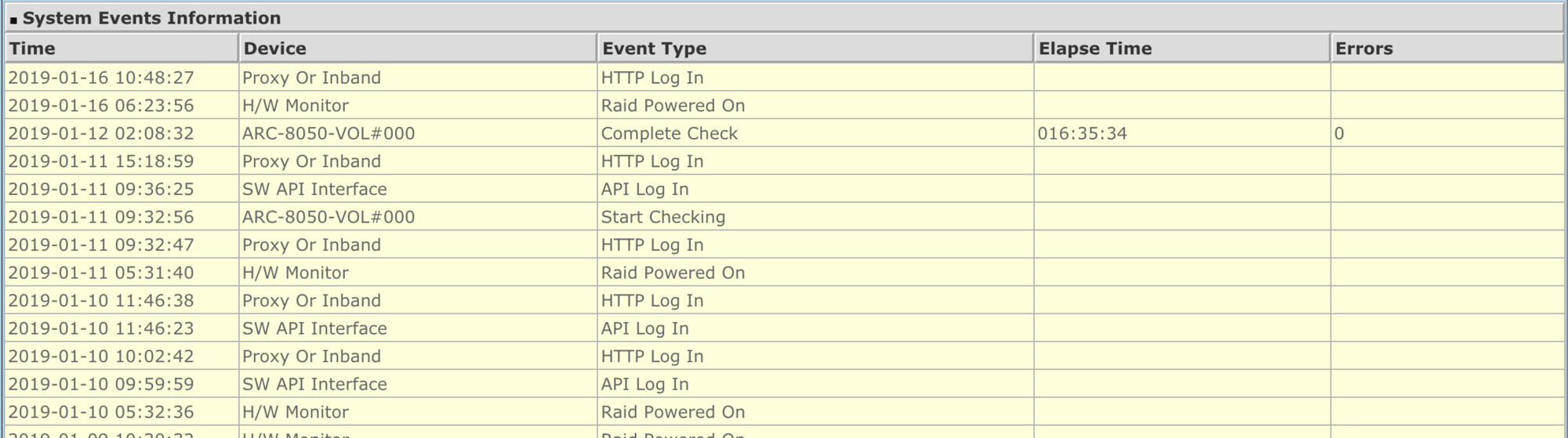

I got scared and decided to run a full consistency check on the array from the Areca RAID manager console, with the 'scrub bad blocks' and 'recompute parity' options unchecked. After that check completed, 0 errors showed up (see event log below).

Should I still be worried? Would Drive Genius be showing errors that the Areca check is somehow missing? Should I trust the Areca results and move on? Or am I misinterpreting these conflicting results somehow?

This is the first Areca array I've used and I'm fairly inexperienced in troubleshooting RAID arrays in general, so any input from more experienced folks would be much appreciated! Let me know if I can provide any more info.

I did order a spare matching drive I could add to the array, either as a hot spare or by converting the array to RAID 6. My original plan had been to expand the size of the RAID 5 at some point, but this experience is making me think more fail-safe measures may be a smarter route!

