That was very interesting and unique. I just have not kept up with Apple things. May get myself a Mini in the future to play around with.

What you’re saying is true, at least about what the Mini is designed for. However, it has long found a place in office, educational, and server environments.

That “image” of all of those Minis hanging out in the background? That’s not for show. The Mini has been used as a "low cost" way to build clusters or as a low-cost dedicated server. There's even a company that specializes in deploying them, though I would imagine most people in the IT space would simply do all of this work themselves.

https://www.macminivault.com/services/custom/


Apple launched the M2 Pro and the M2 Max

- Thread starter Lakados


UnknownSouljer

[H]F Junkie

- Joined: Sep 24, 2001
- Messages: 9,041

Great. And it will not have the throughput of an M2. That base M2 Mini plus 16GB of RAM for $800 will outperform that 12-core NUC regardless of how much RAM or how big an SSD you stick inside of it. And no matter what prices you assign to the SSD and NVMe drives you put in there, it WILL BE more expensive than $800.

You had a point? Mine was that nobody should sell computers with 8GB of RAM and a 256GB SSD in 2023, especially ones where these components are soldered to the motherboard. The only reason anyone does it is so their product lineup doesn't look as overpriced as it really is. The moment you start adding more RAM and a larger SSD, that MacBook M2 Pro will exceed $2k, while my older laptop can easily take more RAM and a larger SSD for $150.

I've been asking this for years.

Then you're ignorant of even the most basic aspects of GPU architecture. This would introduce so much latency that you'd likely give up 50% or more of your performance. Frame times would become a joke. You want serviceability literally at the cost of everything else. Why even use modern computers then?

That's what Apple has been doing.

Sounds like you're ignorant about Apple's architecture as well. The GPU/CPU and RAM are all on a single CPU package. They are categorically NOT on the motherboard. This is again why Apple inherently has speed advantages that cannot be replicated any other way. The M2's GPU RAM is also physically a part of the SoC.

If you're going to make arguments, make factual ones.

Thanks to Apple making it hard to find parts, these machines can be disassembled and their parts sold for an even bigger profit. Even the motherboard, which is locked, can be used as a donor board to get replacement components for repair shops. This is why I don't password lock my phone: if someone finds it, I don't want them taking it apart because I locked it. I'd rather they keep it on and running so I can track it down. It's easier for a thief to sell the whole device, but if they have to, they will sell it for parts or in parts. Genuine Apple parts are hard to come by.

Sell those to whom, exactly? There is no way anyone could profit more from selling an iPhone for parts than from selling it whole. The number of people with the technical skill and knowledge to desolder the SSD chips and the ROM chips used by the Secure Enclave, replace them, still get the OS to accept the new ROM and SSD chips, and then flip the phone is maybe half a dozen in the US at most. No repair shop in the US is capable of doing this. They're all screen and battery replacement jockeys. If they had that level of skill, they should be doing basically anything else with their time other than repairing phones.

On a MacBook the parts are slightly more extensive, but the exact same problems would be present as on the phones. Because of the Secure Enclave, even if you did desolder the SSD and solder in another one, you still wouldn't get the machine to boot because of the T2 chip. Where do you find the ROM for the T2 chip? And even if you do, how do you consistently get the machine to boot after replacing the SSD and the Secure Enclave ROM (I'm pretty sure that if you did this, the machine would simply not boot, as it would recognize the change)? So the most expensive part of the machine is a brick. You can sell what, the top case, bottom case, fans, speakers, and display? Those will get you "some money", but good luck showing me that you can make any level of "real" money from this, especially considering everything is serialized. In other words, the reward is nowhere near the risk of getting caught, especially considering how specialized the work would have to be and how little you could get from any of this.

You've taken a $2000 laptop and are able to sell the parts for maybe $500, with a majority of that being selling the display, IF you can find a buyer.

On an iPhone it would be the same. You take a $1200 phone and you're basically only able to sell the screen. Apple does a full screen replacement not under warranty for $350? So maybe you can get $200 for it?

You're just making stuff up. You haven't thought any of this through. I'd love you to show me all the repair shops that are just collecting this hardware to do repairs they aren't capable of doing though.

If I wanted speed I wouldn't get a NUC. If I'm doing video editing or any task that needs lots of storage, I'll build a desktop PC. Same goes for the Mac Mini, except it's a NUC you can't upgrade. The Mac Mini and NUCs are devices that help introduce people to this hardware and make a great little server or HTPC.

No, that's the way YOU would do things. There are tons of people in Resolve groups who are using Minis as a low-cost way to do editing. You know why Apple advertised the Mini as a way to do editing? Because, *surprise*, people use it to do editing. To my knowledge it would be impossible to build a new PC video editing machine for $800 ($599 base cost + $200 RAM upgrade) that performs as well as the M2 Mac Mini. Even if you bought every part used it would be a tremendous stretch, and I'm still reasonably certain the M2 Mini would come out on top. You're welcome to try, though.

Just because use cases are limited for you doesn't mean they are for anyone else. The fact that organizations have used them as an easy and quick way to create server farms, macOS data centers, and virtual computing setups speaks directly to that.

Steve Jobs may have intended the Mac Mini as a gateway system, but that isn't in point of fact the only thing that it is.

I like this guy. If you were more like him, we'd butt heads less. First off, he states himself that the switch to PC wasn't easy. He says Apple's ecosystem and apps are much better, but he needed his machine to do specific things. So he made the hard decision to switch.

Cool deal. Very reasonable. There are still plenty of ways he could've figured out live streaming; he acknowledges that too. He didn't want to figure it out? Okay! This is why there is choice in the market. But if this is supposed to prove some point of yours, I don't really see how it does.

Because even this dude says it's tradeoffs, which, for the millionth time, is what it is. Neither PC nor Mac is inherently better than the other. It comes down to specifically what you want to do. You want to run 10-year-old 32-bit apps and go on and on about it endlessly: a vast majority of the professional market does not. This guy does not. 64-bit ARM was a non-issue. Even his discussion of the Goldilocks problem, as he calls it, wasn't enough of a problem to put him off Apple. In the end it was an entirely different issue.

Lots of people use old software. The only reason Apple doesn't is because their old apps are 32-bit x86 and emulating that was hard or buggy or both.

It's always a sign that you don't have a point when you start posting memes, which you do almost immediately. But to address your "point", or lack thereof: Apple's 32-bit emulation worked perfectly. They intentionally deprecated it over the course of 3 years exactly because of the same problems I've told you about many times before. If you don't deprecate things, then coders will never move to newer, faster, more optimized code.

If you want to see just how good macOS 32-bit emulation is, you could use any Intel-based Mac and keep running Mojave on it. That's what people do if they "must" have 32-bit software. However, again, this is a non-issue, because people don't need it. If you're wondering why 32-bit isn't on ARM, it's obviously because 32-bit was transitioned out long before Apple started making ARM-only machines.

You always assign problems to things that the general computing base doesn't have, then make up a reason why Apple doesn't do things (a strawman), and then move on. I don't really care if you ever buy or use a Mac. I don't care if you hate Apple. I just take issue with the large amounts of FUD that you put out there as if it's fact, when literally everything you're talking about is preferences, and preferences are all based around tradeoffs. There are legitimate architectural benefits to having the RAM on the same package as the processor. There are legitimate reasons to deprecate older code. You don't care about and don't appreciate those things? That's fine; again, it's the FUD that I take issue with.


Could be true in some markets, but in my experience, really not (which is why Linux and Microsoft have to support them).

In 2017:

The survey, announced on Monday, was carried out by Spiceworks, a provider of Web site learning resources for IT pros as well as software tools. It was conducted in September across various organizational types and sizes in the United States and Canada. Per that survey, Office 2010 was the most used edition at 83 percent, followed by Office 2007 (68 percent) and Office 2003 (46 percent).

I am currently porting our 32-bit C++11 professional program to 64-bit C++17 (it uses a very expensive 32-bit licensing system from around 2010).

Just do the thought experiment: if the next Linux or Windows upgrade made anything from 2012 or older stop working, which professional markets or industries would not notice the change?

The extremely dynamic, relatively new YouTube-video-maker types, maybe. But mines, factories, law firms, pharma, dentists, you name it, have more niche programs, often semi-homemade, and an aging, non-tech-savvy workforce that does not like change and will never do it themselves. The "my Office 2007 still works" crowd and the "my accounting software needs Windows XP on that old laptop" crowd do exist, and in my limited experience they are quite common.

That said, it should be obvious that there is a giant crowd that does not care, and for whom the notion that they should care makes no sense.

All things being equal, being able to run more things out of the box is obviously better, but design decisions made to support older stuff obviously do not leave all things equal.

I see advantages to both sides, like you suggested. Compatibility is obviously very important in fields where companies can't always afford to upgrade software or replace computers very often. But Apple's approach also means that development moves forward, and it prevents some of the pitfalls that have hurt not just Microsoft's sales, but the industry as a whole.


The classic example is WannaCry. That malware wreaked havoc precisely because many companies and institutions were still running Windows XP; in some cases, they had extended service contracts to keep support going beyond the 2014 cutoff. They'd been conditioned to think they could expect to run the same software for seemingly all eternity. Goodness knows there are real examples of companies clinging to Windows 7 to use XP mode to run NT apps released in the 1990s. At that point, legacy support crosses over from "helpful" to "holding things back."

To bring this back to the core topic: I really, really don't think the Mac mini's intended audiences care that it's a 64-bit ARM computer that won't run apps which haven't been updated in years. They're either everyday customers who run widely available apps or pros who rarely if ever use ancient software. For that matter, I don't think someone buying an Intel NUC is fretting over whether or not they can run vintage code. The Mac mini M2 looks like a fine machine for what it's meant to do; I really wish Apple would bump up the RAM or storage, but it at least lowered the price of entry.

UnknownSouljer

[H]F Junkie


Programs like Office, though, could easily be updated. It's the cost of licensing that is the issue there, not necessarily app compatibility.


Microsoft, to their credit, has worked pretty hard at bringing the cost of licenses down and giving the option to go to a subscription model to get more of the world on the latest app(s). They're a victim of their own success: they can't force or drag anyone to upgrade, and supporting all these old versions of apps isn't viable. These are all problems Apple doesn't have, specifically because they deprecate old code. That, and they give the OS away for free, and they now also give their Office-equivalent apps away for free. Between that and things like Google's office apps, I'm sort of surprised this issue hasn't been solved for general office workers.

For the general office world, they would likely not notice.

I'll put it like this: Apple made a bunch of forward-thinking moves, eliminating things "in advance" because they had usage statistics and realized supporting certain things wasn't worth it anymore. Some examples are floppy disks, DVD-ROM, Zip drives, FireWire, etc. Every time a port or a media type was eliminated there was a big, noisy uproar, but basically every time we've found out they were right. I haven't wanted or needed to use optical media in 10 years. It was just attachment that made me want to keep disc drives I never used.

Because Apple makes software changes relatively slowly and recognizes the time developers need to comply, end users rarely notice issues. In recent years there have been two major transitions: one, as DukenukemX brings up, the move away from 32-bit code libraries; the other, the move to ARM. I actually think the move away from 32-bit caused far more problems than the move to ARM. It's been 3 years since Mojave. I'm sure there are some people who are still upset about the lack of 32-bit support, but I haven't heard of any mass exodus from Apple because of it. In fact, statistics since the switch to ARM show Macs actually selling in greater volume, likely due to just how good the MBA (MacBook Air) is and also how cheap the Mac Mini is.
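For readers wondering what "32-bit" means at the file level here: macOS executables use the Mach-O format, whose first four bytes are a magic number that distinguishes 32-bit, 64-bit, and universal ("fat") binaries. The Python sketch below checks only that header; the function names are hypothetical helpers, not part of any Apple tool, and in practice the `file` or `lipo -info` commands report the same thing with more detail.

```python
import struct

# Mach-O magic numbers, as defined in Apple's <mach-o/loader.h> and <mach-o/fat.h>.
MH_MAGIC = 0xFEEDFACE     # thin 32-bit Mach-O
MH_MAGIC_64 = 0xFEEDFACF  # thin 64-bit Mach-O
FAT_MAGIC = 0xCAFEBABE    # universal ("fat") header, always stored big-endian


def classify_macho(header: bytes) -> str:
    """Classify a Mach-O file from its first four bytes (hypothetical helper)."""
    if len(header) < 4:
        return "unknown"
    (big,) = struct.unpack(">I", header[:4])
    (little,) = struct.unpack("<I", header[:4])
    if big == FAT_MAGIC:
        # A universal binary may hold 32-bit slices, 64-bit slices, or both;
        # telling them apart requires walking the fat_arch entries that follow.
        return "universal"
    # A thin Mach-O is stored in the target CPU's byte order, so try both.
    for magic in (little, big):
        if magic == MH_MAGIC_64:
            return "64-bit"
        if magic == MH_MAGIC:
            return "32-bit"
    return "unknown"


def classify_file(path: str) -> str:
    """Read the first four bytes of a file on disk and classify them."""
    with open(path, "rb") as f:
        return classify_macho(f.read(4))
```

One caveat: `0xCAFEBABE` is also the Java class-file magic, so a "universal" result is only meaningful for files already known to be Mach-O.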

Correct me if I'm wrong here, but the big issue with all of the industries you just named is mostly keeping specific old machines working, no? I can imagine something like an old X-ray machine, which costs a lot and doesn't get upgraded, being the issue. The problem there is capital investment vs. ROI (and there likely wouldn't be any return).

I quit my management position at UPS back in 2009, but even at that time they used incredibly outdated software for certain things. For them, I kind of get it, as the cost of changing the infrastructure was the problem. The inbound/outbound software was all still DOS-based, and the scanners ran Windows CE. Even in 2009, everything was at minimum 10 years out of date. UPS Ontario did an expansion fairly recently (by recent, I mean maybe 7-8 years ago) that quadrupled throughput (the hub literally doubled in size by area, and it was already the size of maybe 3 Costcos put together). Part of that expansion was moving to a more modern system to stay in line with the flagship hub in Louisville. I imagine they finally bit the bullet and transitioned. They would've had to. At least I hope so; that equipment was terrible to work on.

Although it likely cost UPS tens of millions to update everything in that hub, there would be tangible benefits from doing so: namely, better scanning and tracking of packages, and infrastructure that can show where every package, truck, and plane is in real time, things the system we were using when I was there wasn't capable of. If I were curious enough, there are still people I know who work there that I could talk to, but I honestly never want to think about the shipping industry again.

Right. Basically yes and yes. If we could live in a world in which we could be backwards compatible with everything forever with zero consequences, I'm all for it. But given the tradeoffs, most of the world doesn't need anything older than 10 years, and I would rather have a code base that's up to date and highly optimized. I want my modern tasks to go as quickly as possible.

Basically this. After working in environments where upper management doesn't want to replace any of this old software, ever, I'm gladly on the other side that wants to trash it all as quickly as possible. The only problem on the Windows side is that Microsoft is now doing things that I would say are actively anti-consumer. I absolutely loathe the inclusion of spyware, and on multiple occasions their forced auto-updates have broken installs, leading to downtime and huge headaches. Windows 10 and now Windows 11 have kind of put the final nail in the coffin for me; I never want to go back to Windows. Perhaps if Linux gets polished enough (likely, ironically, through Valve), that could become an option. Until then I'm happy to "pay more" to get bulletproof stability and support that I never have to manage, rather than the mess I've personally had to deal with on the PC side.


On this we agree. Because Apple does have us over a barrel in terms of internal upgrades, I do wish it were less expensive. So, DukenukemX, if you wanted to know whether I'm perfectly happy with everything and whether I can be critical of Apple, there you go.

Obviously this is part of their pricing strategy, and they have the smartest minds working out how to extract the most dollars. Even so, for people doing professional work, it would be hard to build a PC that performs as well as the new Mini for $1200 or less, even though buying those RAM/SSD upgrades in the Mini feels bad. The PC will in theory be upgradeable (but that has been shown to matter less and less). The Mac Mini, though, will take up basically no space, be dead silent, and use very little power. Tradeoffs.

There is the obvious aspect of old machines on serial/parallel buses, but there is also the case of running old programs on new machines.

For example, programs that still have very old parts in them (like code from 1994, when it wasn't yet certain that OpenGL would win): CAD software that takes a scan of a mouth and generates models of crowns and whatnot.

You may want to run that on a relatively recent machine for performance reasons (and because such machines are easy to find), without security issues, and have it plugged into your network to easily receive scans and send models out. But you don't necessarily mind if the program itself uses old stuff, and it is niche enough that redoing a lot of it because decades of Wintel 32-bit get flushed out would be quite the hassle.

Office is really good at accepting old files. Yes, it is usually just cost, and doing as little as possible, that gave Office 97-2007 such a long life.

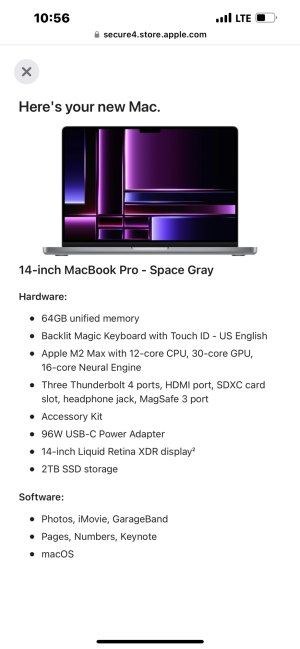

This thread has reminded me why I just bought a MacBook Pro for $4,220 out the door. I'm so glad I work in the oil and gas industry: making $400 a day, working six weeks on and three off, I can basically pay for this machine with one paycheck. I can’t wait to get off on the eighth and get my MacBook on the 13th. Windows 10 is really starting to suck, and 11 is being jammed down my throat. I've just had enough of it.

I'm going to keep my rig for gaming and my 3D renders, but I’m gonna start moving everything else to the Mac.


The most alluring part of the MacBook Pro update to me: that you don't have to worry about your battery life taking as serious a hit with the 14-inch M2 Max as you did with the M1 Max. It wouldn't be the system for me (the Max is overkill), but it's now a question of how much you can afford, not whether you're willing to sacrifice longevity for performance.

UnknownSouljer

[H]F Junkie


Is the SSD soldered onto the mobo for the Mac mini again?

Short answer: yes.

Long answer: it’s literally just solid-state storage chips on the board. All I/O functions are handled by the ARM chip; there is no separate SSD controller (e.g., Phison). This is specifically how and why Apple’s I/O is as fast as it is while doing real-time encryption and decryption.

Going forward there will never be removable storage on any of these Apple devices again. I wouldn’t have any other expectation. The only theoretical exception is the upcoming ARM Mac Pro. Even in that machine, however, I would expect the main drive to be chips like in the current Mac Pro, without a controller etc. It's technically upgradeable, but only with proprietary SSDs, because it’s just solid-state chips on a PCB controlled by the T2 chip rather than a separate SSD controller.

Apple currently installs the SSD memory chips butted right up against the CPU to cut the traces down to the absolute minimum and keep latency as low as mechanically possible. While that isn't super impactful, it does improve performance slightly and allows for better worst-case behavior when the device has to swap system memory out to storage because it has run out, which in the base configuration happens more than many would like to think, especially if they install Chrome.


Full teardown video for those interested:

It is a very clean-looking board, very simple, beautiful really.

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,937

I'm convinced that the only reason people buy Macs is to say they bought one. Then they secretly use a PC.


Let's be honest here: the reason Apple solders anything to the board is to save money, just like every other manufacturer. Apple put half as many SSD chips in their M2 MacBooks, which cut the performance in half, so Apple doesn't do it for performance when they could have easily put in a second chip. You could make the argument that the RAM being closer lowers latency, but there's a reason desktop PCs use DDR5 for the CPU and GDDR6 for GPUs: each is optimal for its application. The use of LPDDR5 is an attempt to achieve the bandwidth of a GPU while offering comparable latency for the CPU, at the cost of never being able to upgrade the RAM. It also consumes less power being closer to the SoC, but ultimately this is done to cut costs, not to enhance performance.
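The chip-count argument rests on simple arithmetic: an SSD controller stripes data across its NAND packages and reads them in parallel, so if per-package bandwidth is the bottleneck, halving the package count roughly halves sequential throughput. A minimal Python sketch under that idealized assumption (the function name is hypothetical, and the 1,500 MB/s per-package figure is a placeholder, not a measured or published number):

```python
def striped_read_throughput(nand_packages: int, per_package_mb_s: float) -> float:
    """Ideal sequential throughput when reads are striped evenly across NAND packages.

    Assumes per-package bandwidth is the only bottleneck; real drives only
    approximate this, since controller, bus, and firmware limits also apply.
    """
    if nand_packages < 1:
        raise ValueError("need at least one NAND package")
    return nand_packages * per_package_mb_s


# Hypothetical per-package figure chosen for illustration only.
PER_PACKAGE_MB_S = 1500.0

two_packages = striped_read_throughput(2, PER_PACKAGE_MB_S)
one_package = striped_read_throughput(1, PER_PACKAGE_MB_S)
drop = 1 - one_package / two_packages

print(f"2 packages: {two_packages:.0f} MB/s")
print(f"1 package:  {one_package:.0f} MB/s")
print(f"ideal drop: {drop:.0%}")
```

Real drives scale less cleanly than this, since the controller, interface, and firmware also cap throughput, which is one reason a measured drop (such as the 40% figure cited in this thread) can be smaller than the ideal 50%.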

DukenukemX

Supreme [H]ardness


Jesus, even Apple's 512GB MacBook Pro with the M2 Pro will be missing some SSD chips, just like the regular M2 with the 256GB model. That's a 40% performance decrease. Why do you people buy this junk?

https://arstechnica.com/gadgets/202...have-slower-ssd-performance-than-m1-versions/


It cuts it in half, but nobody using those MBPs would notice. It looks bad on a benchmark for sure, but given how they are actually used, the CPU and encoders are going to reach their limits long before they max out the storage configuration, and the people who need to worry about storage speeds already know what they need, because they aren't buying the base model. The base model MBP is for coffee shop writers and students who want to show off.

It's fast enough and the buyers don't care.

Jesus, even Apple's 512GB MacBook Pro with the M2 Pro will be missing some SSD chips, just like the regular M2 with the 256GB model. That's a 40% performance decrease. Why do you people buy this junk?

https://arstechnica.com/gadgets/202...have-slower-ssd-performance-than-m1-versions/


Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

Because it works better with my iPhone.

Jesus, even Apple's 512GB MacBook Pro with the M2 Pro will be missing some SSD chips, just like the regular M2 with the 256GB model. That's a 40% performance decrease. Why do you people buy this junk?

https://arstechnica.com/gadgets/202...have-slower-ssd-performance-than-m1-versions/


My only two active laptops are Macs: a 2015-era 13" MBP with an i5 and 8GB (slow, but plays movies and YouTube just fine), and a 2021 M1 Pro 14", which is one of the best laptops I've ever used. Why wouldn't I? It does everything I need it to do just fine. When I'm on the road it's fine for everything I could ever need, and when I'm at home and need more, I have VMs on x86 and a couple of monster workstations. An Intel-based laptop would do nothing better and many things worse than the Mac does (I hate running Linux on laptops; it still sucks). I sold my last x86 laptop (Alienware 13) because I didn't have a purpose for it.

I'm convinced that the only reason people buy Macs is to say they bought one. They then secretly use a PC.

It solves the problem within the bounds of cost and performance.

Let's be honest here, the reason Apple solders anything to the board is to save money, just like all other manufacturers.

And so far, buyers haven't cared that much?

Apple put half as many SSD chips in their M2 MacBooks, which cut the performance in half, so Apple doesn't do it for performance when they could have easily put in a second chip.

Different apps, different uses.

You could make the argument for the RAM being closer for lower latency, but there's a reason why desktop PCs use DDR5 for the CPU and GDDR6 for GPUs: that's optimal for their applications.

No one really cares.

The use of LPDDR5 is trying to achieve the bandwidth of a GPU while offering comparable latency for the CPU, at the sacrifice of never being able to upgrade the RAM. It also consumes less power being closer to the SoC, but ultimately this is done to cut costs, not to enhance performance.

You get frustrated that folks have a different use case than you. When the laptop gets old or too slow, I'll throw it out and buy another. I'm not going to bother upgrading a 3-4 year old laptop - a new one will be a heck of a lot better anyway.

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

Don’t forget about OpenCore to keep them on a supported OS.

My only two active laptops are Macs: a 2015-era 13" MBP with an i5 and 8GB (slow, but plays movies and YouTube just fine), and a 2021 M1 Pro 14", which is one of the best laptops I've ever used. Why wouldn't I? It does everything I need it to do just fine. When I'm on the road it's fine for everything I could ever need, and when I'm at home and need more, I have VMs on x86 and a couple of monster workstations. An Intel-based laptop would do nothing better and many things worse than the Mac does (I hate running Linux on laptops; it still sucks). I sold my last x86 laptop (Alienware 13) because I didn't have a purpose for it.

It solves the problem within the bounds of cost and performance.

And so far, buyers haven't cared that much?

Different apps, different uses

No one really cares.

You get frustrated that folks have a different use case than you. When the laptop gets old or too slow, I'll throw it out and buy another. I'm not going to bother upgrading a 3-4 year old laptop - a new one will be a heck of a lot better anyway.

Exactly. In what realistic use case would the difference be meaningful? Nobody is gaming on an MBP, so load times there aren't a factor; for video or audio encoding, the CPU and accelerators are going to reach their limit before the storage does; and day to day, it's still so fast that things happen "instantly". Saving a document still happens in a fraction of a second, just not a fraction of a fraction of a second.

It's fast enough and the buyers don't care.

I don't even bother, to be honest. The 2015 normally sits in the gym to play workout videos, and occasionally moves if I'm doing work in the DC closet, to play TV shows while I'm running cable. It has almost no other use. The new one is for work, gets carried when I head to a customer site, and is always kept up to date by corporate anyway.

Don’t forget about OpenCore to keep them on a supported OS.

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,449

Let's be honest here, the reason Apple solders anything to the board is to save money, just like all other manufacturers. Apple put half as many SSD chips in their M2 MacBooks, which cut the performance in half, so Apple doesn't do it for performance when they could have easily put in a second chip. You could make the argument for the RAM being closer for lower latency, but there's a reason why desktop PCs use DDR5 for the CPU and GDDR6 for GPUs: that's optimal for their applications. The use of LPDDR5 is trying to achieve the bandwidth of a GPU while offering comparable latency for the CPU, at the sacrifice of never being able to upgrade the RAM. It also consumes less power being closer to the SoC, but ultimately this is done to cut costs, not to enhance performance.

Both of these, QFT.

Cut it by half, but nobody using those MBPs would notice. It looks bad on a benchmark test for sure, but given how they are actually used, the CPU and encoders are going to reach their limit long before they max out the storage configuration, and the people who know they need to worry about storage speeds already know what they need because they aren't buying the base model. The base model MBP is for coffee shop writers and students who want to show off.

Apple is cutting costs, and while the average hipster may not know it, it certainly isn't to the customer's benefit.

At a minimum, Apple is pushing the ARM ISA forward by leaps and bounds beyond where it was just a few years ago.

I suppose we can accept that this is Apple being Apple, and take the good with the bad for these use-cases and design choices.

Anyone want to take bets on whether or not you can solder down an extra flash chip and double your SSD, if you are someone who has the tools and skills to solder BGA? I bet they block that from working. (Dell did the same thing with video RAM on an XPS at some point, but all it took was swapping a couple of resistors to enable the extra VRAM.)

missing some SSD chips

Irrelevant to anyone who's not an Apple user, though, because they're not sharing that.

At a minimum, Apple is pushing the ARM ISA forward by leaps and bounds beyond where it was just a few years ago.

Red Falcon

[H]ard DCOTM December 2023

- Joined

- May 7, 2007

- Messages

- 12,449

Not quite, the ARM ISA can be used for other purposes outside of macOS and macOS-related apps, especially for compiling, optimizing, testing, etc.

Irrelevant to anyone who's not an Apple user, though, because they're not sharing that.

If Apple doesn't share their work extending and/or improving the ISA to others, those extensions and improvements can't be used by other people making ARM chips, from Qualcomm to ST. That's what I meant.

Not quite, the ARM ISA can be used for other purposes outside of macOS and macOS-related apps, especially for compiling, optimizing, testing, etc.

I'm convinced that the only reason people buy Macs is to say they bought one. They then secretly use a PC.

Unless I’m playing PC games or otherwise have use of my bigger monitor, all of my computer use is on an Apple device, either my iPhone or my MBP. I usually don’t even touch the PC during the week, I get home from work and grab the MacBook.

Yeah, my Mac for work, my PC for play. I traded in my office Threadripper for a Mac Studio: only a minor improvement in performance, but I no longer need to open a window and leave the room when I run reports. The Lenovo would heat the room while wailing like a banshee.

Unless I’m playing PC games or otherwise have use of my bigger monitor, all of my computer use is on an Apple device, either my iPhone or my MBP. I usually don’t even touch the PC during the week, I get home from work and grab the MacBook.

This is why the sale of ARM failing was ultimately bad. Apple makes ARM a household name while actively detracting from the platform. Apple's ARM chips are a full generation, if not 2 generations, ahead of Broadcom, Qualcomm, MediaTek, and the rest, which actively hurts the ARM brand as manufacturers look at them and ask, “why can’t yours do that?”. ARM’s licensing model, which is what made it such an attractive platform, also hinders it as R&D costs explode.

If Apple doesn't share their work extending and/or improving the ISA to others, those extensions and improvements can't be used by other people making ARM chips, from Qualcomm to ST. That's what I meant.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

That might be true at the consumer level, but nVidia has been showing off Grace, and I'm fairly sure most of the server/high-end compute market is interested.

This is why the sale of ARM failing was ultimately bad. Apple makes ARM a household name while actively detracting from the platform. Apple's ARM chips are a full generation, if not 2 generations, ahead of Broadcom, Qualcomm, MediaTek, and the rest, which actively hurts the ARM brand as manufacturers look at them and ask, “why can’t yours do that?”. ARM’s licensing model, which is what made it such an attractive platform, also hinders it as R&D costs explode.

If Apple wanted to, they could probably re-enter the server market. I just don't think they have an interest in the support part of the market that top-end vendors need to have. The irony there is that Mac Minis are getting used a lot as dev servers.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

Yeah, I maintain a decent desktop for gaming - But I'm done with Windows for anything other than demanding gaming. There are so many simple things that MacOS just handles better. Multi-Monitor in particular feels lightyears ahead on Mac compared to Windows. The touchpad on Apple devices feels lightyears ahead as well - Although again, this is mostly due to the fact that MacOS just handles stuff like this better than Windows. Even gaming I've moved over to the MacBook as much as it makes sense.

Unless I’m playing PC games or otherwise have use of my bigger monitor, all of my computer use is on an Apple device, either my iPhone or my MBP. I usually don’t even touch the PC during the week, I get home from work and grab the MacBook.

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

I prefer browsing on my ancient 2012 mini. Fuck Windows (except for games).

Yeah, I maintain a decent desktop for gaming - But I'm done with Windows for anything other than demanding gaming. There are so many simple things that MacOS just handles better. Multi-Monitor in particular feels lightyears ahead on Mac compared to Windows. The touchpad on Apple devices feels lightyears ahead as well - Although again, this is mostly due to the fact that MacOS just handles stuff like this better than Windows. Even gaming I've moved over to the MacBook as much as it makes sense.


And Nvidia will keep that to themselves, just as Apple is keeping its stuff private. And I doubt we’ll see any of the Nvidia ARM server parts any time soon, though they will probably have some cool developer boards to play with.

That might be true at the consumer level, but nVidia has been showing off Grace, and I'm fairly sure most of the server/high-end compute market is interested.

If Apple wanted to, they could probably re-enter the server market. I just don't think they have an interest in the support part of the market that top-end vendors need to have. The irony there is that Mac Minis are getting used a lot as dev servers.

Saves space/thickness too. Apple are real big on trying to make things thin, and when you push that hard, you end up having to trade off things like socketed items. Not saying cost isn't a reason as well, but Apple, and others, get on the "SOLDER ALL THE THINGS" bandwagon to try and make ultra-thin computers. You can see this with Dell, where the amount of things soldered on tends to be proportional to the size, as well as the cost.

Let's be honest here, the reason Apple solders anything to the board is to save money, just like all other manufacturers.

I am in the same place. PC for gaming (and file management; Finder still kind of sucks IMO) but Macs for everything else. I miss being able to dual-boot Windows on an MBP for travel gaming, but that is a small sacrifice for the sheer power+efficiency I get with M1/M2.

Yeah, my Mac for work, my PC for play. I traded in my office Threadripper for a Mac Studio: only a minor improvement in performance, but I no longer need to open a window and leave the room when I run reports. The Lenovo would heat the room while wailing like a banshee.

Anyone who gives a fuck about speed is getting more than 512GB of storage. It's like bitching about AMD only having one CCX on their low-end CPUs.

I almost never touch Apple, but I need to do some app dev now apparently. urgh. And my surface got biffed out a fourth storey window by the ex. So I ordered up an Air. I'm looking forward to it, but like, I'm not going to be making it sweat, it can't run fortnite.


The Mac version of Fortnite is still technically around... if you'd already downloaded it before the Apple-and-Google-vs-Epic uproar, anyway. And I'm sure compiling your apps will put some load on it!

Anyone who gives a fuck about speed is getting more than 512GB of storage. It's like bitching about AMD only having one CCX on their low-end CPUs.

I almost never touch Apple, but I need to do some app dev now apparently. urgh. And my surface got biffed out a fourth storey window by the ex. So I ordered up an Air. I'm looking forward to it, but like, I'm not going to be making it sweat, it can't run fortnite.

DukenukemX

Supreme [H]ardness

- Joined

- Jan 30, 2005

- Messages

- 7,937

The point is that the M2 Pro has a slower SSD than the M1 Pro due to Apple being cheap. It's also going to wear out faster, since there are fewer chips to spread the wear across. We're talking about a $2,000 starting price for a laptop that has cost cutting in order to save Apple a few dollars per laptop. It's not even a small difference, as it has 40% slower read speeds.

It's fast enough and the buyers don't care.

I also heard Apple is into recycling, but clearly that's a lie when all these soldered-on SSDs inevitably fail and you have to junk a laptop.

Saves space/thickness too. Apple are real big on trying to make things thin, and when you push that hard, you end up having to trade off things like socketed items. Not saying cost isn't a reason as well, but Apple, and others, get on the "SOLDER ALL THE THINGS" bandwagon to try and make ultra-thin computers. You can see this with Dell, where the amount of things soldered on tends to be proportional to the size, as well as the cost.

Lots of people like you saying they rarely use their PC but I think you're all lying, especially after seeing what this guy went through to use his Macbook.

Unless I’m playing PC games or otherwise have use of my bigger monitor, all of my computer use is on an Apple device, either my iPhone or my MBP. I usually don’t even touch the PC during the week, I get home from work and grab the MacBook.

UnknownSouljer

[H]F Junkie

- Joined

- Sep 24, 2001

- Messages

- 9,041

Perhaps you should look at the numbers and not go off of percentages. Even on a single chip, the SSD far outstrips every SATA SSD. If you're a general tasker, you will not notice the difference; you'd have to have specific applications that are I/O intensive for it to matter. It's ~2800 MB/s vs ~1400 MB/s (rounded down, even) on a dual-chip vs single-chip M2 Mini, or ~4900 MB/s vs ~2900 MB/s (also rounded down) on the MacBook Pro.

The point is that the M2 Pro has a slower SSD than the M1 Pro due to Apple being cheap. It's also going to wear out faster, since there are fewer chips to spread the wear across. We're talking about a $2,000 starting price for a laptop that has cost cutting in order to save Apple a few dollars per laptop. It's not even a small difference, as it has 40% slower read speeds.
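For anyone who wants to sanity-check the percentages against the absolute numbers, here is a minimal back-of-the-envelope sketch in Python. It only uses the rounded sequential-read figures quoted in this post; the 50 MB file size (`DOC_MB`) and the helper names are hypothetical, and the model assumes a purely sequential read with no latency, caching, or file-system overhead, so treat it as an illustration, not a benchmark.

```python
# Back-of-the-envelope SSD comparison using the rounded figures quoted in the thread.
# Assumption: purely sequential reads; latency, caching and random I/O are ignored.


def percent_drop(fast_mbps: float, slow_mbps: float) -> float:
    """Percentage decrease when going from the faster to the slower read speed."""
    return (fast_mbps - slow_mbps) / fast_mbps * 100.0


def seconds_to_read(size_mb: float, mbps: float) -> float:
    """Idealized time to read size_mb megabytes at a constant mbps rate."""
    return size_mb / mbps


# Quoted sequential read speeds in MB/s as (faster, slower) pairs.
QUOTED = {
    "M2 Mini": (2800, 1400),
    "MacBook Pro": (4900, 2900),
}

# Hypothetical file size, chosen only for illustration.
DOC_MB = 50

if __name__ == "__main__":
    for name, (fast, slow) in QUOTED.items():
        print(
            f"{name}: {percent_drop(fast, slow):.1f}% slower; "
            f"{DOC_MB} MB read takes {seconds_to_read(DOC_MB, fast):.3f}s "
            f"vs {seconds_to_read(DOC_MB, slow):.3f}s"
        )
```

The MacBook Pro pair works out to roughly a 41% drop, in line with the 40% figure quoted earlier in the thread, while even the slowest quoted figure reads the hypothetical 50 MB file in well under a tenth of a second. Workloads dominated by small random reads would behave differently.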

I highly doubt anyone buying a base-level MBP will notice NVMe Gen 3 speeds as being "slow". Even power users would have a hard time saturating that. There are precisely no general users I could put either of those two MacBook Pros in front of who would show me a task where they'd notice a difference (other than literally a benchmark). I'm not sure if I could find a power user who would notice the difference.

Should Apple have given the faster speed to everyone and doubled the silicon at every level? Perhaps, it definitely "feels bad man". But in terms of the usable difference? Doesn't really matter much.

Or you could have Apple repair it.

I also heard Apple is into recycling, but clearly that's a lie when all these soldered-on SSDs inevitably fail and you have to junk a laptop.

Considering we're on year 2+ of ARM Macs and I haven't heard of anyone having a problem (even on 8GB machines that are stuck having to swap constantly), I'm not sweating it.

Also, normal people get rid of their desktops/laptops every 3-5 years on the outside. That's another point you don't seem to understand and that I have had to bring up ad nauseam. 95%+ of these machines' useful life will have expired, and I doubt anyone will get anywhere close to wearing out the SSD.

Lots of people like you saying they rarely use their PC but I think you're all lying, especially after seeing what this guy went through to use his Macbook.

We can't help other people's experiences. I currently happily play all of my emulators and D3 in macOS with zero problems. I also played through the Tomb Raider series and DX:HR and DX:MD also in macOS. I finished DOS2 on macOS, I have DOS1 and at some point I'll finish it. I'm looking forward to Baldur's Gate 3, which also is coming to macOS (thanks Larian). All of those games have Mac ports. Don't believe me? I don't really care.

I would gladly bet all of my money and win that I'm being truthful 10 out of 10 times. Get me on the stand, lie detector test, whatever. Meanwhile you can't get it through your head that people actually like these machines because you're not capable of seeing two sides of an argument.

EDIT: Okay, this guy is an idiot. He should've spent the $20 to simply buy a USB-C to HDMI cable or a USB-C to DP cable, and he wouldn't have had any problems. Having whatever that is in the middle at 6:20 is the cause of all of his issues. And if he wanted all those other ports, he could have easily gotten them on the other open port, as well as power. A huge part of all his mistakes was simply not using the correct peripherals for the task.

He also bought the worst machine for gaming, if that's his intended purpose. The whole point of this clickbait video is to show the worst laptop for gaming in the worst light, ignoring the rest of the lineup that has 4x TB ports, or 2x TB ports and multiple USB (Mac Mini).

He also didn't use Steam or GOG for some reason. You know, two platforms with everything that easily allow you to scroll through macOS games? Then he tried playing titles above 1080p and refused to use any resolution other than native, even though no $1000 PC laptop would be capable of 2.8K resolution at reasonable speeds either.

If you honestly think that's the experience on every other Mac machine, you're the idiot in this case. Even more than this guy who can at least make money off of his clickbait video.

The only thing he said of any relevance is that most games don't have macOS ports so a majority of games are "locked away" as he puts it. But you'll never see anyone say that there aren't more games available for Windows than macOS. Obviously true, it's just whether that matters or not. And yeah, Mac only gamers are also obviously a minority. There is no dispute there.


This was tried with the slots on the Studio and it didn't work.

Anyone want to take bets on whether or not you can solder down an extra flash chip and double your SSD, if you are someone who has the tools and skills to solder BGA? I bet they block that from working. (Dell did the same thing with video RAM on an XPS at some point, but all it took was swapping a couple of resistors to enable the extra VRAM.)

The weird thing is Duke's obsession with the unsupported belief that people primarily care about performance for gaming.

Don't get me wrong, there are plenty of people who buy faster computers with games in mind. But many of us buy speedier systems because they better meet our productivity needs. I regularly juggle several apps at a time for work, including media editing tools — I don't want my computer bogging down if I can avoid it. Even a 'casual' user may not want their computer to choke on multiple apps, or to have enough power for editing family photos and videos for years down the road.

No one here is suggesting that you buy a Mac primarily for gaming. The library is much smaller, and Apple's GPUs aren't going to threaten high-end AMD or NVIDIA cards. But you can do some gaming on them, and for many of us playing the occasional game is good enough. We're willing to make that tradeoff to get a modern Mac's other advantages, such as strong general performance (particularly for media editing), super-quiet operation, compact form factors and long battery life. A 14-inch MacBook Pro is a very capable portable editing rig, and won't dramatically throttle on battery power like Windows laptops. A Mac mini is now a pretty solid desktop; the base M2 model is faster, quieter and much smaller than a comparable machine from Dell or HP, while the M2 Pro version is a good creative tool.

Oh, and on the NAND question: the evidence suggests it's more likely that Apple is trying to reduce the number of NAND chips at least partly due to supply shortages. You can't get faster storage from a product that isn't shipping.

