III_Slyflyer_III

[H]ard|Gawd

- Joined

- Sep 17, 2019

- Messages

- 1,248

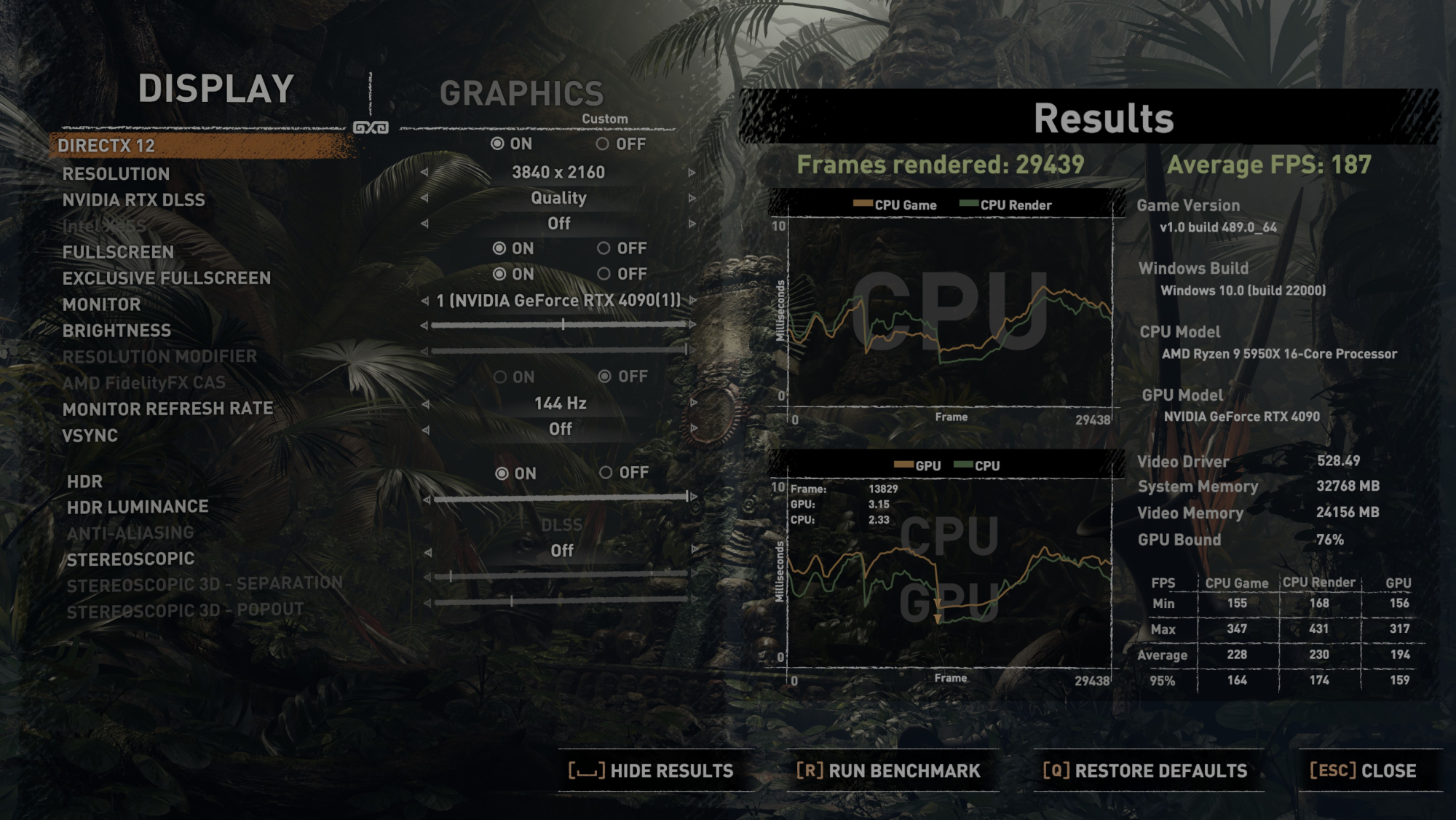

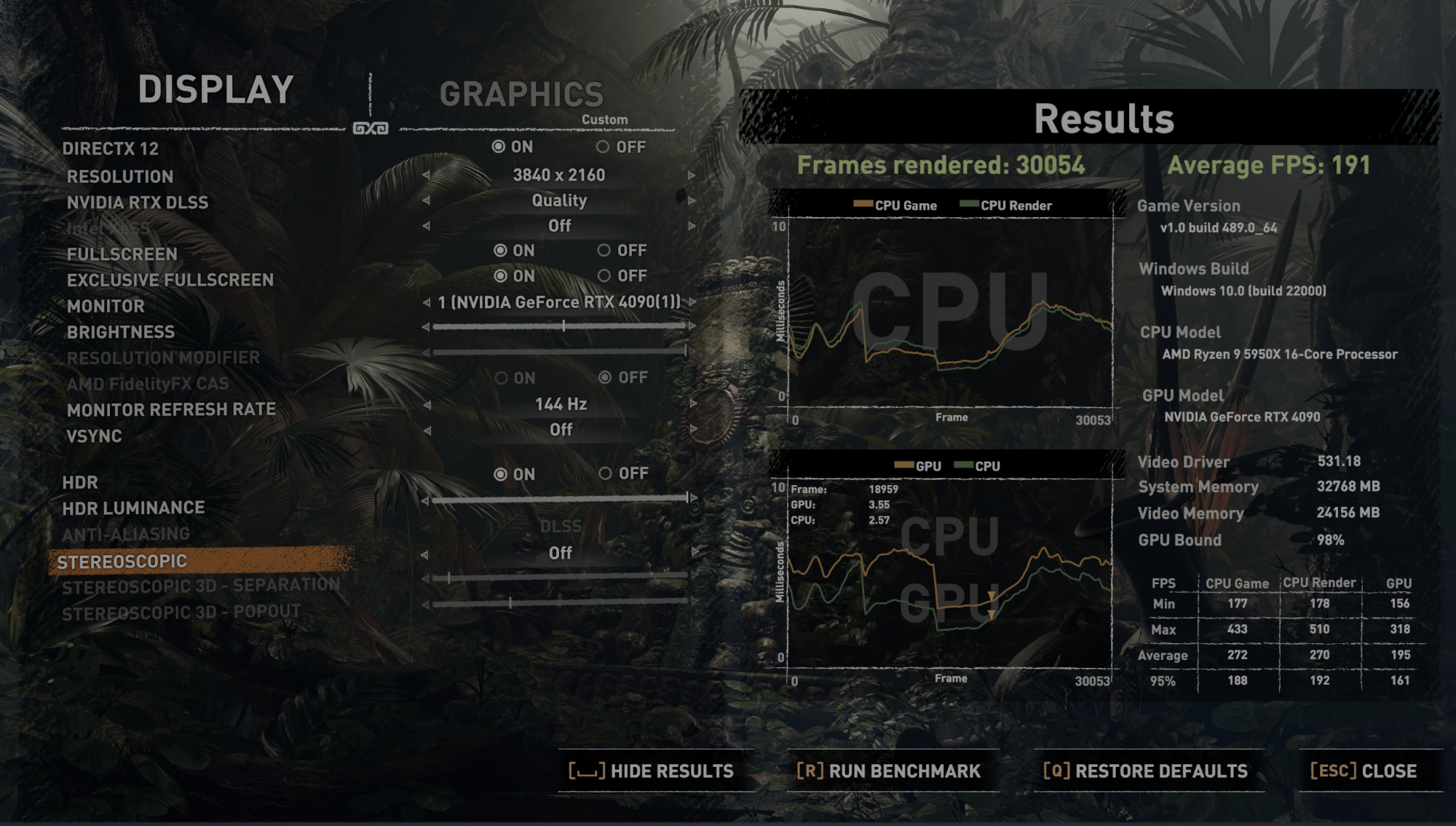

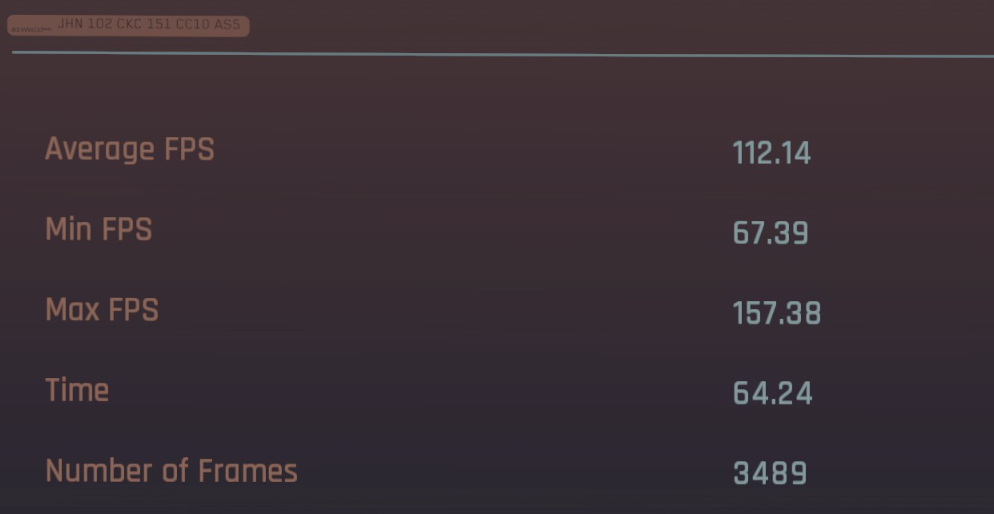

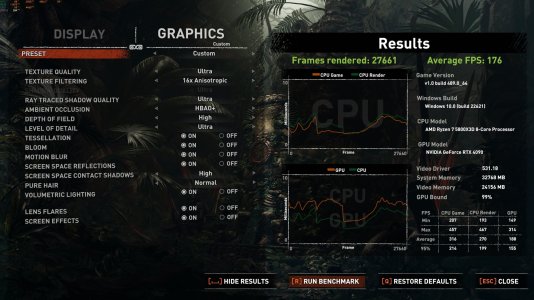

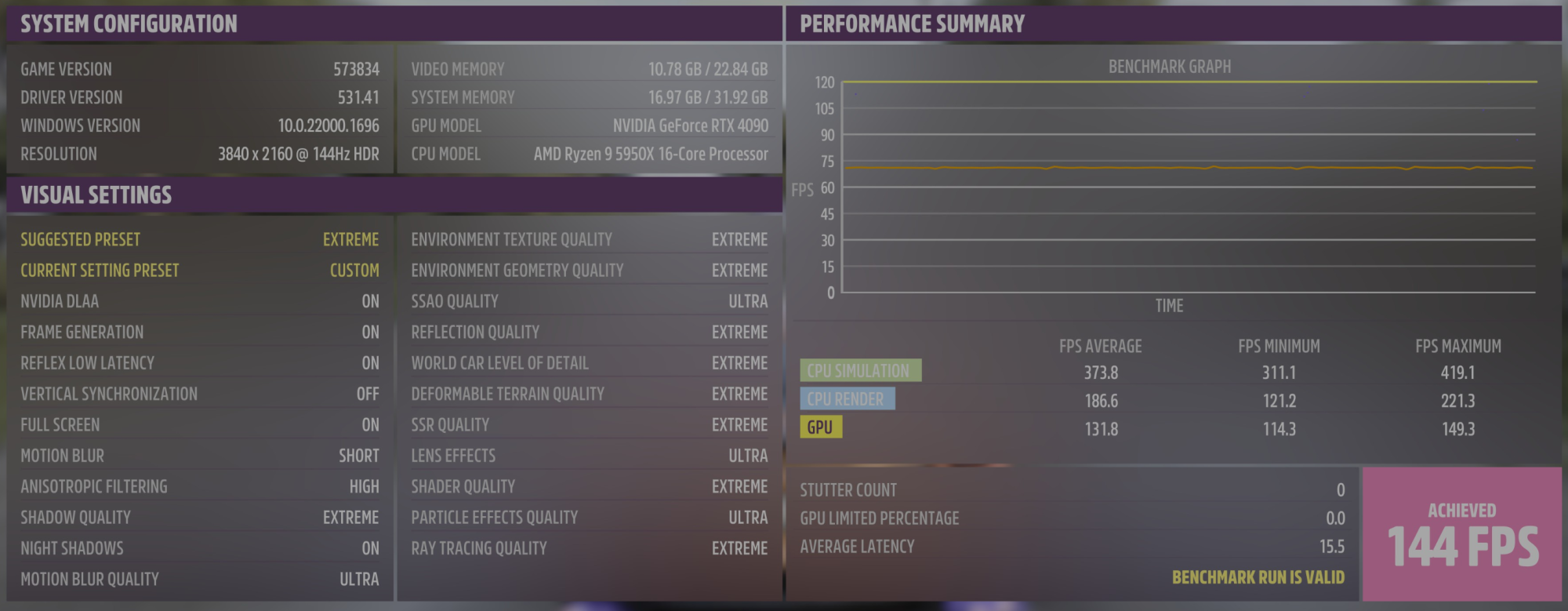

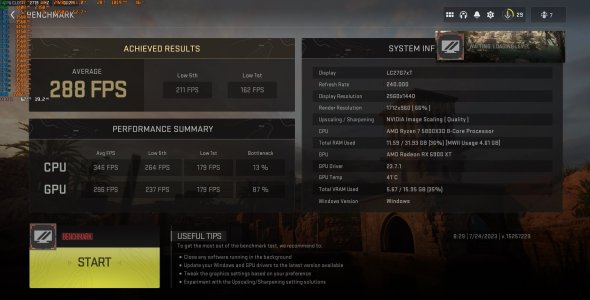

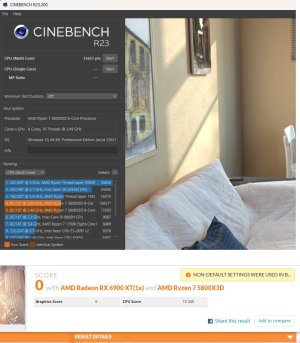

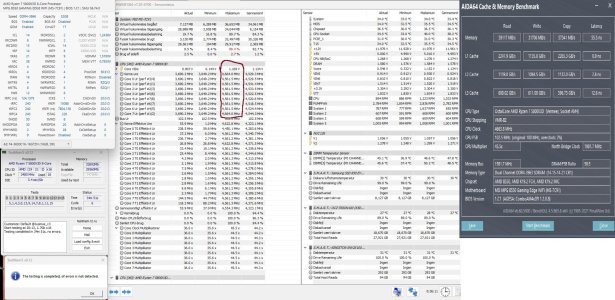

Awesome... I'll do some runs in SoTTR and CP2077 tomorrow after work and snag some screenshots and settings. I don't expect this trick to match or beat a 5800X3D in games that specifically take advantage of 3D cache, but I am extremely surprised how this all-core OC smoothed everything out. Night and day, man; still impressed a week later.

Oh, with such a high overclock on the GPU, of course your fps will be high.

I can download other games if that helps.

Let me know which ones. I will download SoTTR overnight. I also have CP2077. Post your settings and I can run these benches.
