Araxie

Supreme [H]ardness

- Joined: Feb 11, 2013

- Messages: 6,463

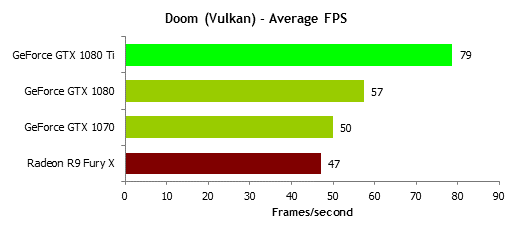

my point was that AMD keeps competing with Nvidia's mid-range cards and can come close enough...if AMD can actually go for it and produce a really high-end Vega card, then with their improved async DX12 architecture and DX12/Vulkan performance they can compete with Nvidia on the high end...especially when taking into account AMD's aggressive pricing

Doom is the only new game that has Vulkan support so there's nothing else to compare it with

http://www.eurogamer.net/articles/d...n-patch-shows-game-changing-performance-gains

Everything you can find on the web right now is going to be useless since the introduction of Nvidia's latest drivers (378.78), which include DX12 performance optimizations. According to Nvidia's claims, that means up to a 33% performance improvement in Rise of the Tomb Raider, 23% in Hitman, 9% in AoTS, and another 9% in Gears of War 4. That by itself could pretty much mean the actual performance difference versus AMD is mostly nullified, to put it mildly.
