Factum

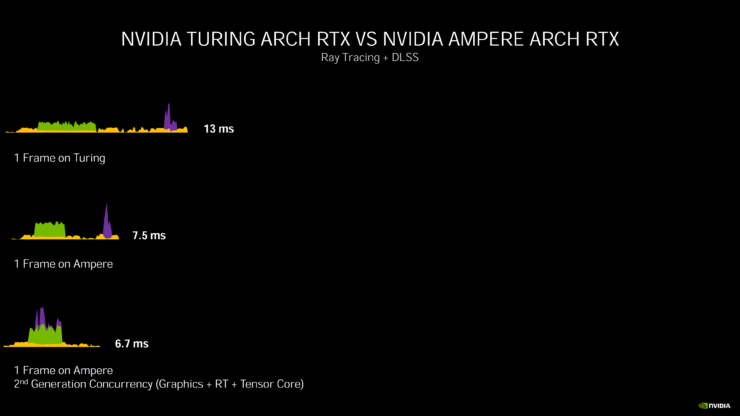

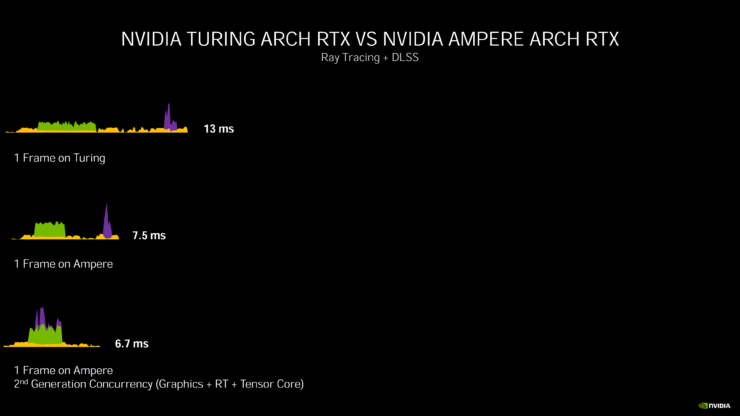

I was looking over the Ampere slides when this caught my eye:

1 frame on Turing takes 13 milliseconds. Raster + Raytracing + DLSS. Kinda running concurrently, kinda not. Raster runs for the whole 13 milliseconds. Raytracing runs for 1/3 of the frame. And DLSS has a quick sprint near the end.

1 frame on Ampere takes 6.7 milliseconds. Raster + Raytracing + DLSS running fully concurrently. Raster starts, raytracing starts 1/4 into the frame, followed almost immediately by DLSS, and the last half'ish of the frame is just raster.

On Turing the CUDA cores run for the whole frame; the raytracing runs concurrently and ends, raster keeps going, and then DLSS engages and ends. A maximum of two units are firing concurrently at any given time.

But on Ampere all three unit types run concurrently, meaning more parts of the chip are "on" at the same time.

So a frame on Ampere takes about 50% of the time in the slide (6.7 ms vs. 13 ms), meaning it should put out roughly double the frames compared to Turing while keeping more of the chip "on". So it should also draw more power while doing so, since more work is being done concurrently.
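For anyone who wants to check the "double the frames" math, here is a quick sketch in Python. The only inputs are the two frame times read off the slide (13 ms and 6.7 ms); the `fps` helper and the variable names are just made up for illustration. It says nothing about power draw, only frame rate.

```python
def fps(frame_time_ms: float) -> float:
    """Frames per second for a given per-frame time in milliseconds."""
    return 1000.0 / frame_time_ms


turing_ms = 13.0  # frame time read off the slide for Turing
ampere_ms = 6.7   # frame time read off the slide for Ampere

turing_fps = fps(turing_ms)
ampere_fps = fps(ampere_ms)
speedup = turing_ms / ampere_ms  # how many times faster a frame finishes

print(f"Turing: {turing_fps:.1f} fps")   # Turing: 76.9 fps
print(f"Ampere: {ampere_fps:.1f} fps")   # Ampere: 149.3 fps
print(f"Speedup: {speedup:.2f}x")        # Speedup: 1.94x
```

So going by the slide alone it works out to about 1.94x, a bit under a clean doubling.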

Then again, I could just be too tired and not thinking straight.

