I'm pretty certain the rumors were real. They just couldn't get the cards to reliably draw that level of power without melting and lighting your system on fire. If they could have done it, they would have, and charged us another grand on top of what they're already asking.

https://www.techspot.com/news/96261-nvidia-rtx-titan-ada-reportedly-canceled-after-melted.html

500W+ is where the OAM format comes in. Even data centers, with all their advanced cooling and power delivery technologies, very rarely push more than 450W on a traditional PCIe interface; it's just too risky.

AMD's RX 7900 XT cards allegedly support unannounced DisplayPort 2.1 connectors

- Thread starter kac77

I got a feeling they will be close to 4090 speeds while being cheaper and using less power

This may be a little too optimistic.

-Close to 4090

-Cheaper

-Uses less power

Choose one.

If it’s close to the 4090 and significantly cheaper, all AMD does is guarantee the scalpers correct that pricing incongruity for them.

Legendary Gamer

I chose cheaper (slightly, like maybe 200 bucks on the top end).

AMD is valued ahead of Intel these days, so there's no way they keep their prices low.

Rockenrooster

The problem with rumors is, well, they are rumors. Everyone was claiming that the 4090s would use 450+ watts and possibly 500 at stock. Only to find that they are as power hungry as a 3090ti.

Uh, what is the 3090Ti's power usage again???

Oh yeah... 450W, and some AIB cards are 500W stock. And the 4090 stock is 450W (very close to 500W avg in FurMark)

Brackle

If I remember, the reviews were actually showing less power usage than a 3090ti.

Armenius

Correct.

Rockenrooster

It probably also depends on which game you test.

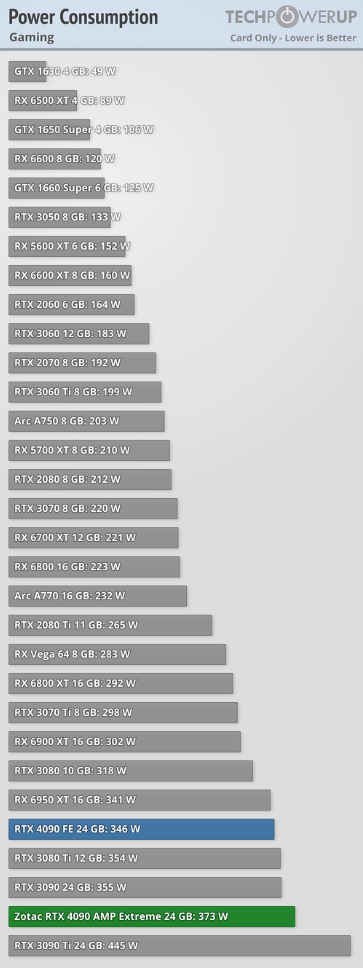

Hardware Unboxed used Halo Infinite and the 4090 drew 50 watts less than the stock 3090Ti (although they measure total system power, so the CPU may be adding a few watts).

LTT used F1 2022: the 4090 drew 425W and the 3090Ti drew 450W.

FPS Review uses multiple games; in Metro Exodus the 4090 peaked at 444.8W.

On average it does use less power than the 3090Ti, but not by much at all.

That chart shows the 4090 using 100W less power than a 3090Ti. No other review site I found even comes close to that; they are all within 0-50W of each other. They must have gotten a much better sample than the others.

It's always good to review multiple sources; never trust just one single source for things like this.

There are also a lot of other testing conditions as well; in cases where you are CPU bound, for instance, the GPU isn't going to be working as hard.

Rockenrooster

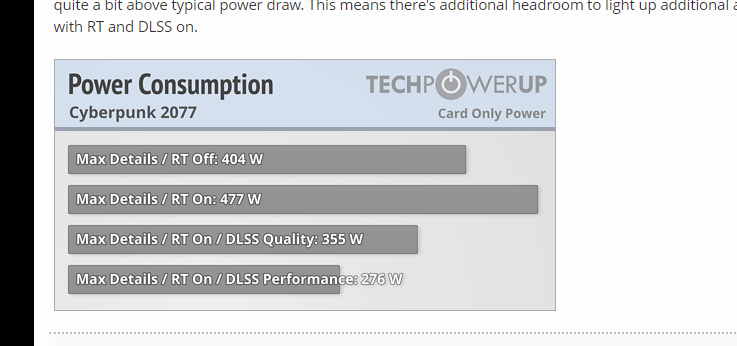

Did some more digging. I'm pretty sure TechPowerUp's chart is very misleading, because they say they use CP2077 @1440p with no ray tracing for the "gaming power consumption" test. I'm pretty sure it's CPU bottlenecked at that point, because the 4090 goes from being ~30% faster than the 2nd-fastest card @1440p to over 50% faster than the 3090ti @4K.

They even note on the same page that when you enable RT, power usage jumps up by over 70 watts and there is no equivalent chart in their 3090Ti review...

They really need to update their power consumption testing.....
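A rough way to see why a CPU bottleneck squeezes both the performance gap and the power draw: treat each frame as taking whichever is longer, the CPU's work or the GPU's work. This is a toy model under that single assumption; the millisecond figures are made up to mirror the ~30% vs. 50% gap described above, not taken from any review, and `frame_stats` is a hypothetical helper.

```python
def frame_stats(cpu_ms: float, gpu_ms: float) -> tuple[float, float]:
    """Toy bottleneck model: return (fps, fraction of each frame the GPU is busy)."""
    frame_ms = max(cpu_ms, gpu_ms)  # the slower stage sets the frame time
    return 1000.0 / frame_ms, gpu_ms / frame_ms


# Hypothetical per-frame costs in milliseconds (illustrative, not measured).
CPU_MS = 6.0
GPU_MS = {
    "faster card": {"1440p": 4.0, "4K": 8.0},
    "slower card": {"1440p": 7.8, "4K": 12.0},
}

for res in ("1440p", "4K"):
    fast_fps, fast_busy = frame_stats(CPU_MS, GPU_MS["faster card"][res])
    slow_fps, _ = frame_stats(CPU_MS, GPU_MS["slower card"][res])
    lead = fast_fps / slow_fps - 1
    print(f"{res}: faster card leads by {lead:.0%}, its GPU is busy {fast_busy:.0%} of each frame")
```

In this toy setup the faster card sits idle for about a third of every 1440p frame waiting on the CPU, which is one reason a power figure measured in that scenario can understate what the card draws when it is fully loaded at 4K.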

It will use the same as the 3090Ti in the heaviest pure-GPU scenarios, but a lot of the time (i.e., real-world gaming) it will use less, sometimes significantly so. Not only is it more efficient in general, but this gen of card is doing a better job of managing power depending on actual needs. For example, if you play with a framerate cap, the power usage drops very significantly now; Ampere was very bad at that (unlike AMD, which did well).

Furious_Styles

Well, in the HU review there were numerous discrepancies in performance of the 4090 at 1440p vs. 4K.

Rockenrooster

Yep, and that is a consequence of being CPU bottlenecked, barring any driver issues...

Axman

And if you are so inclined to eat a hat as you say. Might I recommend something along the lines of these.

Are those built using chocolate-dipped Pringles?

I think it'll be a little of all three, but AMD will let AIBs make their own crazy designs that are maybe just one.

Belgian chocolate crisps, Rolos, and strawberry licorice lace.

Axman

Oh thank God.

I would love to be proven wrong, but my opinion is that AMD won't even come close this generation. The generational leap the 4090 displays is hefty, and I don't think anyone was expecting this amount of uplift from both the new architecture and the move to TSMC's 4N node.

In before biggest Navi lands between the 3070 and 3080, using more power than a 3080 and priced at $549.99, just to have NV release a 3070Ti that sits just above biggest Navi while sipping power in comparison, for $599.99.

Just my prediction, I could be totally wrong; but just how many times does history have to backhand-slap people before we learn the lesson?

No offense, but AMD has consistently been releasing GPUs that consume more power but provide lower performance than their competition. So why would you even think that they're going to magically require less power to still come second best this time around?

Now don't get me wrong. Nvidia certainly increased the power requirements of the 30 series. But it seems strange to me that people keep thinking that AMD will magically produce a GPU that can somehow beat their competition. Competition, mind you, that has been consistently pushing the envelope in GPU technology and actually moving the industry forward.

I wait until independent reviews are up and verifiable before even thinking about stating something like that. I'm not about to delude myself just to get let down yet again. It's been too many times now; I know I've learned my lesson, I just wish more people had as well.

Worth about as much as the last few "opinions". Somewhere between jack and shit.

NightReaver

To some people, AMD should always remain a 2nd-rate brand. I mean look, they're not even going to use such "envelope-pushing" features as DP 1.4....

DP is a shitshow right now; VESA didn't start certifying DP 2.0 devices until May of this year.

Here's the announcement they made on May 10th, 2022:

"Certification of UHBR reference devices must undergo rigorous testing to ensure they meet the requirements outlined in the DisplayPort 2.0 CTS. We’re excited to announce that a set of reference silicon has been verified to meet the requirements of the DisplayPort 2.0 spec through our certification program. VESA now has the testing infrastructure in place to evaluate and certify OEM end products, and we are ready to work with the ecosystem to bring next-generation DisplayPort chipsets and IP to market."

Their initial partners for this announcement were AMD, MediaTek, and Realtek.

Announcing a spec as complete in 2019, then taking 3 years to get the infrastructure in place to actually start certifying hardware against it, is kinda BS. They can blame COVID all they want; they dropped the ball on it hard.

So while it annoys the crap out of me that Nvidia is still on DP 1.4, I sort of get it. Are there any 4K DP 2.0 monitors even available for purchase right now? Or any resolution, for that matter? I get the whole chicken-and-egg thing, but still, the 4000 series was likely signed off on and in production before May of this year.

Teenyman45

My biggest concern with DisplayPort has always been power feedback through the cable's power pin, which would sometimes burn out a video card.

I felt so far ahead of the times when I hooked up a TV capture card to my computer and bypassed the need to record to a VCR... but the 2GB file size limit and lack of live-at-record file compression capped me to 20 minutes of video at a time per file.

If anything, ports and cables mattered more back then than today, because everything was analog and likely to cause interference with each other. Now extreme bandwidth matters only if you're trying to push well into triple-digit framerates at 4K on a TN or VA panel. Regarding the other features governed by digital cable specs, are you running THX Dolby Atmos Surround extra-hyper-mega-super-unobtani direct sound through a TV or monitor's built-in speakers?
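To put rough numbers on where "extreme bandwidth" starts to matter, here is a back-of-the-envelope sketch comparing the uncompressed pixel data rate of a 4K signal against the usable link rate of DP 1.4 (HBR3) and DP 2.0 (UHBR20). Assumptions, all simplifications: four lanes, only line-coding overhead counted (8b/10b for HBR3, 128b/132b for UHBR20), blanking intervals ignored, and no Display Stream Compression (DSC), which changes the picture considerably. `LINK_GBPS` and `video_gbps` are illustrative names, not from any library.

```python
# Usable link rate in Gbit/s: 4 lanes x per-lane rate x line-coding efficiency.
# Only line coding is counted; FEC and other protocol overheads are ignored.
LINK_GBPS = {
    "DP 1.4 HBR3": 4 * 8.1 * 8 / 10,        # 8b/10b coding -> 25.92
    "DP 2.0 UHBR20": 4 * 20.0 * 128 / 132,  # 128b/132b coding -> ~77.6
}


def video_gbps(width: int, height: int, hz: int, bits_per_pixel: int) -> float:
    """Uncompressed active-pixel data rate in Gbit/s (blanking ignored, no DSC)."""
    return width * height * hz * bits_per_pixel / 1e9


for hz, bpp in ((120, 24), (120, 30), (144, 30), (240, 30)):
    need = video_gbps(3840, 2160, hz, bpp)
    fits = [name for name, cap in LINK_GBPS.items() if need <= cap]
    print(f"4K @ {hz} Hz, {bpp} bpp: {need:.1f} Gbps, fits on: {', '.join(fits)}")
```

Under these simplifications, 8-bit 4K at 120 Hz squeezes into DP 1.4, while 10-bit 4K at 120 Hz and above only fits on the DP 2.0 link; real display timings add blanking, which pushes the requirements somewhat higher.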

"Did you ever care what chip was used inside your VCR?"

And I knew a lot of people who cared about how many heads their VCRs had, and not just because more heads were in more expensive VCRs and therefore must be better. Were you the one responsible for making sure it didn't just blink 12:00 all the time? Did you set your VCRs to channel 3 or 4? Did you have a switching box for a NES or Atari?

Hmmm never thought about that....

Our first VCR was a Sony behemoth with knobs and switches I never understood (it looked like a piece of legit '80s stereo equipment)

- I recall the biggest feature was time-based recording of some sort

Now, I just feel old....

next-Jin

I mean, it’s a little more than average; what makes it so high are those DLSS 3 numbers, which are just ridiculous. 120fps+ at 4K max RT/settings with DLSS Quality in Cyberpunk is just crazy.

GDI Lord

I do. You should hear the stuff I don't broadcast.

We'd love to!!

Podcast called “frozen shit talk”

Zarathustra[H]

So, is this a new connector, or just a new backwards-compatible spec?

I feel like the industry needs to calm the F down with all of these different connectors requiring adapters.

I mean, if we could all just settle on one mainstream connector (I vote mini DP), maybe throw in an HDMI for good measure for TV users, and then keep it that way for like 20 years without changing it, that would be great.

horrorshow

1. Blinking 12:00? The VCR I mentioned had scrolling numbers for its clock, like an old bike lock

2. Channel 3 or gtfo heh

3. Toward the end of the VCR era, my buddy and I had a multitude of switch boxes connected to his first-gen TiVo, connected to an old-school pirated cable brown box, along with 2 VCRs for... things, and a plethora of gaming systems etc.

- This was ultimately replaced with renting 8 Netflix DVDs at a time and ripping them to DivX/MKV using a dedicated box, burning them to CDs, and putting them in giant Case Logic binders

.... There was a time.

HUH? I am confused here. RDNA1 to RDNA2 was almost double the performance. Now you have more than double the shaders, likely a fatter bus, and a revamped architecture. The 4090 is good, but I'm not sure "blown away" is the word I would use. I think Ampere had a more consistent raster uplift compared to the gen before. Maybe the RT leap is bigger, sure, since that's where their focus is, but not overall raster.

It's not too hard to believe AMD might do just fine, given the potential specs and what they achieved raster-wise with RDNA 2.

I think the rumors got the 4090's actual power wrong. They probably didn't know the board was designed with that much headroom. They designed it with a 4090Ti in mind. That will probably be a 525W+ card with the same 600W headroom.

So, before we go all doom and gloom on the new AMD cards, let’s be patient and wait and see. I am really interested as a hardware nerd to see how CrossFire on a single GPU die works! Either way, we know this generation AMD can keep costs and power down.

Thunderdolt

The rumors were created by unqualified and inexperienced people reading a leaked "design spec" for 600W and thinking that meant it would run at 600W during ordinary gaming. Unfortunately for them, that's not how these specs work.

It was designed as a 450W card from the start. The extra 150W was basic safety factor as well as to leave room for overclockers. This is how design specs work. With all four connectors plugged in, the factory BIOS allows you to go +33% on the TDP. That brings the TDP to... drumroll please.... 598.5W.
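The arithmetic in that post, spelled out as a small sketch. The 450W base and the +33% slider come from the post; the 150W rating per 8-pin PCIe input (four of them feeding the adapter) is an added assumption, and `power_limit_w` is a hypothetical helper name.

```python
BASE_TDP_W = 450.0   # stock board power, from the post
SLIDER_MAX = 1.33    # +33% power-limit slider, from the post
EIGHT_PIN_W = 150.0  # assumed rating of one 8-pin PCIe power input
CONNECTORS = 4       # "all four connectors plugged in"


def power_limit_w(base_w: float, slider: float) -> float:
    """Board power limit with the slider at the given multiplier."""
    return base_w * slider


limit = power_limit_w(BASE_TDP_W, SLIDER_MAX)
budget = EIGHT_PIN_W * CONNECTORS
print(f"max power limit: {limit:.1f} W")  # prints: max power limit: 598.5 W
print(f"connector budget: {budget:.0f} W, margin: {budget - limit:.1f} W")
```

Keeping the slider multiplier separate from the connector budget makes the point of the post easy to see: the maxed-out limit lands just under what four 150W inputs can supply, so the 600W figure is headroom, not the expected gaming draw.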

RDNA 2 managed to come close to Ampere while having a significant node advantage. Samsung 8nm was about the same as TSMC 12/10nm but nowhere close to TSMC 7nm. RDNA 3 may have almost 2x the transistor count of RDNA 2, but Lovelace has 3x the transistor count of Ampere.

Raster performance is great, but studios are moving away from raw raster. The current development toolsets heavily favour ray-traced asset design and textures.

AMD is going to put out a good card, there is no doubt. I thoroughly believe the 1440p and 1080p race this year is going to be tight and overcrowded with choice.

At 1440p and below, AMD is going to be a force; it’s going to be RDNA 3 vs. Ampere and Lovelace, and I believe they are going to come out swinging.

Nvidia is showing us the top end, but that’s like 5% of the PC gaming market at most. The real fight hasn’t started yet. 4K is good for bragging rights, but that’s not where most of the market is. I hope this is the year AMD positions itself to actually claim some market share; the RDNA 3 design should give them huge yields and allow them to step up their game. AMD doesn’t need to compete with the 4090 or even the 4080 16GB; it’s everything below that they need to step up to, and I think this is the year they are in a position to do just that.

NightReaver

We'll see just how many new and popular games in the next couple of years actually make good use of this. We've been hearing this for the past 2 GPU generations.

Anything using the current Unreal, Unity, CryEngine, Lumberyard, or Frostbite engines. Their dev toolsets all prioritize ray tracing over rasterizing at this point, same with the bulk of the asset and texture libraries.

We have to remember the last 2 years have been an oddity. The average state of PC gaming actually regressed over covid as parts were unavailable and projects stalled out.

NightReaver

Again. We'll see. When RT matters to the masses, I'll be right there alongside them.

Devs want it to matter; it’s far cheaper for them to optimize for ray tracing than rasterizing. Ray tracing automates what is a very laborious and expensive part of game development, and they are putting fewer and fewer resources into that manual process.

I am confused here. Isn't raster important regardless of whether you are doing ray tracing or not? It all plays a part, no?

It does, but lighting effects take up the vast bulk of the “optimization” process.

In a development art department, they have whole teams dedicated to taking art assets, converting them to texture assets, optimizing lighting meshes, placing scenes, and tweaking perceived light sources, shadows, and reflections.

So when people complain that a game runs poorly because it’s “not optimized” the bulk of that is from art assets not being handled properly. Or the art assets having to interact with the physics engine in a way they didn’t properly tweak.

The current texture and development libraries are being shifted over to a ray-traced approach, replacing entire departments with a server and a few button clicks that take minutes to complete what took people hours or days to do. So raster is still important to a point; it still makes up the bulk of the visual work.

But what kind of frame rates do you get in most high-end games at high resolutions but low settings? They rip along. Rasterization is good enough for the most part, but it’s those visual eye-candy bits that make up the hard parts, and that is where the expenses are for both the developer and the hardware.

horrorshow

It's like "shaders" back in the GeForce 2/3 days.... "They used this tech in Jurassic Park?? No way.."

It took until DX 8.1 for shaders to become a standard (with HL2/Doom3).

Honestly, I'm much more intrigued by DLSS/FSR than ray tracing.

Until there's a universal standard for ray tracing (which I'll assume AMD will decide with consoles, etc.), we're still at the GLQuake/VQuake stage IMO.

Honest question, what good would DP 2.1 actually be on it if it underperforms the NV 4000 series?

Shut up, the 7900XT also has PCIe 6.0

(I'll see myself out)

WHAT, it operates sub-zero and doubles as a slushy machine!!!

CAD4466HK

Good post... but Doom 3 was OpenGL.

And its engine was released under the GPL here:

https://github.com/id-Software/DOOM-3-BFG
