Smoked Brisket

Gawd

- Joined

- Feb 6, 2013

- Messages

- 786

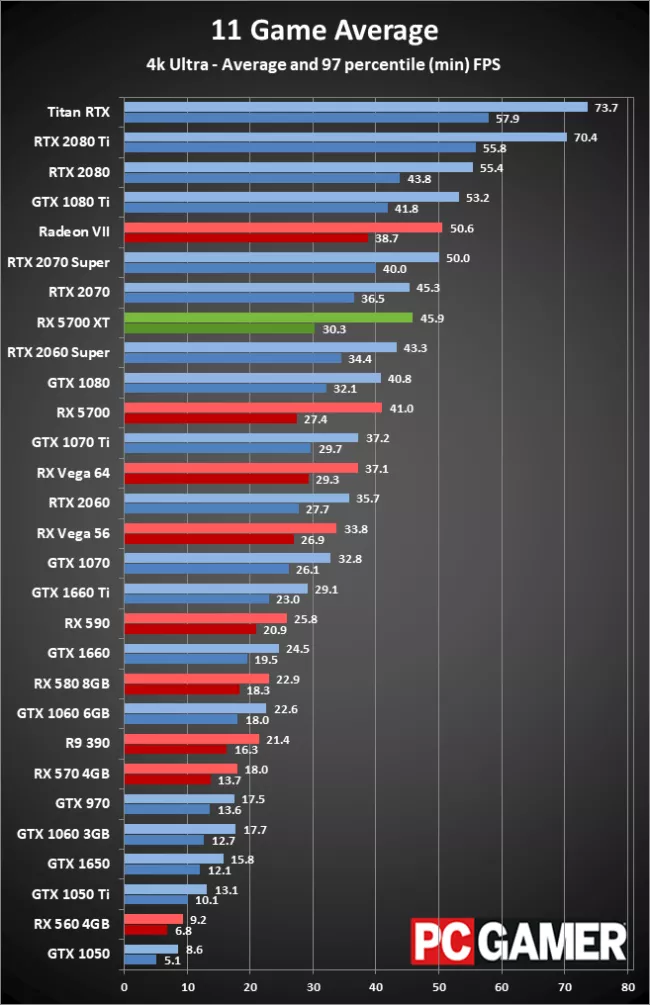

Man this sucks, and I hate to pile on! But the new AMD drivers came out and 2 games that were working just fine started failing to launch. This happens with every beta driver. I went back to last month's drivers and it is all fine. This happens with every new driver. Why is that? I am serious, this is real-world experience. If you have an Adrenalin driver that works, do not update until at least 2 new versions are released.

I've been a proponent of AMD drivers in the past, but I got bit by them this weekend on my 5700 XT.

Got a new monitor, swapped it out, and was stuck at 60Hz. Thought the monitor was broken at first (it's 165Hz capable), but an AMD driver update fixed it.

Then I was getting crashes after about 5 or 10 minutes in several games. Had to do a DDU safe mode uninstall and reinstall (of the same newest driver).

Now things are working fine, but it wasn't the best experience.
