erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,890

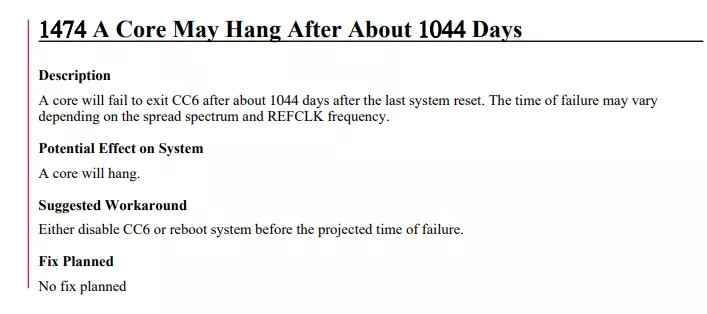

“A clock timer bug brings second-gen EPYCs to a halt.”

“And then there are the folks that just want to join the uptime club and set a record. To do that, you have to beat the computer onboard the Voyager 2 spacecraft. Yeah, the one that was the second to enter interstellar space. That computer has been running for 16,735 days (45+ years), and counting.

For terrestrial records, 6,014 days (16 years) seems to be the record for a server, but I've seen plenty of debate over other contenders for the crown. (The small r/uptimeporn Reddit community has plenty of examples of extended uptimes.)

In either case, you won't get to break that type of record with any of the EPYC Rome chips -- this errata will not be fixed, so not all your cores will exceed the 1,044-day threshold by much under any circumstances. AMD's note says it won't fix the issue — perhaps the company decided the issue is too costly to fix in silicon, or a microcode/firmware fix has too much performance overhead, or maybe there simply aren't enough impacted customers to make the fix worthwhile.

In either case, disabling the server's CC6 sleep state will help you sleep at night, or you could just make sure to reboot every 1,000 days or so.”

Source: https://www.tomshardware.com/news/amds-epyc-rome-chips-could-hang-after-1044-days-of-uptime
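For anyone who wants to act on the "reboot every 1,000 days or so" advice, here is a rough Python sketch of an uptime check. It assumes a Linux box where `/proc/uptime` is available. The 44-day safety margin and the function names are my own placeholders, not anything from AMD or the article.

```python
from pathlib import Path

HANG_THRESHOLD_DAYS = 1044  # figure quoted in the article
REBOOT_MARGIN_DAYS = 44     # hypothetical safety margin, i.e. reboot by ~1,000 days
SECONDS_PER_DAY = 86400


def uptime_days(proc_uptime_text: str) -> float:
    """Convert the first field of /proc/uptime (seconds since boot) to days."""
    seconds = float(proc_uptime_text.split()[0])
    return seconds / SECONDS_PER_DAY


def days_until_reboot(days_up: float) -> float:
    """Days left before the self-imposed reboot deadline (negative if overdue)."""
    return (HANG_THRESHOLD_DAYS - REBOOT_MARGIN_DAYS) - days_up


if __name__ == "__main__":
    proc_uptime = Path("/proc/uptime")
    if proc_uptime.exists():  # Linux only; other systems are skipped
        up = uptime_days(proc_uptime.read_text())
        left = days_until_reboot(up)
        print(f"Up {up:.1f} days, {left:.1f} days until the planned reboot window")
    else:
        print("/proc/uptime not found; this sketch only covers Linux")
```

Something like this could run from cron and feed whatever alerting you already have. It only tracks the calendar side of the workaround and does nothing about CC6 itself.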
