cageymaru

GTX 1060 vs. RX 480 - An Updated Review.

http://www.hardwarecanucks.com/foru.../73945-gtx-1060-vs-rx-480-updated-review.html

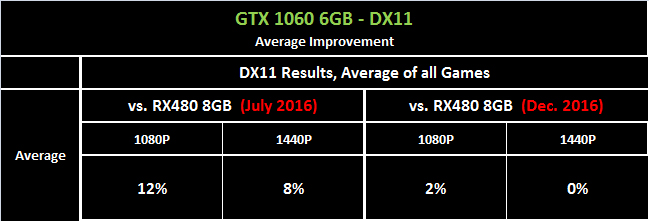

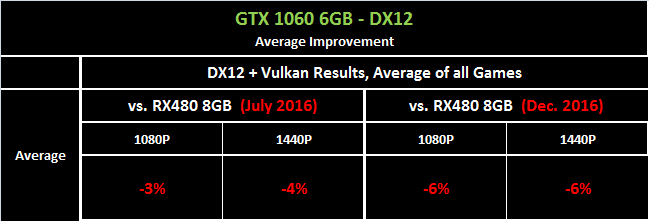

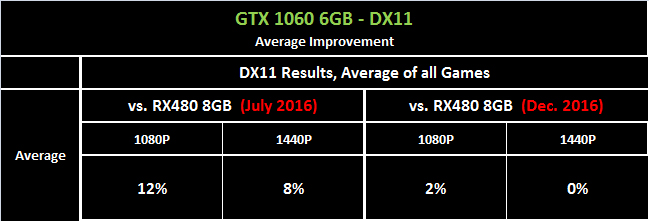

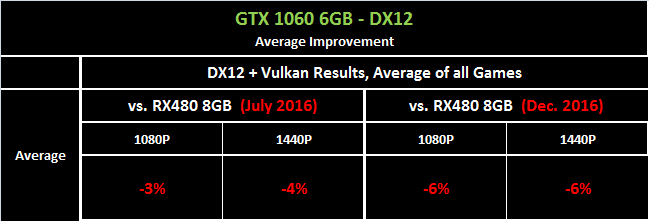

Hardware Canucks tested reference and OC'd variants of the AMD RX 480 and GTX 1060 in a head-to-head showdown at 1080p and 1440p. They tested a TON of games to see how the drivers for these cards have improved since launch. If nothing else, you should be able to look up your favorite games and see which of these cards is faster in the games that YOU play.

The GTX 1060 6GB versus RX 480 8GB saga obviously doesn't end here, and if the last few months are anything to go by, these cards will be fighting tooth and nail until the day they're replaced. What AMD has accomplished between Polaris' initial rollout and now is impressive, to say the least, but their board partners have given the RX 480 a slight premium above its $240 launch price. NVIDIA, on the other hand, is hanging on doggedly, and their board partners have responded by lowering the GTX 1060's entry price. This has turned what should have been a runaway AMD win into a tit-for-tat situation.

So which one of these would I buy? That will likely boil down to whatever is on sale at a given time, but I'll step right into it and say the RX 480 8GB. Not only has AMD proven they can match NVIDIA's much-vaunted driver rollouts, but through a succession of key updates they have made their card an evenly matched contender in DX11 and a runaway hit in DX12. That's hard to argue against.
