AMD vs nVidia Colors - Concrete proof of differences

- Thread starter erek

- Start date

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

But that is the only thing you can garner from this thread/poll.

Simple human bias.

Not quite, since there is no 100% assurance that everything will be the same.

GoodBoy

2[H]4U

- Joined

- Nov 29, 2004

- Messages

- 2,768

Albert Einstein said: Stay away from negative people. They have a problem for every solution.

HumanBias

Weaksauce

- Joined

- Dec 13, 2022

- Messages

- 121

Your bias is noted loud and clear.

But your subjective view/deflection of the data has no objective value; it only displays that you have bias.

There is a reason a video nullifies a subjective testimony: a video is objective data, while a testimony is a subjective memory prone to bias/flaws.

There is objective measured data showing parity, which invalidates your subjective bias in plenum.

Arguing against data with no data, only your personal biased view, is like calling a circle square and insisting your view is correct while ignoring reality.

The circle is round, not square, no matter what you believe.

The past dozen posts are amusing because technology (quite impartial colorimeters) has been able to replicate the exact same color output on the same monitor from both AMD and nvidia cards across many generations. All they see is RGB; they could care jack all about nvidia drivers and AMD ones, and simply adjust all relevant image settings to get a desired output based on the user preference. From my experience in messing with the control panels of both nvidia and AMD, there's what I think colors "should" be, then there's colorimeter software and hardware that says your eyes are bunk.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

LOL! I know what I saw with the cards that I had and the reality was there with all my eyes to see.

Oh, and we all have biases but that does not change what is, regardless. I know for a fact what I saw with the 980Ti, R9 290 and R9 Furies on the Samsung 4k 28 inch monitor, using display port and that was that. If the 980Ti was not washed out, I would have stuck with it but it was and nothing changed that fact. (EVGA 980 Ti)

HumanBias

Weaksauce

- Joined

- Dec 13, 2022

- Messages

- 121

> LOL! I know what I saw with the cards that I had and the reality was there with all my eyes to see.
>
> The fact that things are not 100% either or in the IT field is exactly why I have been successful in it.
>
> My eyes tell me exactly what I need to know and are not bunk, at all. After all, this equipment you speak of was created by man who, wait for it, had to use their eyes to determine the accuracy of that equipment.
>
> Oh, and we all have biases but that does not change what is, regardless. I know for a fact what I saw with the 980Ti, R9 290 and R9 Furies on the Samsung 4k 28 inch monitor, using display port and that was that. If the 980Ti was not washed out, I would have stuck with it but it was and nothing changed that fact. (EVGA 980 Ti)

Your bias is noted but that does not alter measurements done with EODIS3.

Your bias has no implications for reality; again, it only speaks to your personal bias.

There are people who claim all sorts of things against reality, but that does not mean those claims have any value, especially when they oppose data.

Unless you can bring verifiable data to the table, all your words are just confirmation of why objective data is better than subjective opinions.

Your belief < objective data, no matter the spin.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

That card and monitor combination is long gone. However, I had no confirmation bias when it came to that setup: the Nvidia card looked washed out and the AMD cards did not, and after 6 months, nothing changed that. The fact that you are unwilling to acknowledge that fact shows a significant bias on your part, in my opinion.

HumanBias

Weaksauce

- Joined

- Dec 13, 2022

- Messages

- 121

Your bias is noted but that changes nothing.

The only place we still observe claims like the ones you assert is in hardware forums, not in the publishing/video/gaming industry.

If vendor A had a clear, objective, verifiable advantage over vendor B, it would be used/mentioned/displayed in PR material.

Even the poll would show this, but it doesn't.

The poster who made a humorous remark about "flat earth" actually summed up this thread, whether intentionally or not:

You are the "flat earther" speaking against reality without any verifiable data in disceptatio.

0 = 0

1 = 1

0 ≠ 1

1 ≠ 0

No more simplified way of communicating this.

I will not respond further to your flawed subjective bias unless you back it up with objective verifiable data, in the same manner as I do not respond to "flat earthers" without objective verifiable data.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

And your inability to see through your own biases and possibly admit that you could simply be wrong, in this instance, shows that you are not as experienced in the IT field as you think you are. I have repeatedly described the circumstances of my case: the hardware used and what occurred. You look at that as a possible affront to what you think, in my opinion, and because of that one simple bias, cannot possibly see that in this instance, I am right.

Or, put your money where your mouth is, like I did. I spent $650 on that 980Ti and almost bought a second one for SLI support. However, I instead sold it for half of what I bought it for, since the 1080 was out by that point, and bought a Sapphire R9 Fury (Non X) and had no issues with washed out colors anymore on that monitor.

Essentially, the fact that examples go against what is considered the norm does not negate those examples, at all.

> Not clicking the link. However, for my own personal experiences, over the years, AMD has far better color appearances than Nvidia has, on the same monitor and different graphics cards. If you do not agree with me, I do not care, I know what my eyes tell me and that is all that matters to me.

Agreed. I prefer AMD cards for their colors. I thought I was crazy when I used to say it, most people are kind of color blind and can't tell the difference, but man... I can. I also think Nvidia gets higher FPS because they cap image quality and they've been doing it for years.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,114

You've always had the option to change that.

> You've always had the option to change that.

I know. Fact is that, even after changing it all, video quality is still poorer on the Nvidia side, no matter what.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

For whatever reason, I experienced the same on the last Nvidia card I had, as well. However, with the combinations of cards and monitors available, some monitors may work better with AMD and others with Nvidia. We know for certain that a monitor's output is not the same across the board, even with tweaking.

> For whatever reason, I experienced the same on the last Nvidia card I had, as well. However, with the combinations of cards and monitors available, some monitors may work better with AMD and others with Nvidia. We know for certain that a monitor's output is not the same across the board, even with tweaking.

I have 3 monitors: Asus 27" EHE, LG 22" EA53 and Acer 27" VAH. On all of them (note that they're completely different), AMD cards deliver the best colors for videos compared with what Nvidia and Intel do. The latter is actually even worse than Nvidia in this matter. The image looks more vibrant, colorful and rich with AMD. Idk, I may be crazy, but I notice a visible quality difference in favor of AMD.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

Before someone comes in and says this, your experiences are not indicative of overall experiences.

HumanBias

Weaksauce

- Joined

- Dec 13, 2022

- Messages

- 121

Human bias is a powerful thing.

Most of the time it is quite benign and concerns personal views.

"I like coffee"

"I like my coffee with milk"

"I like rock music"

"I like the color green"

Those are subjective views where there are no objective truths.

Where human bias becomes an issue is when subjective views, not supported by objective data or in conflict with objective data, are presented as "facts".

That human bias is more problematic and can be divided into a lot of categories, but most of us have encountered some of these at one point in time:

- Flat earthers

- Moon hoaxers

- Anti vaxxers

The above are people impacted by Dunning-Kruger, discarding objective verifiable data and substituting it with their own human bias.

In their perception, they hold the "truth" and the rest of the human race (and science/data) is wrong.

The objective verifiable data is all part of some bigger conspiracy, and if you do not adhere to their subjective human bias you are either not very intelligent or you are part of the conspiracy.

Research shows that the human mind, our perception and our memories are very susceptible to flaws/errors.

That is why even if 20 witnesses to a crime say one thing, but a single video shows another thing, we discard those 20 testimonies, because humans are prone to flaws/errors while the video is an objective verifiable fact.

The number of people saying "I know what I saw!" is irrelevant.

People claim to see UFOs (in this context extra-terrestrial craft) all the time.

When presented with a video of the blimp that was observed, they will refuse it.

"I know what I saw!"

"I know what a blimp looks like and this was not it!"

"The video is a fake!"

Their human bias blinds their view of reality.

No amount of evidence/data will alter their human bias because, in their own view, they do not make mistakes.

They are infallible in their mind.

The data is wrong.

They "know" what they saw, despite the opposing data.

The question then becomes how much time and effort should be wasted trying to guide such entities back into the realm of reality?

I am reminded of the following quote:

"That which can be asserted without evidence, can be dismissed without evidence." - Christopher Hitchens

So we are left with two options.

Option A:

Screen calibration devices are flawed and present false data, 99%+ of users in the publishing/video/gaming sectors are unable to perceive colors and the industry standards are wrong, but a very small subset of users have "supervision" that negates everything else.

Option B: A very, very, very small subset of users are suffering from human bias.

There's a C option: standards are overrated.

It's a business and as such, all that matters is profiting for survival. They actually can cap quality to the point where ordinary people won't notice the difference, as there's no standard human being, let alone human sense. Some people perceive colors differently because they have 4 types of color receptors in the eyes, and some others because they have a different brain tuning and therefore can see what others cannot. It doesn't mean the difference is not there, it's just that common people are not capable of seeing it. Same thing happens with vinyl discs and CDs: some will tell the difference in sound when most people will not, but it doesn't mean there's absolutely no difference between them. There actually is and we all know that.

Just because one can see what others cannot doesn't mean one is wrong and the others are right.

HumanBias

Weaksauce

- Joined

- Dec 13, 2022

- Messages

- 121

If hardware (objective verifiable data) says your eyes (subjective bias) are wrong, your eyes are wrong.

Again:

"That which can be asserted without evidence, can be dismissed without evidence." - Christopher Hitchens

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

The challenge is that you are using societal and cultural dictates to claim biases instead of seeing things as they are, on an individual basis and point of view. The difference between you and me is that you are willing to march in lockstep with whatever you are told to think, while I am willing to think as an individual with my own point of view. Essentially, you are the only one here taking things out of context and attempting to make comparisons where there are none to make.

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,552

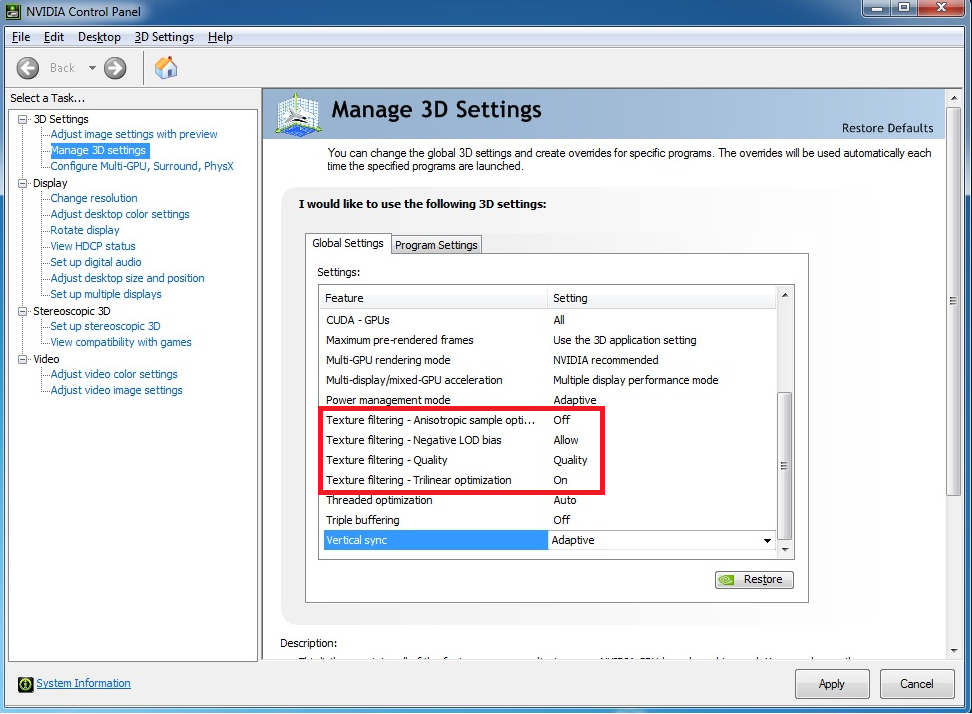

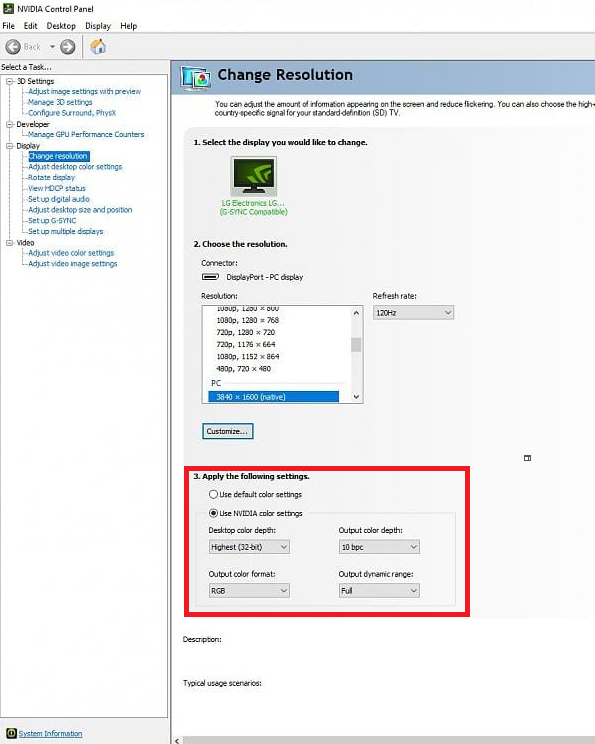

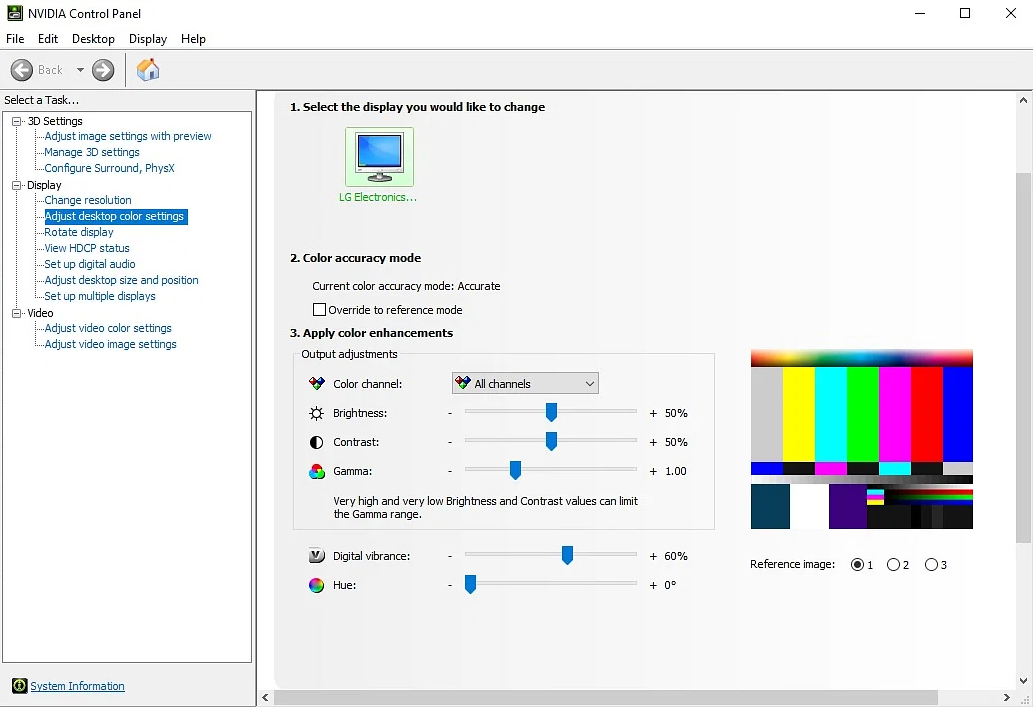

My XGI Volari V8 Duo has better colors than both.

Every time you say that no amount of tweaking changed anything, it further emphasises that you didn't know what the hell you were doing. You see, what you say is actually impossible. You do realise that? If you really are an IT professional then you would understand how foolish your comments are. All GPUs can be tweaked to output exactly the way you want. You can set the brightness, contrast and gamma for each individual colour as well as all the colours combined. You can change the vibrance and hue, and you can change the colour space and range used. And you are trying to convince me that you couldn't get them to look the same? LOL Seriously dude? It's not magic. AMD doesn't have some secret tech that makes their cards output in different colours than Nvidia. They all use the same standard, they have to. Any colour that an AMD card can output, an Nvidia card can output. Don't you get that?

This myth that one GPU manufacturer has better colours/picture quality has been perpetuated by people who are either clueless or have an agenda to defend their favourite brand. It has been debunked so many times. Can they look slightly different even on the same monitor if you switch between them? Yes they can, due to not reading the monitor's EDID correctly, applying the wrong colour space, etc. But the chief cause of washed out looking colours in GPUs is a mismatch in ranges between the display and the GPU: either the display is on full range and the GPU is limited, or vice versa.

You didn't change the output range from limited to full in the Nvidia control panel. Your problem was as simple as that.
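To show why a range mismatch looks "washed out", here is a minimal sketch of the 8-bit arithmetic, assuming the usual limited range of 16-235 and full range of 0-255. The function names are made up for illustration and are not taken from any driver or control panel.

```python
def full_to_limited(v: int) -> int:
    """Compress a full-range 8-bit value (0-255) into limited range (16-235)."""
    return round(16 + v * 219 / 255)


def limited_to_full(v: int) -> int:
    """Expand a limited-range value (16-235) to full range, clipping anything outside."""
    return max(0, min(255, round((v - 16) * 255 / 219)))


# Case 1: GPU outputs limited range, display expects full range and shows values as-is.
# Black arrives as 16 (dark gray) and white as 235 (dull white): the washed out look.
washed_out_black = full_to_limited(0)
washed_out_white = full_to_limited(255)

# Case 2: GPU outputs full range, display expects limited range and expands it.
# Dark and bright details get clipped: crushed blacks and blown-out whites.
crushed_shadow = limited_to_full(10)
clipped_highlight = limited_to_full(240)

print(washed_out_black, washed_out_white, crushed_shadow, clipped_highlight)
```

When both ends agree on the range, the round trip is close to lossless (for example 128 maps to 126 and back to 128), which is why matching the setting on the GPU and the display removes the difference.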

Trolling much, guys? You are entitled to your experience, but that doesn't make it fact. Any monitor externally calibrated with a colorimeter will output the same image on both nvidia and amd hardware. Neither is better or worse than the other. To anyone reading this thread: out of the box, it's a toss-up which monitor/video card combo will come out looking more accurate. The only way to keep your experience consistent when changing screens and video cards between brands is to get an external calibration tool like a Datacolor Spyder or similar device.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

There is no trolling, and you are not entirely correct, since different hardware combinations will most definitely be different. Even using a calibration tool is not going to guarantee the same output, unless you are talking about super high end monitors and professional level graphics cards, and even then they are not going to be 100% the same. It has little to nothing to do with being entitled to the experience; it is simply a fact of what the results were, whether you accept those results or not. In my experience, it is mostly Nvidia users who are touting that it is all the same output, that there cannot be any differences, and that if you say there are, you cannot prove it and you are wrong.

The majority of users will find that with an external device they will be able to get to a point where the images are near indistinguishable. Nothing useful in outright saying my recommendation is wrong. Blindly saying amd is better based on a single video card experience is far less helpful.

Since you like touting experience, I'll tout mine as a system administrator who has calibrated almost 1000 monitors of various models, on nvidia and amd video cards across 5 generations, for forensic investigators examining images. We all have our own user experience and mine is just as valid as your single nvidia experience all those years ago, so in order to make experiences as consistent as possible across many individuals, the best way is to get some external device to ensure the results are as similar as possible. I personally am running both an nvidia and an amd gpu in multiple systems at home.
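For anyone who wants to put a number on "near indistinguishable": a minimal sketch, assuming you have CIELAB readings of the same test patch taken with a colorimeter on each card. The readings below are made-up placeholders, not measurements, and CIE76 is just the simplest ΔE formula; calibration software often uses newer ones such as CIEDE2000.

```python
import math


def delta_e_cie76(lab_a, lab_b):
    """CIE76 color difference: Euclidean distance between two (L*, a*, b*) triples."""
    return math.dist(lab_a, lab_b)


# Hypothetical readings of one gray patch on the same monitor (placeholder values).
reading_card_1 = (50.0, 2.0, -3.0)
reading_card_2 = (50.5, 2.0, -3.0)

difference = delta_e_cie76(reading_card_1, reading_card_2)
print(f"Delta E (CIE76): {difference:.2f}")
```

A ΔE of around 1 is often quoted as a rough rule of thumb for a barely noticeable difference, so comparing patch by patch like this gives an objective way to check whether two setups really differ.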

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

> There's a C option: standards are overrated.
>
> It's a business and as such, all that matters is profiting for survival. They actually can cap quality to the point where ordinary people won't notice the difference, as there's no standard human being, let alone human sense. Some people perceive colors differently because they have 4 types of color receptors in the eyes, and some others because they have a different brain tuning and therefore can see what others cannot. It doesn't mean the difference is not there, it's just that common people are not capable of seeing it.

Fourth photo-receptor in the eye cannot be affected by GPU though.

Different screens do however stimulate rods differently, making even perfectly calibrated displays with the same panel type (e.g. IPS vs IPS) and same contrast (which can be calibrated) look different.

To me it kinda looks like they made standards to be about three quarters there... well, at least the artificial look of bright objects on a monitor, which does not stimulate rods as much as real life objects would, looks nice, even if it looks completely unrealistic.

> Same thing happens with vinyl discs and CDs: some will tell the difference in sound when most people will not, but it doesn't mean there's absolutely no difference between them. There actually is and we all know that.
>
> Just because one can see what others cannot doesn't mean one is wrong and the others are right.

Digital 16-bit at 44.1 kHz is objectively superior to vinyl. Case closed.
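For reference, the theoretical numbers behind the 16-bit / 44.1 kHz figure can be sketched as below. This only covers the ideal digital side (the Nyquist limit and the quantization signal-to-noise ratio for a full-scale sine wave); it assumes ideal converters and says nothing measured about any particular vinyl setup.

```python
import math


def nyquist_hz(sample_rate_hz: float) -> float:
    """Highest frequency that can be represented at a given sample rate."""
    return sample_rate_hz / 2


def ideal_sqnr_db(bits: int) -> float:
    """Ideal quantization SNR for a full-scale sine: 20*log10(2**bits) + 1.76 dB."""
    return 20 * math.log10(2 ** bits) + 1.76


cd_bandwidth = nyquist_hz(44_100)   # upper frequency limit for CD audio
cd_sqnr = ideal_sqnr_db(16)         # theoretical figure for 16-bit samples

print(f"Nyquist limit: {cd_bandwidth:.0f} Hz")
print(f"Ideal 16-bit SQNR: {cd_sqnr:.2f} dB")
```

The Nyquist limit sits just above the commonly cited 20 kHz upper bound of human hearing, and the ideal 16-bit figure works out to roughly 98 dB.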

Xar

Limp Gawd

- Joined

- Dec 15, 2022

- Messages

- 227

> Opinion on the qualitative rendering differences between AMD and NVIDIA (beyond frame times even)?
>
> View attachment 532533
>
> View attachment 532534
>
> https://www.reddit.com/r/Amd/comments/n8uwqk/amd_vs_nvidia_colors_concrete_proof_of_differences/

IIRC, colour and image/picture quality arguments started in 1997 and ended around 2011-2012, when most cards were equipped with DP and HDMI instead of the old DVI and VGA. More or less, I think visual differences still exist between both vendors to this day, even with the recent Adrenalin, GRD, FirePro/RadeonPRO, and Quadro/RTX Axxx. We just never got to the bottom of it because no one seems to care enough about the subject to dig into it or test it. I too was one of the majority who preferred FPS >>> IQ/color quality. That is probably the reason why DLSS & FSR are so popular these days: small visual compromises for many more frames.

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,894

I prefer IQ and without compromising

Domingo

Fully [H]

- Joined

- Jul 30, 2004

- Messages

- 22,640

After every damn driver update my wife's Nvidia driver sets things to the wrong color settings in the control panel. I'll see her playing something out of the corner of my eye and all the blacks are a weird shade of gray.

Xar

Limp Gawd

- Joined

- Dec 15, 2022

- Messages

- 227

No Dithering is another issue too.

I had a weird issue with a set of nvidia drivers (can't recall the version) which killed my color profile setup. It would "forget" my color profile after going to sleep, going to the lock screen, or randomly after a game. I'd have to manually turn it on in the display settings color profile again.

I tried clean uninstall/reinstall of drivers, reinstall my calibration software, nothing. Ended up having to wipe the windows install and then all the settings stuck fine like before. Knock on wood, it's been good for a year, but that time when it broke was weird. Note that across driver upgrades, the windows calibrated color profile should not change unless the drivers make serious changes to how the gpu outputs colors which both amd and nvidia have been good with for the last 3 generations.

The fact that things are not 100% either or in the IT field is exactly why I have been successful in it.

Seriously? Are you still pretending to be an IT professional? And you come out with a statement like this

After all, this equipment you speak of was created by man who, wait for it, had to use their eyes to determine the accuracy of that equipment.

> I prefer IQ and without compromising

What is even happening in this thread?

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

> I had a weird issue with a set of Nvidia drivers (can't recall the version) that killed my color profile setup. It would "forget" my color profile after going to sleep, going to the lock screen, or randomly after a game. I'd have to manually turn it back on in the display settings color profile.

> I tried a clean uninstall/reinstall of the drivers and reinstalling my calibration software, nothing. Ended up having to wipe the Windows install, and then all the settings stuck fine like before. Knock on wood, it's been good for a year, but that time when it broke was weird. Note that across driver upgrades, the Windows calibrated color profile should not change unless the drivers make serious changes to how the GPU outputs colors, which both AMD and Nvidia have been good about for the last 3 generations.

There is a setting in the Nvidia control panel called "Override to reference mode" that prevents programs from changing the LUT. Maybe you clicked it?

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

> Seriously? Are you still pretending to be an IT professional? And you come out with a statement like this

You can be an "IT professional" when you do any IT activity for money, e.g. installing Windows. Extensive computer knowledge is not needed to be "professional", just like no extensive knowledge is needed to be professional in most fields. You just need to be really good at what you do.

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,534

> Seriously? Are you still pretending to be an IT professional? And you come out with a statement like this

How can you argue with that? lol you gotta love it sometimes.

> There is a setting in the Nvidia control panel called "Override to reference mode" that prevents programs from changing the LUT. Maybe you clicked it?

I did DDU uninstalls and moved on to newer driver versions, but Windows just refused to keep the color profiles. I have not encountered the problem since the last reinstall. Nvidia drivers have also moved from 3xx to 5xx since that happened.

ManofGod

[H]F Junkie

- Joined

- Oct 4, 2007

- Messages

- 12,864

> You can be an "IT professional" when you do any IT activity for money, e.g. installing Windows. Extensive computer knowledge is not needed to be "professional", just like no extensive knowledge is needed to be professional in most fields. You just need to be really good at what you do.

True, and further, I have been doing IT professionally for 23 years and know my stuff. (Which is to say, not everything, but I do know my stuff.) The point I was making is that I know how to follow directions and can even go further than them when needed, so I made the changes and nothing changed on my previous setup.

Xar

Limp Gawd

- Joined

- Dec 15, 2022

- Messages

- 227

For those seeking more info on this subject, just google “NVIDIA vs AMD Color Quality” and “NVIDIA vs AMD Image Quality”. Read every thread, forum, website, and comment you’re able to find.

To truly put an end to this 30-year-old doubt/argument once and for all, we’re gonna need side-by-side comparison videos and screenshots between RTX 4090 & 7900 XTX on the same modern 4K OLED monitor, with the same DP/HDMI cable, same monitor settings, current drivers with matching driver settings, same game, and same in-game settings. Let’s see if there are still any noticeable visual differences after all.
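
For the screenshot half of a comparison like that, eyeballing two images side by side invites the same bias the thread keeps arguing about, so a per-pixel diff is one way to put a number on it. Below is a minimal sketch in Python, assuming both captures have already been decoded into equal-length lists of 8-bit (R, G, B) tuples; `compare_frames` and the tiny sample frames are hypothetical names and data made up for illustration, not measurements from either card. One caveat: a screenshot is read back from the framebuffer, so it may not reflect what the card actually sends over the cable (output range, dithering), which is where a capture card or colorimeter would come in.

```python
from typing import Dict, List, Tuple

Pixel = Tuple[int, int, int]  # 8-bit (R, G, B)


def compare_frames(a: List[Pixel], b: List[Pixel]) -> Dict[str, float]:
    """Compare two same-sized frames pixel by pixel.

    Returns the largest per-channel difference found and the
    fraction of pixels that differ at all.
    """
    if len(a) != len(b):
        raise ValueError("frames must have the same number of pixels")
    if not a:
        raise ValueError("frames must not be empty")

    max_delta = 0
    differing = 0
    for pa, pb in zip(a, b):
        # Largest absolute difference across the R, G, B channels.
        delta = max(abs(ca - cb) for ca, cb in zip(pa, pb))
        if delta:
            differing += 1
        max_delta = max(max_delta, delta)

    return {
        "max_delta": max_delta,
        "differing_fraction": differing / len(a),
    }


# Hypothetical 2x2 captures, flattened row by row (illustrative data only).
frame_a = [(0, 0, 0), (255, 255, 255), (128, 64, 32), (10, 20, 30)]
frame_b = [(0, 0, 0), (255, 255, 255), (128, 64, 33), (10, 20, 30)]

print(compare_frames(frame_a, frame_b))
```

If both numbers come back as zero on identical scenes and settings, the rendered frames match and any remaining difference would have to come from the output stage or the display.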
