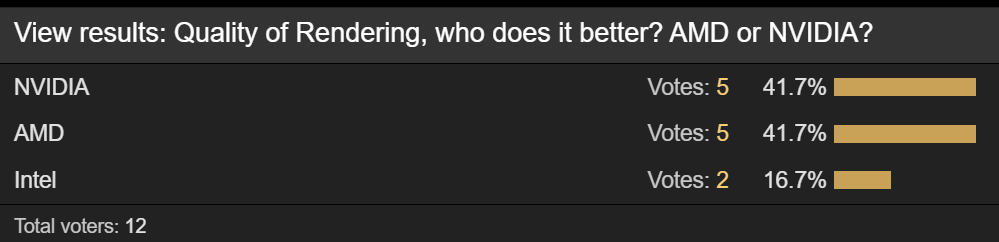

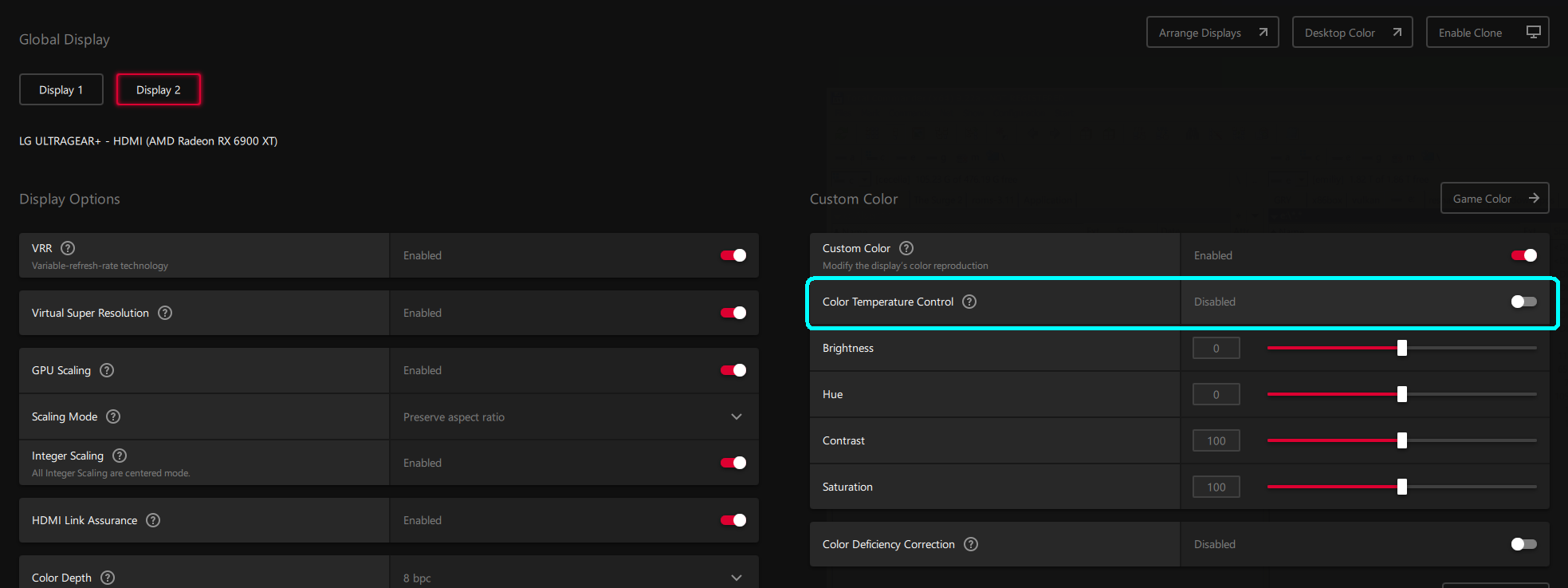

So that doesn’t count. RAMDAC mattered back when we were sending analog signals out; we’re not anymore. Now we’re theoretically sending 100% digital; it either arrives intact or it doesn’t (moderate simplification). Back then, yes - ATi had a better RAMDAC (as did Matrox) and did a better job converting to analog, much like a better modern DAC for headphones would.

From a Guru3D article on the GeForce RTX 4080:

View attachment 532789

https://www.guru3d.com/news-story/geforce-rtx-4080-spotted-in-usprices-starting-at-1199,13.html

It’s 2022. That point is 22+ years old now. We’re talking old as shit, unless you’re a CRT crazy.
