JustReason


http://www.bitsandchips.it/english/52-english-news/8604-amd-vision-in-one-patent-the-chiplet

The good news is that this is the entire article. Can't say I'm surprised, especially after ZEN and TR/EPYC. It does open up some near-limitless possibilities.

Zen has a modular (LEGO-like) design. AMD created Zen in order to have a malleable uArch: customers can add or remove SIMDs and instruction sets, customize the different levels of cache, etc.

But this is only the beginning of AMD's vision. We know that Apple, like other companies, uses SiP (System-in-Package) technology in some of its own products (e.g. the Apple S1), but AMD has gone further.

AMD's Chiplet seems to be an evolution of studies from the Palo Alto Research Center (PARC).

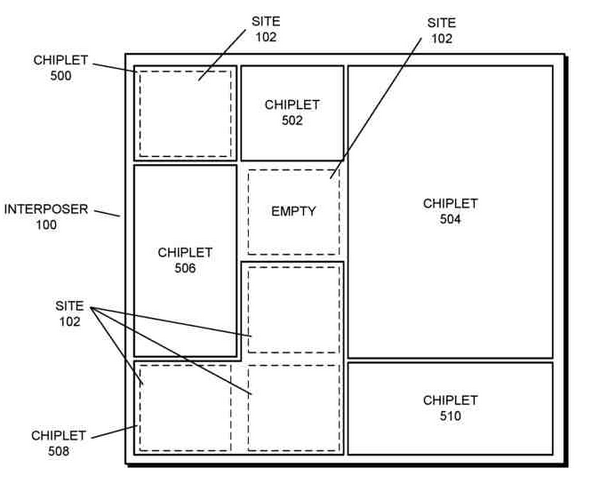

Chiplet is an evolution of how the Interposer is used today: the Interposer has a pattern of sites for mounting chiplets.

As we have seen so far, companies put a single GPU, CPU or FPGA on an Interposer with some memory chips (HMC or HBM). Thanks to Chiplet, we can mix different kinds of chips on the same Interposer: GPU, ASIC, CPU, FPGA, LTE modem, Ethernet CTRL, different memories (DDR4 or HBM), etc.

Chiplet could be a revolution because we can create a whole mainboard (Ethernet CTRL, USB CTRL, etc.) using a single CPU package!

