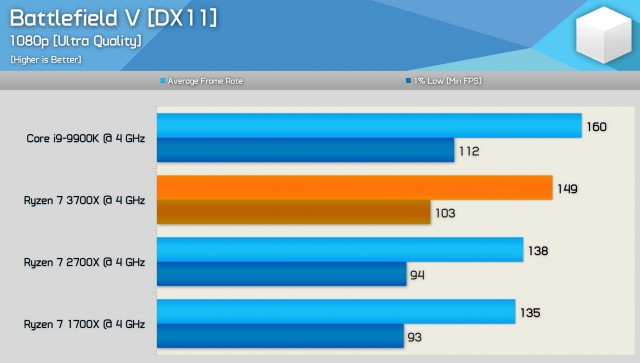

Do you do anything besides gaming at all (encoding, rendering, etc.)? What resolution do you mainly game at?

I meant another 5-6% on top of the 9900K's existing lead. Sorry if it was confusing.

Only gaming. 1440p at 60 fps, but it will be 1080p when the new games come out.
