I think people misunderstand this and what it truly means. The fact of the matter is that a performance uplift isn't free. It has to come from somewhere. You can't magically make a new architecture more efficient while also offering faster performance. Every once in a while a breakthrough leads to a major change where you do see something like that, and then that headroom gets eaten up over time by performance improvements to that architecture. Also keep in mind that this is going to be in heavily multi-threaded workloads, not gaming or general tasks. You aren't going to see those temperatures very often unless you use the machine for certain specific applications and that's all you do with it.

The sad thing is, for an average user / gamer such as myself, there is no point. If anything was PCIe 5, if DDR5 made any sense, if the CPUs didn't run 95°C BY DESIGN,

People that really push their existing systems or overclock are already used to seeing CPU temperatures in that range. It's not that big of a deal.

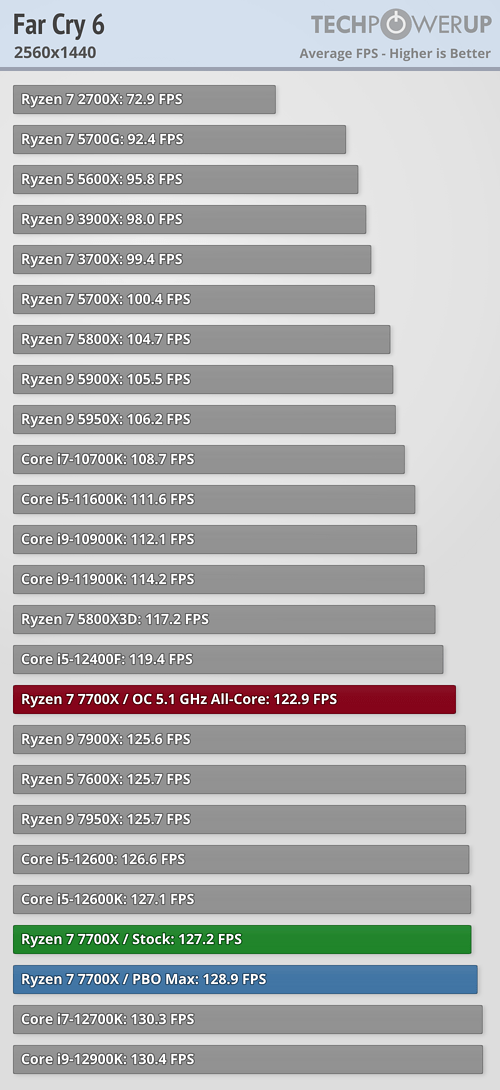

This is an ultra-competitive space now. AMD and Intel have been running their highest-end CPUs at the edge of what the silicon is capable of. That's why overclocking is virtually pointless on those CPUs. Any gains in efficiency will give way to making the CPUs more powerful. Any headroom they have will be used to outperform the competition. That's all AMD has done here. Get used to it, because this is the new norm unless some serious breakthrough in semiconductor engineering happens soon and is practical to implement for desktop and mobile systems.

AMD stated during the Ryzen 5000 series announcement that their GPUs would be the best on the planet. They were far from delivering on that statement.

Well, Raja has been long gone and is buggering up Arc nicely. The RX 6000 series was on target. I think it will remain consistent.
