sfsuphysics


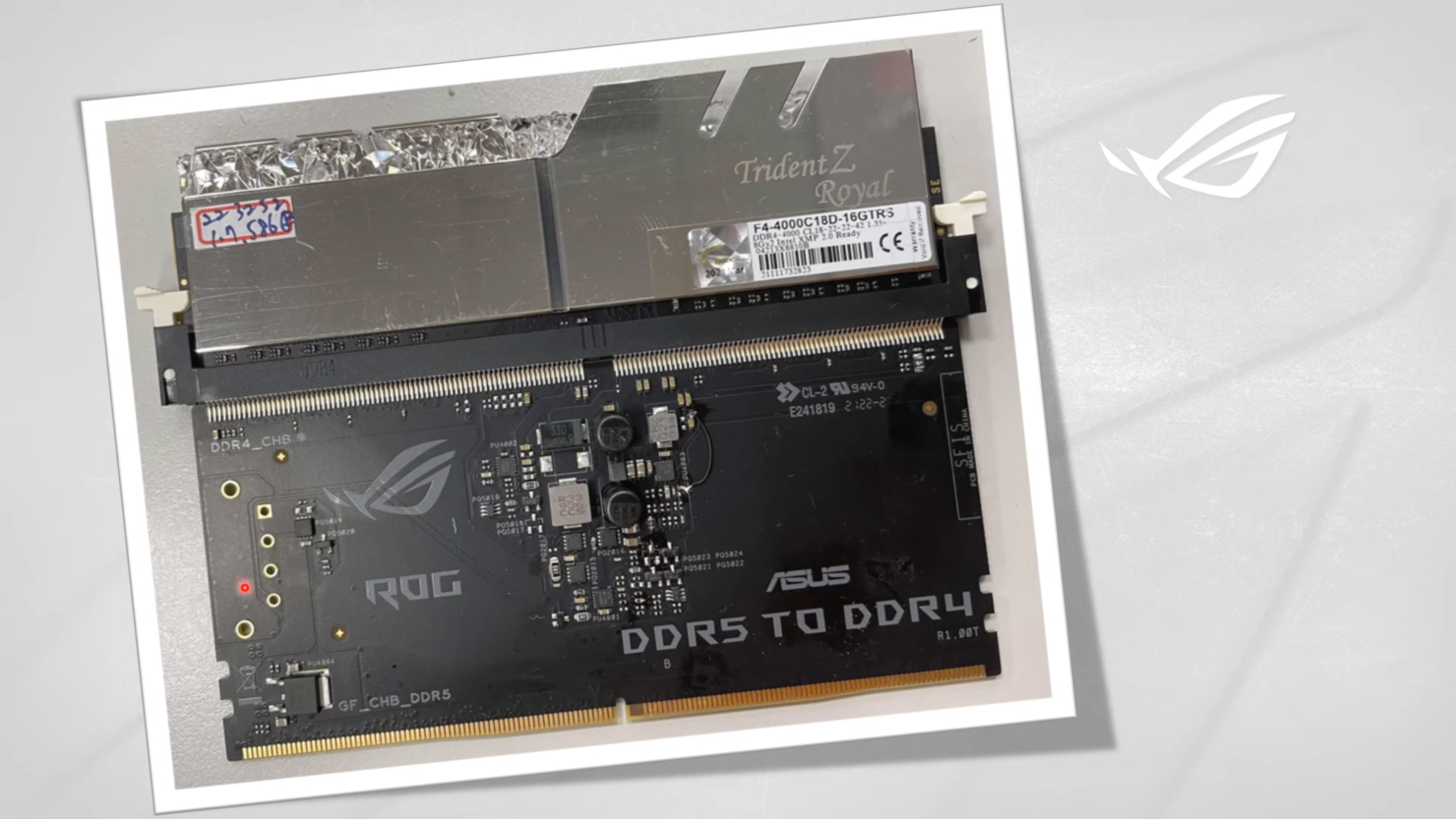

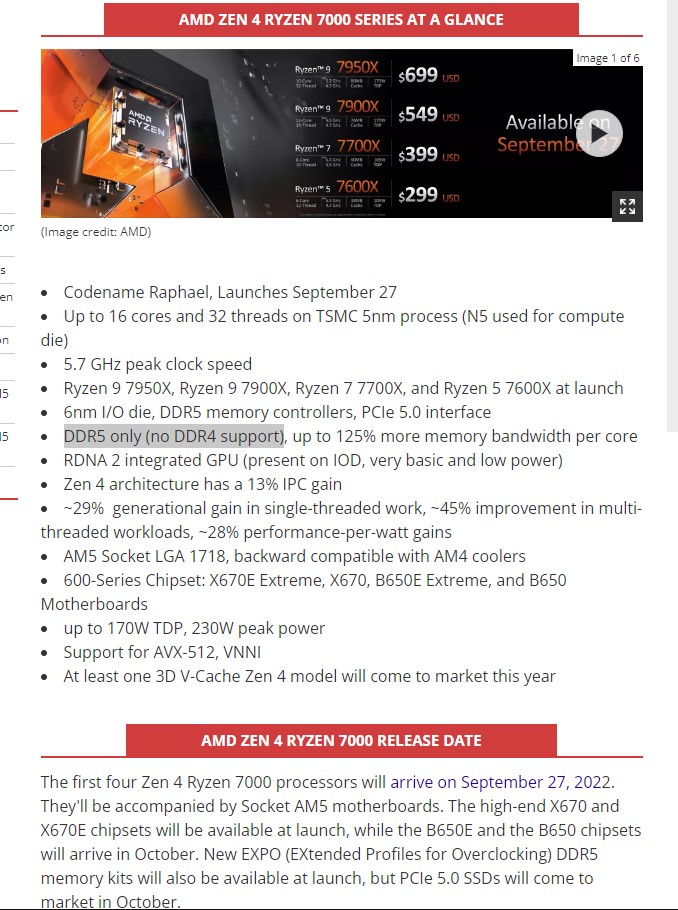

That's basically my question, so the short answer is maybe, but nothing beyond rumors. There have also been rumors of DDR4 AM5 boards.

Not so much that AM5 as a whole is DDR4, but rather that, say, "x640" MBs would be DDR4 while the rest would be DDR5 MBs.
