erek

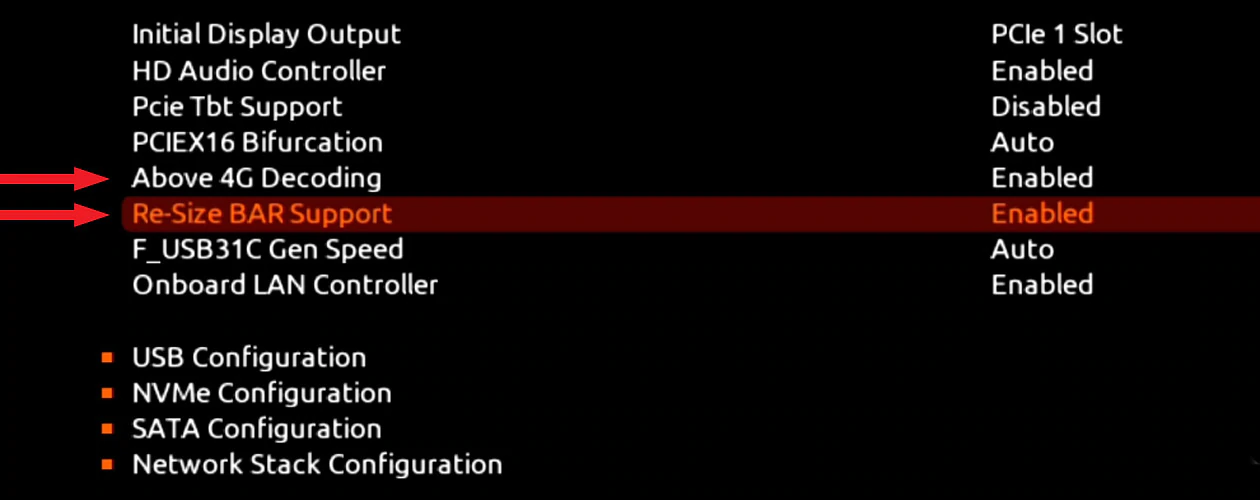

"It gets more interesting—Intel processors have been supporting this feature since the company's 4th Gen Core "Haswell," which introduced it with its 20-lane PCI-Express gen 3.0 root-complex. This means that every Intel processor dating back to 2014 can technically support Resizable-BAR, and it's just a matter of motherboard vendors releasing UEFI firmware updates for their products (i.e. Intel 8-series chipsets and later). AMD extensively advertises SAM as adding a 1-2% performance boost to Radeon RX 6800 series graphics cards. Since this is a PCI-SIG feature, NVIDIA plans to add support for it on some of its GPUs, too. Meanwhile, in addition to AMD 500-series chipsets, even certain Intel 400-series chipset motherboards started receiving Resizable BAR support through firmware updates."

https://www.techpowerup.com/275565/...mitation-intel-chips-since-haswell-support-it
