"As you might expect, the Nvidia RTX 2080 Ti was at the top, followed by the other top RTX cards: the 2080 Super, 2070 Super, 2060 Super, and 2060. But after that, AMD’s cards start nudging their way into the rankings, beating out capable last-generation GTX 10-series GPUs."

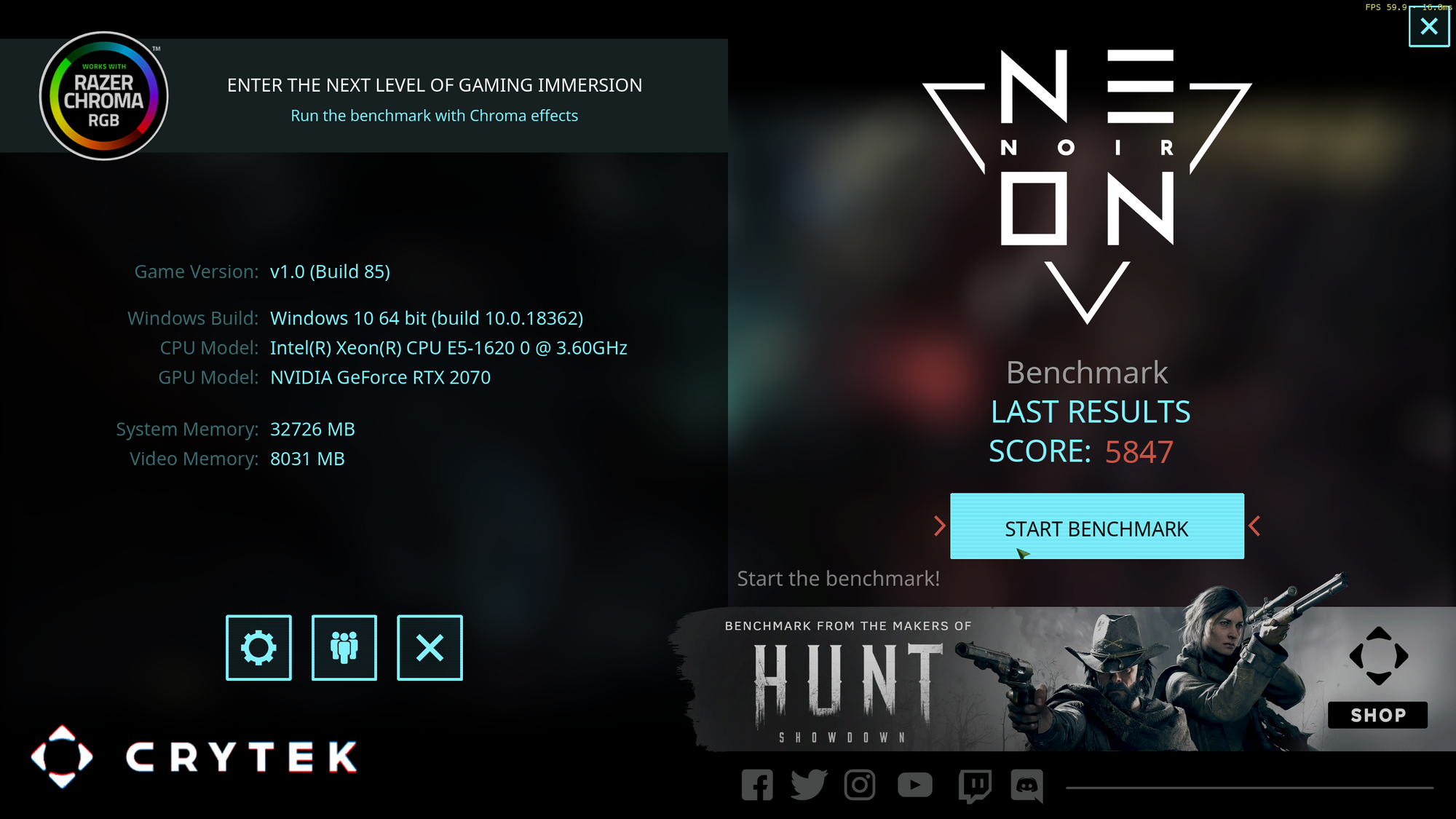

"The Neon Noir demo will be publicly available by the close of November 13, giving anyone who wants to a chance to try it out. It’ll be downloadable from Crytek’s Marketplace."

https://www.digitaltrends.com/computing/amd-rx-5700-xt-beast-gtx-1080-in-crytek-ray-tracing/