Chief Blur Buster

Owner of BlurBusters

- Joined

- Aug 18, 2017

- Messages

- 430

At sufficient frame rates, DLSS noise can be made to mostly disappear.

I use e-sport just to describe it. If you want you can see the noise in every game, if you don't care about it - never mind

But I can't accept buying an expensive video card that will lower quality in the games I play just to get a few more FPS - it's pointless for me.

So, it's a personal choice in the end

While we're nitpicking artifacts caused by frame rate amplification technologies such as DLSS/FSR/etc, I should comment in some technical depth on the "noise" issue...

When you run 240fps at 240Hz or 360fps at 360Hz, the DLSS noise cycles so rapidly that it becomes fainter than even DLP temporal dithering (which is binary on-off pixel cycling, combined with simple RGB color switching via a color wheel or color LED/laser cycling). Obviously, DLSS is often enabled mainly in ray-traced games like Cyberpunk 2077, where you're jumping from 30fps to 60fps and still seeing those DLSS artifacts. But try DLSS at 300fps, and it's a completely different ballgame.

Also keep in mind, some games inject GPU shader noise into the screen (like artificial analog film grain noise), which can cause DLSS quality to degrade somewhat, so that feature needs to be disabled in the game to greatly reduce the magnitude of DLSS quality degradation.

Games that use DLSS need to put postprocess filters (e.g. noise) after the DLSS processing stage to fix this degradation. This is much like how Netflix filters noise from original movies, compresses the movies, then re-adds algorithmically generated noise back to the films. It's a pretty impressive trick that Netflix does for compressing film grain noise better: exclude the noise from compression. Likewise, engines sometimes need to move some filters, such as film grain and other filters (like chromatic aberration filters or JJ Abrams cake frosting filters), downstream, *after* the DLSS processing. Not all games do this properly, so you get more DLSS degradation in some games than in others.

Spatial method of removing DLSS noise

Or, unconventionally, you can use a brute-force scaling trick to help filter DLSS noise: DLSS to a supersampled image (e.g. 4K), then downconvert it to 1080p for your 1080p screen. The downscaling blends the noise out. In some cases, this still increases frame rate above and beyond native 1080p rendering while looking better than native 1080p rendering. Perhaps this is not what you'd do for esports, but you'd do it when you want beautiful DLSS-accelerated supersampled AA (rendering graphics at a higher resolution than the monitor and downscaling to the monitor) as a method of compensating for and blending out the DLSS noise. Given sufficient frame rate amplification factors, it can be a technique for improving image quality at the same or slightly higher frame rate, with even less noise than the non-DLSS image, simply because of redirecting DLSS horsepower to brute supersampling instead. Supersampled AA is the best AA you can do, but it is a performance killer if you don't use DLSS to accelerate it. It's a blunt hammer, but some gamers do this to improve visuals in games like Cyberpunk 2077 by using DLSS+supersampling as the AA method. So that option exists: a hybrid technique of using DLSS to improve AA instead of improving frame rate.
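The averaging effect of the downscale can be sketched numerically. This is a toy model, not DLSS itself: it assumes the noise is independent per pixel with zero mean (real DLSS artifacts are correlated, so the real-world reduction is likely smaller), and it uses a plain 2x2 box filter as a stand-in for whatever downscaler the game or driver actually uses. All function names here are hypothetical.

```python
import random
import statistics


def noisy_image(width, height, true_value=0.5, noise_sigma=0.05, seed=1):
    """Flat grey image plus independent Gaussian noise per pixel (an assumption)."""
    rng = random.Random(seed)
    return [[true_value + rng.gauss(0.0, noise_sigma) for _ in range(width)]
            for _ in range(height)]


def box_downscale_2x(image):
    """Average each 2x2 block into one output pixel (the 4K -> 1080p ratio per axis)."""
    out = []
    for y in range(0, len(image) - 1, 2):
        row = []
        for x in range(0, len(image[0]) - 1, 2):
            block = (image[y][x] + image[y][x + 1] +
                     image[y + 1][x] + image[y + 1][x + 1])
            row.append(block / 4.0)
        out.append(row)
    return out


def noise_level(image):
    """Standard deviation of all pixels; the image is flat, so this is pure noise."""
    return statistics.pstdev([v for row in image for v in row])


hi_res = noisy_image(128, 128)
lo_res = box_downscale_2x(hi_res)
ratio = noise_level(hi_res) / noise_level(lo_res)
print(f"noise before: {noise_level(hi_res):.4f}, "
      f"after: {noise_level(lo_res):.4f}, ratio: {ratio:.2f}")
```

Under these assumptions, averaging four pixels into one roughly halves the noise standard deviation, which is the "blending out" described above.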

Temporal method of removing DLSS noise

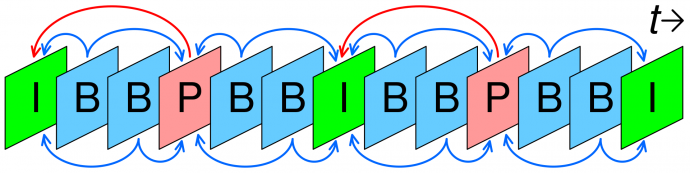

Or, the other approach: you attack noise temporally by speeding up the noise cycling to make the noise invisible. Pushing the noise to cycle far more rapidly can push it beyond human visibility thresholds. Noise that was visible at 30fps or 60fps can become invisible at 480fps at 480Hz. It's just like how it's hard to see DLP noise from an E-Cinema projector at the movie theater, especially with ultra-high-resolution 4K DLP projectors viewed from at least middle-of-theater seating, as the DLP pixels binary-cycle about 1440 to 1920 times a second to generate the color spectrum.

So DLSS noise (that bothers some people) can become invisible when frame rates go up by a massive amount (e.g. 10x). And noise of DLSS isn't as "binary" as DLP noise or other forms of noise (e.g. bad 35mm film noise or weak-analog-TV noise).

Given bad enough noise, the noise will indeed become visible again. But for a given noise floor, the noise becomes less visible the higher the frame rate is. For example, DLSS noise is 1/10th as visible at 200fps as it was at 20fps (assuming the exact same game, exact same DLSS neural training set, exact same frame detail level, and exact same noise intensity, e.g. pixel color error relative to adjacent pixels).
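The trend of noise fading as frame rate rises can be illustrated with a very rough simulation. This is only a sketch under loud assumptions: the eye is modeled as a simple box average over a fixed time window (`window_s` is a hypothetical value, not a measured one), and the noise on a pixel is assumed independent from frame to frame. `perceived_noise` is a made-up helper, not anything from DLSS.

```python
import random
import statistics


def perceived_noise(fps, window_s=0.1, noise_sigma=0.05, trials=2000, seed=7):
    """Std dev of the average of all frames shown within one integration window.

    window_s is a hypothetical stand-in for the eye's temporal integration
    time; the per-frame noise is assumed independent (an assumption).
    """
    rng = random.Random(seed)
    frames_per_window = max(1, round(fps * window_s))
    averages = []
    for _ in range(trials):
        total = sum(rng.gauss(0.0, noise_sigma) for _ in range(frames_per_window))
        averages.append(total / frames_per_window)
    return statistics.pstdev(averages)


for fps in (20, 60, 200, 480):
    print(f"{fps:>3} fps -> residual noise {perceived_noise(fps):.4f}")
```

In this toy model the residual noise falls with the square root of the number of frames in the window, which is a gentler curve than the 1/10th rule of thumb above. Real perception is not a box average, so neither figure should be read as exact; the direction of the effect is the point.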

Some people glance at DLSS, see the DLSS noise, and just discard DLSS. But DLSS noise can be 10x fainter given the right circumstances: neural training set, sufficient frame rate increases, and quality of game integration. So your mileage will vary. Others see only faint noise and don't really care. For others, it's super distracting in esports, especially when detail levels are reduced and the solid colors of low detail make the noise easier to see.

Obviously, you can fiddle with DLSS settings to make the DLSS noise much more blatant. But at the end of the day, when DLSS 4.0 or 5.0 (on a future RTX 6000 or 7000 series) amplifies frame rates by 5x-10x instead of only 2x, the compromises of DLSS noise fall quite a bit once we're at triple or quadruple digit frame rates for the 1000Hz displays of the 2030s doing 8K 1000Hz UE5 graphics (yes, there's actually an engineering path to get there).

The RTX 4090 is rumored to be able to do 4K 400fps in some games, after all.

NVIDIA also credited me on Page 2 of the Temporally Dense Ray Tracing science paper. The paper also illustrates how more temporal samples per second ("temporally dense" = high Hz) greatly reduce temporal-noise issues. While DLSS noise is a very different topic, the noise visibility drops fairly dramatically when refresh rates and frame rates go up 5-10x. Cycle the noise faster (aka higher frame rates), and the noise becomes less and less visible. The flickering noise pixels flicker faster than a human's flicker detection threshold, and the noise looks more solid and fainter. Experimental displays already exist that show how this continues to scale.

If you make it 10x noisier (like really bad photon shot noise), it will require quite dramatic frame rate increases to eliminate that noisiness. But for the current amounts of DLSS noise, it's possible to brute-force the noise visibility out via large frame rate amplification factors (i.e. a distant-future DLSS increasing frame rates by 5-10x instead of just 2x for DLSS 2.0). For example, RTX 4090 DLSS "3.0" is rumored elsewhere to be 3x-4x, but don't quote me on that.

In other words, they're apparently finding ways to make DLSS "suck less", for an ever-expanding set of use cases, including non-esports use cases and esports use cases. Lag-wise, artifacts-wise, even better frame rates, maybe even ability to activate in non-DLSS games -- like DLSS 3.0 that's probably part of RTX 4080, etc.

On the diminishing-returns curve of this refresh rate race, refresh rate increases need to be 3x-4x bigger to remain a human-visible benefit (e.g. 360Hz-vs-1000Hz at 0ms GtG is much more visible than the faint 240Hz-vs-360Hz at current GtG pixel response). Retina refresh rates are not reached until well into the quadruple digits (or quintuple digits in the case of sample-and-hold VR, due to the way wide-FOV retina-resolution displays really amplify the Hz limitations of sample-and-hold displays). So a long road of progress is still needed for continued refresh rate improvement and GPU improvement.
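The arithmetic behind the diminishing-returns point can be made concrete with frame times. This is a simple sketch, not a perception model: it treats frame time (1000 / Hz milliseconds) as a rough proxy for what a sample-and-hold display can improve, and it ignores GtG pixel response entirely. The helper names are hypothetical.

```python
def frame_time_ms(refresh_hz):
    """How long each frame is held on screen, in milliseconds."""
    return 1000.0 / refresh_hz


def compare(low_hz, high_hz):
    """Return (ratio of refresh rates, milliseconds of frame time saved)."""
    return high_hz / low_hz, frame_time_ms(low_hz) - frame_time_ms(high_hz)


for low, high in ((240, 360), (360, 1000)):
    ratio, saved = compare(low, high)
    print(f"{low}Hz -> {high}Hz: {ratio:.2f}x, frame time "
          f"{frame_time_ms(low):.2f}ms -> {frame_time_ms(high):.2f}ms "
          f"(saves {saved:.2f}ms)")
```

The 240Hz-to-360Hz step is only a 1.5x jump, while 360Hz-to-1000Hz is nearly 2.8x, which fits the point that bigger multipliers are needed to stay clearly visible.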

Current DLSS 1.x / DLSS 2.x / FSR are, metaphorically, merely early-bird Wright Brothers 2x frame rate amplification technologies: a precursor to upcoming algorithms capable of increasing frame rates by 5x-10x (e.g. 1000fps at UE5 quality within one human generation). There is already research going on towards this.

It is great that AMD is leapfrogging NVIDIA (somewhat), at least until the hypothetical next-generation DLSS 3.0 assumed to be part of RTX 4090, but they must keep leapfrogging and one-upping each other. We need 5x-10x frame rate amplification ratios in future smart FSR/DLSS algorithms for the 1000fps 1000Hz displays of the 2030s, and there are multiple algorithmic engineering paths to get there via long-term progress in frame rate amplification technologies.
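To put numbers on those ratios, here is a small back-of-envelope sketch of how much natively rendered frame rate a GPU would still need to hit 1000fps at different amplification factors. It assumes amplification is a clean multiplier with no overhead, which is a simplification, and `required_base_fps` is a hypothetical helper.

```python
def required_base_fps(target_fps, amplification):
    """Natively rendered frame rate needed to reach target_fps after amplification."""
    if amplification <= 0:
        raise ValueError("amplification factor must be positive")
    return target_fps / amplification


for factor in (2, 5, 10):
    base = required_base_fps(1000, factor)
    print(f"{factor}x amplification: render {base:.0f}fps natively to show 1000fps")
```

At 2x the GPU still has to render 500fps on its own, while at 10x it only needs 100fps, which is why the larger ratios matter for 1000Hz displays.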

Oh, and a semantic correction -- official Associated Press guidelines define it as "esports" with no capital (not "eSports") and no hyphen (not "e-sports"), unless it's the first word of a sentence, where it becomes "Esports". Most businesses in the industry, including Blur Busters, have now followed suit. It's a nitpick nuance that I typically don't care much about and "just rolled with it", but -- umm -- friendly heads up.


![VideoSignalStructure[1].png VideoSignalStructure[1].png](https://cdn.hardforum.com/data/attachment-files/2022/01/571506_VideoSignalStructure1.png)