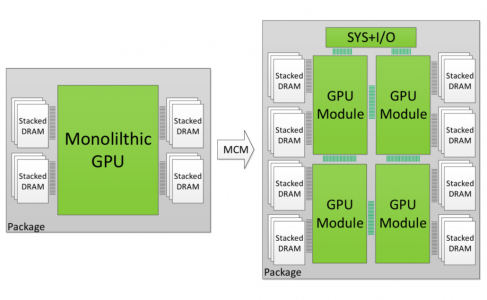

A new patent submitted to the US Patent Office on December 31 describes AMD's approach to a potential GPU chiplet design that implements ‘high bandwidth passive crosslinks’.

According to the patent, a primary GPU chiplet would be directly ‘communicably coupled’ to the CPU, while each of the secondary GPU chiplets in the array would be coupled to the primary chiplet via a passive crosslink.

In this sense, AMD treats the passive crosslink as communication wires between chiplets that are placed on a single interposer (in multiple layers if needed).

In conventional designs, each GPU features its own last-level cache (LLC). To avoid problematic synchronization, AMD proposes that each GPU chiplet still features its own LLC, but that each of those caches is ‘communicably coupled’ to physical resources so that the cache remains ‘unified and remains coherent across all GPU chiplets’.
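
To make the layout described above easier to picture, here is a rough toy model in Python. It is only a sketch and not how the patent implements anything: the class names, the `path_from_cpu` helper, and especially the modulo mapping of addresses to cache slices are assumptions made up for illustration. The idea it shows is that the CPU only talks to the primary chiplet, and that if every address has exactly one home slice, the per-chiplet caches behave like one unified cache.

```python
from dataclasses import dataclass, field


@dataclass
class Chiplet:
    """One GPU chiplet holding its own slice of the last-level cache."""
    index: int
    llc_slice: dict = field(default_factory=dict)  # address -> cached value


class ChipletArray:
    """Toy model: chiplet 0 is the primary, the others hang off it via crosslinks."""

    def __init__(self, count: int):
        self.chiplets = [Chiplet(i) for i in range(count)]

    def path_from_cpu(self, target: int) -> list:
        # The CPU is coupled only to the primary chiplet (index 0);
        # secondary chiplets are reached through it over a passive crosslink.
        if target == 0:
            return ["CPU", "chiplet0"]
        return ["CPU", "chiplet0", f"chiplet{target}"]

    def home_slice(self, address: int) -> Chiplet:
        # ASSUMPTION: plain modulo interleaving, chosen only for illustration.
        # The article does not say how addresses map to cache slices.
        return self.chiplets[address % len(self.chiplets)]

    def write(self, address: int, value) -> None:
        # Every write for an address lands in the same slice, so there is
        # never a second copy that could go stale.
        self.home_slice(address).llc_slice[address] = value

    def read(self, address: int):
        return self.home_slice(address).llc_slice.get(address)


array = ChipletArray(4)
array.write(6, "texel")
print(array.path_from_cpu(3))
print(array.home_slice(6).index, array.read(6))
```

Because a given address always resolves to the same slice no matter which chiplet asks for it, the four separate caches in this sketch look like a single coherent one to the rest of the system.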

Source: Freepatents via @davideneco25320

https://videocardz.com/newz/amd-pat...4pNlj-nJKIRA64fjWs-jfxliMIF3RN8XGZ7JsBYtG9WnQ
