Pusher of Buttons

[H]ard|Gawd

- Joined: Dec 6, 2016
- Messages: 1,924

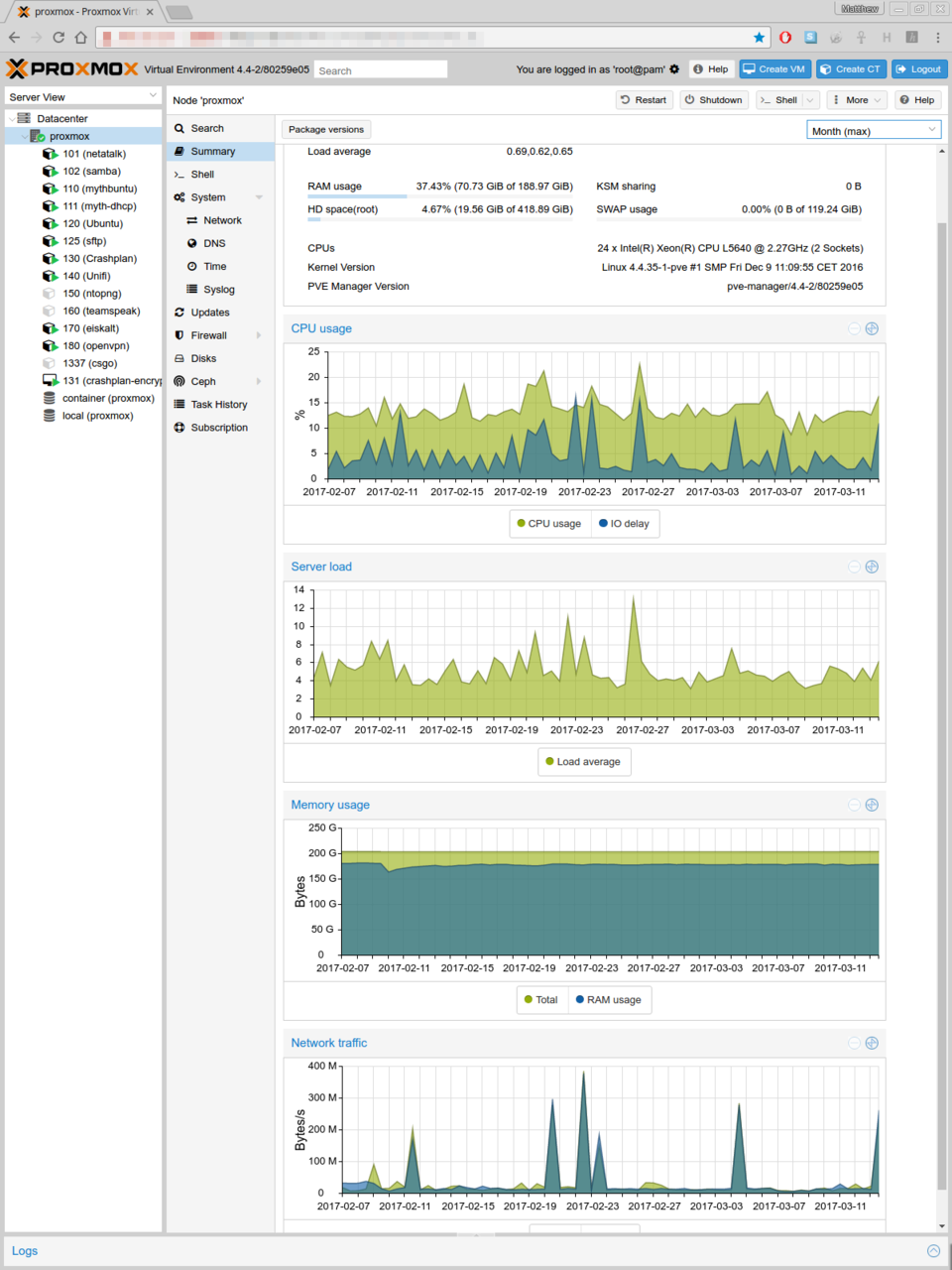

I ran an incredible amount of stuff on a 2x8/16, 64GB Dell rack server at my prior job... I can't even comprehend how much junk you could stuff into a fully loaded dual-socket Naples system.
