HardOCP News

[H] News


For the last nine months, AMD has brought a singular focus back to GPUs through the Radeon Technologies Group. During that time, the company has made significant investments in hardware, marketing, and software for the graphics lineup, leading to four straight quarters of market share growth.

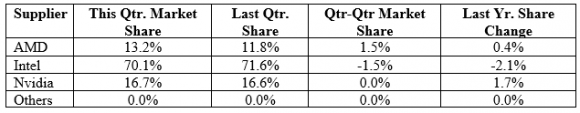

According to Mercury Research, AMD gained three points of unit volume share in Q1 2016. The recently issued Q2 2016 market share data from both Mercury Research and Jon Peddie Research confirms that AMD has posted its fourth consecutive quarter of desktop discrete GPU share growth, driven by AMD’s strongest quarter of channel GPU sales since 2015 and the start of shipments of the next-generation Polaris GPUs.

In total discrete graphics, AMD gained 4.8 share points to reach 34.2% of the market by unit volume (based on Mercury Research). In desktop discrete, a subset of total discrete, AMD saw a 7.3 share point increase, rising to 29.9% market share. This is further evidence that AMD’s strategy is working as the company drives forward towards “Vega” offerings for the enthusiast GPU market, which AMD expects to bring to market in 2017 to complement its current generation of “Polaris” products.

AMD believes it is well positioned to continue this trend in market share gains with the recently launched Radeon RX 480, 470, and 460 GPUs that bring leadership performance and features to the nearly 85% of enthusiasts who buy a GPU priced between $100 and $300.

