Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

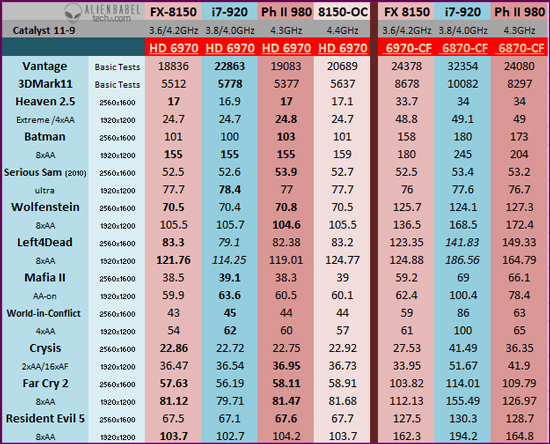

I agree on all parts. Until there's an apples-to-apples comparison between AMD CFX and SLI, it's kind of hard to say if the problem really is the processor itself. For all we know, the SLI drivers are broken as hell for the 990 chipset (and honestly I wouldn't doubt that from Nvidia). All we got was half the cookie; now we need the rest of it. Without it, the review doesn't really tell us as customers/readers anything.

Sure, the processor may be cheaper, but the entire platform sure as hell isn't, yet people seem to love ignoring that fact.

I'm not sure about that. You can get into a GOOD 2500K + mobo + RAM for under $400.

That's a pretty damn good mainstream PC...

P.S. When building a PC for a customer, it's really hard not to recommend an Intel-based system... AMD just dropped the ball on Bulldozer (I was actually waiting for this proc to upgrade, too).
