Rockenrooster

Gawd

- Joined

- Apr 11, 2017

- Messages

- 955

From what I've seen it's not a DLSS replacement...

A more accurate statement would be "an alternative to DLSS", which is what everyone wants.

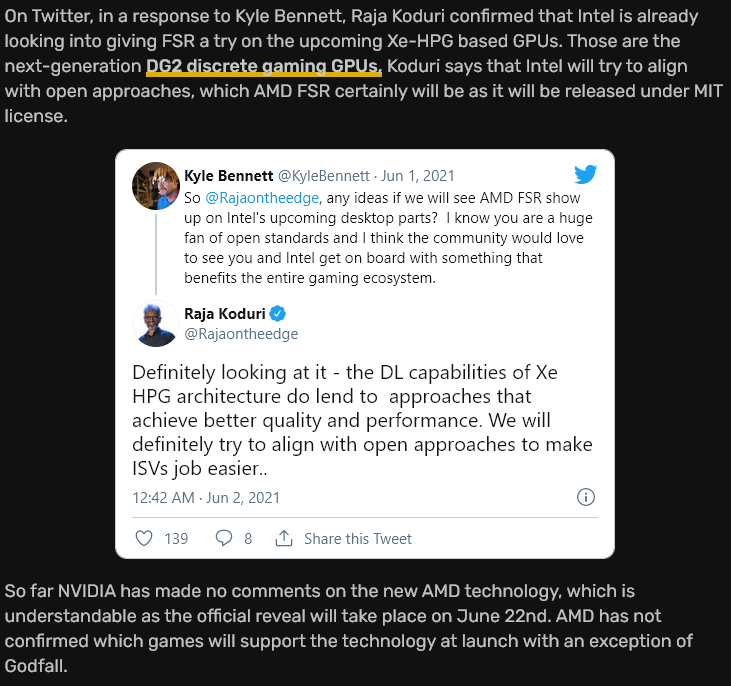

Looks like Intel is wanting to push into open-source DLSS with AMD, well, according to the Twitter post between Kyle and Intel's GPU god.

That Twitter back-and-forth showed up on my newsfeed last night: "I know that guy!"

Knowing Raja fairly well and his background with open standards, it seemed like a good question. Did not expect it to get as much attention as it did, but honestly, I am glad it did. I would love to see Intel come into line with AMD on this FSR standard as it has HUGE implications for gamers across the board, not just the folks that can afford an RTX GPU and have been lucky enough to snag one.


With AMD on next-gen consoles...

Fact of the matter, FreeSync won. Of course Nvidia calls it "G-Sync Compatible" because they will never admit defeat. But the free and open standard won out.

I don't understand the optimism when we haven't even seen a demo.

I for one don't hold out much hope for FSR being better than DLSS 1.0 upon release. Anything "less clunky" than that would really impress me.

Just because something "wins" (by your example, I take that to mean 'more widespread availability') doesn't mean it's necessarily better though. G-Sync is far from dead, so it isn't playing out like a format war.

The quality and performance of this tech remains to be seen. DLSS 2.0 is pretty amazing, the bar is set. If it follows in FreeSync's footsteps, it's going to be many years before it has competitive performance. I like the idea that it can run on anything, but it doesn't seem realistic that doing so will perform even reasonably well on most hardware out there. DLSS 2.0 requires significant compute power, currently achieved using dedicated hardware. Enabling those features on a 1080Ti, gonna be a slideshow. Granted, raytracing on a 1080Ti is already a slideshow... but still. Going from 12 fps to say 16 fps, 33% performance boost! But the slideshow would still be a slideshow on 95% of the hardware out there. Might be useful on 6800XT and 6900XT, which is really what it is being written for. They have to try to catch up to nVidia. To really do that, they need to aim for an 8x performance jump in their 7800XT's (as compared to the 6800XT)... being forever 2 years behind sucks, because if you are a hardware enthusiast, 2 years behind doesn't cut it.
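For anyone who wants to sanity-check frame-rate claims like the ones in this thread, here is a minimal Python sketch. The helper names `percent_uplift` and `frame_time_ms` are made up for illustration, and the fps pairs are just the figures quoted by posters here, not measurements of any real game or GPU.

```python
def percent_uplift(fps_before: float, fps_after: float) -> float:
    """Relative frame-rate gain, as a percentage of the starting frame rate."""
    if fps_before <= 0:
        raise ValueError("fps_before must be positive")
    return (fps_after - fps_before) / fps_before * 100.0


def frame_time_ms(fps: float) -> float:
    """Time available to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    # Illustrative pairs taken from posts in this thread, not benchmarks.
    for before, after in [(12, 16), (20, 30)]:
        gain = percent_uplift(before, after)
        print(
            f"{before} -> {after} fps: +{gain:.1f}% "
            f"({frame_time_ms(before):.1f} ms -> {frame_time_ms(after):.1f} ms per frame)"
        )
```

The gain is measured against the starting frame rate, so 12 to 16 fps is roughly a one-third improvement even though both numbers are still well short of playable.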

I'm not being pro-NV by any means, but have we gotten any concrete information on FSR? So far I've only seen PR materials and some AMD performance figures. If there is already concrete information I'd love to dive in deeper.

How many games supported DLSS on day one? How many GPUs supported DLSS on day one? That is right, none. Not a high bar and you don't need a $1200 video card for nothing supported.

I am sorry that NV has destroyed your thoughts on what you should get with supported features.

FidelityFX is already launched and is AMD's software collection of tools that devs can implement in-game. FSR is just another component of that tool set. FSR stands for FidelityFX Super Resolution.

According to Digital Trends, FidelityFX will support variable rate shading, contrast adaptive sharpening, denoising, and parallel sort to improve image quality. FidelityFX will be supported in 35 games at launch. Granted, this is an Xbox Series X article, so we'll have to wait and see how this translates to PC games. Link to article.

It's going to work just fine, it is using an amalgamation of two already existing supersampling methods that are very common and already abundant at a hardware level in everything from TVs to cellphones. What AMD and Microsoft have managed to do here is create a new algorithm that utilizes the best of both methods while supposedly ignoring or compensating for both of their drawbacks. Given that the linear and non-linear upscaling processes have been baked into all generations of hardware for a long time and are very well known, I am sure they will do something that is beyond adequate. I don't believe it will achieve DLSS levels of quality or clarity, but I can completely see it being 90% of the quality while using significantly fewer GPU resources in the process. It looks like it will be more complex to implement, as AMD does not do anywhere near the degree of hand-holding support that Nvidia does for their feature sets. But given that it will be supported on PS5, Xbox, and the last 3 generations of PC GPUs, I can easily see it gaining large adoption, as for the consoles it will very much mean the difference between hitting 60fps and not, especially at "4K" resolutions.

I love your optimism.

Imagine if you're, say, CD Projekt Red and it's October last year, and AMD got this open standard finalized and out the door before you launched your Cyberpunk game. Instead of being forced to refund millions of dollars of last-gen console sales... you simply release your FSR patch and last-gen consoles become playable... no, not 120 fps, but playable.

Cyberpunk 2077 supported the original FidelityFX, and it was the only way to get close to 60 fps on my GTX 1060 test rig.

So yeah, they were working with AMD and would obviously have added this new FSR if available. Not sure if they used it on consoles, but there was some render scaling.

I have a feeling they expected AMD to have this ready to go at launch. I know it sounds like they got pushed to get it out the door. Still, I believe they expected this feature to make everything OK on last-gen consoles. Something like: "Yeah, we are only seeing 20 FPS... but AMD is going to have FSR ready for their next-gen cards and consoles and get us to 30 FPS on PS4/Xbone, it will be fine."

Very heavy speculation... perhaps Microsoft not pulling it from their old-gen consoles as quickly may have had something to do with MS being involved in implementing FSR. Not to absolve CDPR for their mess... but perhaps there was some blame to go around if they did expect to get a magic 30-40% uplift from MS/AMD.
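To put rough numbers on the render-scaling idea discussed above, here is a back-of-the-envelope Python sketch. It rests on a loud assumption: that frame time scales linearly with the number of pixels rendered, which ignores CPU limits, fixed per-frame costs, and the cost of the upscaler itself. The function names are hypothetical, and nothing here reflects how FSR or any CDPR build actually works.

```python
import math


def per_axis_render_scale(fps_now: float, fps_target: float) -> float:
    """Per-axis resolution scale needed to reach fps_target.

    ASSUMPTION: frame time is proportional to rendered pixel count.
    Real games are rarely this simple, so treat the result as a rough guide.
    """
    if fps_now <= 0 or fps_target <= 0:
        raise ValueError("frame rates must be positive")
    # Fraction of the original pixel count we can afford to render.
    pixel_fraction = min(1.0, fps_now / fps_target)
    # Pixel count scales with width * height, so each axis scales by the root.
    return math.sqrt(pixel_fraction)


def scaled_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Internal render resolution for a given per-axis scale."""
    return round(width * scale), round(height * scale)


if __name__ == "__main__":
    scale = per_axis_render_scale(20, 30)  # the 20 -> 30 FPS case from the post
    w, h = scaled_resolution(1920, 1080, scale)
    print(f"per-axis scale: {scale:.3f}, render at {w}x{h} and upscale to 1920x1080")
```

Under that assumption, going from 20 to 30 FPS means rendering about two thirds of the pixels, or roughly 82% of the resolution on each axis, before upscaling back to the output resolution.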