It's the new thing - all cards will be getting this and you will pay for it in the next new card. Turning it off won't save you money.

One could make the same argument about AA when it was new, or about the countless other features we have. They're going to make features like this regardless of whether we want them or not. I'm going to sit back and enjoy the ride.

AMD Could Tease DLSS 3-rivaling FSR 3.0 at GDC 2023

- Thread starter erek

One could make the same argument about AA when it was new or the countless other features we have. They're going to make features like this regardless of if we want them or not. I'm going to sit back and enjoy the ride.

I was just addressing the point that we are all paying for it. I think comparing AA to something like RT in terms of development costs is kind of ridiculous. The funny part is that AA probably brings much more to image quality as well.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,003

I was just addressing the point we are all paying for it. I think comparing AA to something like RT in terms of development costs is kind of ridiculous. The funny part is that AA probably brings much more to image quality as well.

You'd be surprised how many people were avidly against AA when it was first made usable, calling it "fake resolution", "never a substitute for higher resolution", "not worth the performance hit", "don't notice the difference", and "blurry and soft, removes all sharpness".

And yes, in order to make MSAA work on the first range of cards: it required hardware designed around it, more VRAM and a higher pixel throughput than a traditionally designed product at the time, and those design decisions didn't have much of an effect on the non-AA performance of the cards.

RT is being sold as a gimmick right now, the same way DX10 compute shader architecture was, the same way DX11 Tessellation was... now those are just 'graphics'. One Day RT will be just 'graphics', but that day is not here yet.

funkydmunky

2[H]4U

- Joined

- Aug 28, 2008

- Messages

- 3,870

no matter how good FSR gets it'll never match DLSS quality

Until Nvidia's attempt at a proprietary lockdown fails once again and the market swings to the open source model that NEVER needed magic sauce all along. Been there, seen that.

Once you feed Intel or Amd non proprietary solution Motion vectors, may has well do it for DLSS no ?Until Nvidia's attempt at a proprietary lock down fails once again and the market swings to the open source model that NEVER needed magic sauce all along. Been there, seen that.

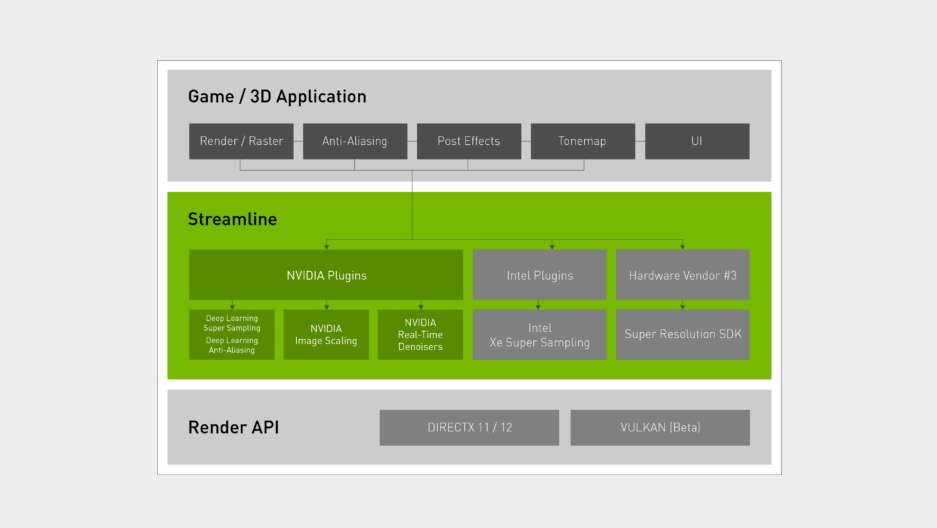

Maybe it will look like this in the future, cementing Nvidia DLSS presence among almost all title that support anytype of advance supersampling that require the game engine to do anything like FSR 2+ of intel super sampling.

Possible that a single way that will make all solution of all vendor work for every game that want to user supersample (like Streamline try to do) https://github.com/NVIDIAGameWorks/Streamline become the norm.

Or maybe unreal 5 type of solution will beat all the video card vendor solution....

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

Streamline sounds great, but nVidia is the wrong company to be doing it and it's likely going to absolutely screw us. I hope I'm wrong.

You'd be surprised how many people were avidly against AA when it was first made usable. calling it "fake resolution" and "never a substitute for higher resolution" and "not worth the performance hit" "don't notice the difference" "blurry and soft, removes all sharpness"

I would be surprised if you found one example to back up your claim. AA was first developed for advanced cameras and widely accepted.

And yes, in order to make MSAA work on the first range of cards: it required hardware designed around it, more VRAM and a higher pixel throughput than a traditionally designed product at the time, and those design decisions didn't have much of an effect on the non-AA performance of the cards.

MSAA is an ENHANCEMENT of AA. That's not what I am talking about and you know it. Standard AA.

RT is being sold as a gimmick right now, the same way DX10 compute shader architecture was, the same way DX11 Tessellation was... now those are just 'graphics'. One Day RT will be just 'graphics', but that day is not here yet.

NO, RT is not being SOLD as a gimmick. Nvidia sells it as the second coming of Christ. I would say shaders and tessellation are, and always will be, much more important to graphics than RT will ever be, at a sliver of the development cost of RT eye candy.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,859

Streamline sounds great, but nVidia is the wrong company to be doing it and it's likely going to absolutely screw us. I hope I'm wrong.

Yeah, Nvidia has a long history of market manipulation using proprietary solutions as lock-ins and lock-outs.

They are the worst possible company to trust for any kind of industry standard.

And we need industry standards. Implementations might be proprietary, but all standards in the PC market need to be open. That's what makes the PC great.

As soon as you put up proprietary walls, everything goes to shit, and Nvidia is the worst at this. Sometimes it feels like they have been actively trying to ruin the PC market for decades.

No corporation is your friend, they are all in it to make money for their shareholders after all, but there are definitely ones with better and worse track records.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,801

No corporation is your friend, they are all in it to make money for their shareholders after all, but there are definitely ones with better and worse track records.

A key point that a lot miss.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

A key point that a lot miss.

Especially most AMD fans.

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,894

Once you feed Intel or Amd non proprietary solution Motion vectors, may has well do it for DLSS no ?

https://github.com/NVIDIAGameWorks/RayTracingDenoiser

Streamline sounds great, but nVidia is the wrong company to be doing it and it's likely going to absolutely screw us. I hope I'm wrong.

Nvidia is fronting it, but the source is open:

https://github.com/NVIDIAGameWorks/Streamline

anybody can contribute or fork it should they feel a need.

Projects like these are critical to Nvidia right now. Their ARM purchase failed because they have a history of being too monopolistic and protectionist. Now, with their size, they can create projects like these: develop for Nvidia first and your stuff will also work with everybody else's. It keeps Nvidia front and center of all the action, with everybody else a trickle-down effect. Sadly for us, it also puts a cage on Intel and AMD, because they are literally "downstream", which guarantees they are catching Nvidia's run-off. On the other hand, it will also give Intel a much-needed leg up. Nvidia's gain here is that they can show international bodies they are working with the GPU community as a whole and being less monopolistic and protectionist. If they can keep this up for a few years, the next time they go to make a large purchase they can say they learned from their past mistakes and are working hard on not being what they were before. While true, the lessons they learned are how to better game the system.

Ultimately, though, this is mostly a good thing for the consumer. AMD isn't a real player in desktop GPUs; they make them, but their financials show where their priorities are. Intel is the real up-and-comer here, and it is Intel that Nvidia needs to work with. Intel is far more likely to play ball: it needs Nvidia's support, and it's projects like these that will help it get developer support and become a viable contender in the GPU space. This seems counter-intuitive at first, but Nvidia wants to sell less consumer hardware. They want somebody to take the lower-margin mid to low end away from them so they can focus that silicon on server, datacenter and custom silicon.

AMD's recent numbers for custom GPU silicon rival Nvidia's GPU sales, and that is a market Nvidia wants to grow into: it's less work, with better margins and consistent payouts. Nvidia is happy to hand over the low end, where margins are tight and volume is key. Honestly, that is Intel's wheelhouse right there.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

Nvidia is fronting it, but the source is open

Going to take a lot more than this to compensate for decades of that mindset at nVidia. It's also apparently a Jensen-down problem, so I remain extremely skeptical it will ever change so long as the jacket is in charge.

I was just addressing the point we are all paying for it. I think comparing AA to something like RT in terms of development costs is kind of ridiculous. The funny part is that AA probably brings much more to image quality as well.

i have no argument in cost comparisons. i just think complaining about cost with regards to DLSS or FSR is silly because they're going to do it whether we like it or not. so long as people are buying cards they will continue to 'invest' in whatever the new cool tech is - whether it's useful or not.

personally i think FSR and DLSS are a nice addition. i haven't played with it much yet since i have not yet needed it, but in the future as games get more difficult for my card to run i can see using it to keep going longer before buying something else.

I was actually talking about standard AA. I agree FSR is nice. Well, the DLSS/RT deal is the reason GPUs are 1500 instead of, say, 750. Name one other graphics enhancement that has to be run through a supercomputer for optimization. What do you think that costs? I look at them side by side and I can't tell the difference between the latest generations of FSR and DLSS. DLSS means nothing to me, but vendor-agnostic FSR looks way more interesting. I'm not complaining - I'm bringing up a point. There are lots of threads here you can peruse if you want to read some hypocritical crying. I'm trying to stay out of that crap. Apparently it gets me into trouble.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

Name one other graphic enhancement that has to be run through a supercomputer for optimization? What do you think that costs?

DLSS hasn't needed that since 2.0. Ray tracing never has.

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,114

From my understanding that's rarely how it is done. They use a very similar algorithm (if not exactly the same) for all resolutions; screens that allow pure pixel scaling are more the exception than the norm, and I would imagine it is quite easy to beat anyway.

PlayStation and Xbox consoles, for example, do not seem to worry and go anywhere from around 800p to 1800p with dynamic and zone resolution.

No matter the rendering resolution, it is all scaled to the output resolution on consoles.

And yes, in order to make MSAA work on the first range of cards: it required hardware designed around it, more VRAM and a higher pixel throughput than a traditionally designed product at the time, and those design decisions didn't have much of an effect on the non-AA performance of the cards.

FSAA, not MSAA. MSAA wasn't a thing until it was added to OpenGL in version 1.5 around the mid-2000s.

Dlss hasn't needed that since 2.0. Raytracing never has.

2.0 is when it was no longer needed. Ever since then DLSS profiles get updated silently through GeForce Experience. People who don't install GFE only get the updated profiles when they install new drivers.

Yeah the multi billion dollar company is just some scrappy startup in the face of mean old Nvidia. Keep making excuses... AMD = Admittedly Mediocre Devices, and it's not for lack of funding.

Yeah, your admittedly mediocre devices seem to have been beating Intel for a number of years now, and came out of nowhere to almost match Nvidia last gen. That other thread about GPU history got me thinking: they also soundly beat Nvidia in the three generations from the 9700 Pro all the way to the X1900, and then again with the 7970 and 290X they had the better arch as well. Meanwhile Nvidia was decent performance-wise but also being shady: piling on extra tessellation when it wasn't needed during the Fermi days, starting the TWIMTBP shenanigans, and wanting to go even further with their dodginess until the tech media, and especially Kyle, called them out for it and they backed off. And I won't even get into the other market manipulations they've done in the past.

Also, it's strange how you just put out a blanket statement that it's not for lack of funding, which isn't really factually correct, is it? GPUs take a few years from the R&D phase to production. AMD's combined CPU+GPU R&D budget was far, far lower than Nvidia's, especially if you take their comparative figures from 3-4 years ago.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,859

Funding is definitely a part of it.

AMD has had some cool ideas, and occasionally these cool ideas result in a spurt where they are competitive and even beat Nvidia (though this has been a while)

What they lack is consistency, and you only get that kind of consistency by having large, well-funded driver and hardware development groups over multiple generations.

Let's not forget that Intel almost killed AMD. 20 years ago AMD arguably had better CPUs than Intel, through some clever innovations, acquisitions, licensing and poaching of staff (NexGen, DEC/Alpha etc.), but Intel used their clout to block them from getting any of the lucrative OEM deals, meaning they had to survive on us low-volume enthusiasts. They could never take enough advantage of their leadership position in the early 2000s to pay for the development of their next-gen CPUs. Intel sabotaging AMD performance in the Intel compiler was just the icing on the cake.

AMD had to sell off their fabs just to survive.

AMD used their billion dollar legal settlement from Intel to acquire ATi, as they saw moving into on-die GPUs as more likely to be successful than taking on Intel head-on with GPU-less CPUs.

One of the many reasons Bulldozer and the follow-on FX designs were so bad was because they didn't have enough money to fund R&D to get it done. They had to take shortcuts, using automated copy and paste tools during an era when the norm was to hand optimize circuits. They were also forced to use the same design for Server, Desktop and what little mobile they were trying to get into.

For years AMD's CPU division and GPU division competed with each other for limited funds to develop decent products. It wasn't all that long ago that we first heard of the Zen architecture being developed and thought it sounded promising, but we all wondered if AMD could survive long enough to actually bring it to market. Surprise: they did, and it has been very good for them on the CPU side of things.

I suspect their primary focus right now is to achieve that generation-after-generation consistency on the CPU side, and only once they feel it is relatively well cemented will they let themselves get more distracted by GPUs. GPUs have definitely had less investment than would be ideal. They scraped their way up to being pretty close, and would have overtaken Nvidia with the 6000 series if RT hadn't become so important. The 6900 XT was faster than anything Nvidia had in traditional raster rendering when it launched. Nvidia pulled a "Lucy with the football" on them. As they were racing to take raster performance by storm, Nvidia moved the goalposts and stole their thunder. And that's fine, that's competition. I often feel Nvidia tries to change the market to suit them, rather than compete in the market they are in, which is a little shitty, but it is what it is.

It will take AMD a couple of generations to adjust to the new market realities which are RT and AI forward. I think they can do it and cement themselves as a regular contender on both the CPU and GPU front, occasionally slightly ahead, occasionally slightly behind, but nowhere near as far behind as they were in the dark days from ~2005 until 2016.

And that's all I hope for. Balance in the market. An AMD which can compete with Intel when it comes to CPU's and with Nvidia when it comes to GPU's.

But that isn't enough.

Economic research shows you need 3-5 viable competitors to really make a competitive free market. We might get a 3rd in Intel on the GPU side, but the CPU side seems hopeless for as long as x86 is the primary desktop chip because of Intel's licensing arrangements. I wish we still had Cyrix/Centaur and NEC around as options.

The barriers to entry are so high at this point that it seems unlikely anyone else can enter. On the CPU side at least until x86 gives way on the desktop for whatever is next, be it ARM or RISC-V or whatever. That will open it up a little bit. There are some other players there who currently are focused on mobile, but who could scale up to desktop.

On the GPU side both Samsung and Qualcomm make mobile GPU's. One wonders what it would be like if those were scaled up to discrete desktop GPU power levels...

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,859

2.0 is when it was no longer needed. Ever since then DLSS profiles get updated silently through GeForce Experience. People who don't install GFE only get the updated profiles when they install new drivers.

I did not know that they updated DLSS profiles outside of regular driver updates.

That's good to know, but I'm still not installing the GeForce Experience. Way too much shady shit runs in the background when it is installed. All sorts of strange services, processes and Nvidia containers are always active.

Maybe they are innocuous, maybe not, but I didn't want that shit running in the background on my machine, so I decided to remove it and never install it again.

I'm allergic to always running background processes, except when I intentionally set them up myself.

AMD FidelityFX Super Resolution 3 (FSR3) to officially launch tomorrow

While HYPR-RX made its debut with the September drivers, FSR3’s exact release date remained a mystery until now. Officially, FSR3 is set to make its first appearance in games starting tomorrow, as confirmed by a tweet from Frank Azor.

Although Frank didn’t specify the games launching with FSR3 tomorrow, AMD has confirmed that Forspoken and Immortals of Aveum will be among the first titles to feature this technology.

https://videocardz.com/newz/amd-fidelityfx-super-resolution-3-fsr3-to-officially-launch-tomorrow

That seems sudden…

Surprisingly though, FSR 3 support doesn’t seem to be coming to Starfield, or at least it’s not on AMD’s list of titles. In response, PureDark has said that if they don’t add it, he will as a mod, so…

Nvidia MUST be blocking FSR3 from Starfield while they work on DLSS. It's the ONLY logical reason why FSR3 is not in Starfield yet </sarcasm>

FSR 3 Frame Gen also seems to work quite decently on an Ampere GPU like the RTX 3080 in Forspoken. Ideally you just need to force Reflex with Special K & you should have a great experience with it.

https://twitter.com/Sebasti66855537/status/1707737289172857294

Input lag feels fine at 120Hz locked. Should get even better once it supports Gsync/VRR/Freesync. I can see little bit of artifacts on the marker in the distance at 120Hz locked when I move the camera faster. Tends to get worse below 100 etc.

Also my frametime graph does not look right, it's a thick line:

View: https://imgur.com/a/IfofP1r

Only judging the 120Hz locked performance with FSR3, it's a good start for FSR3 IMO, but they absolutely need to support Gsync/VRR/Freesync ASAP.

AMD FSR 3 Now Available

It is recommended to use AMD FSR 3 with VSync, as when enabled, frame pacing relies on the expected refresh rate of the monitor for a consistent high-quality gaming experience. Additionally, it is recommended that Enhanced Sync is disabled in AMD Software: Adrenalin Edition™ settings, as it can interfere with frame pacing logic. A game using AMD FSR 3 in this configuration will show a “zigzag” pattern on frame time timing graphs in performance measuring tools such as AMD OCAT. This is completely expected and does not indicate uneven frame pacing.

https://community.amd.com/t5/gaming/amd-fsr-3-now-available/ba-p/634265?sf269320079=1

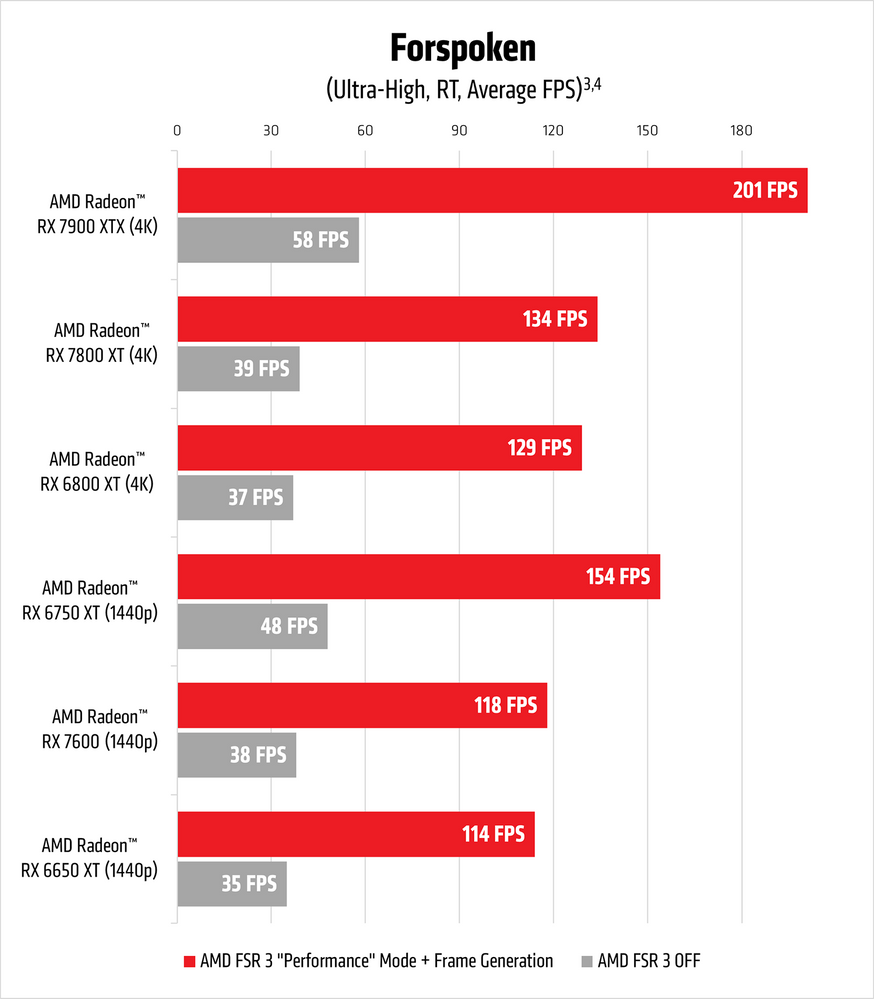

FG Performance on AMD Radeon

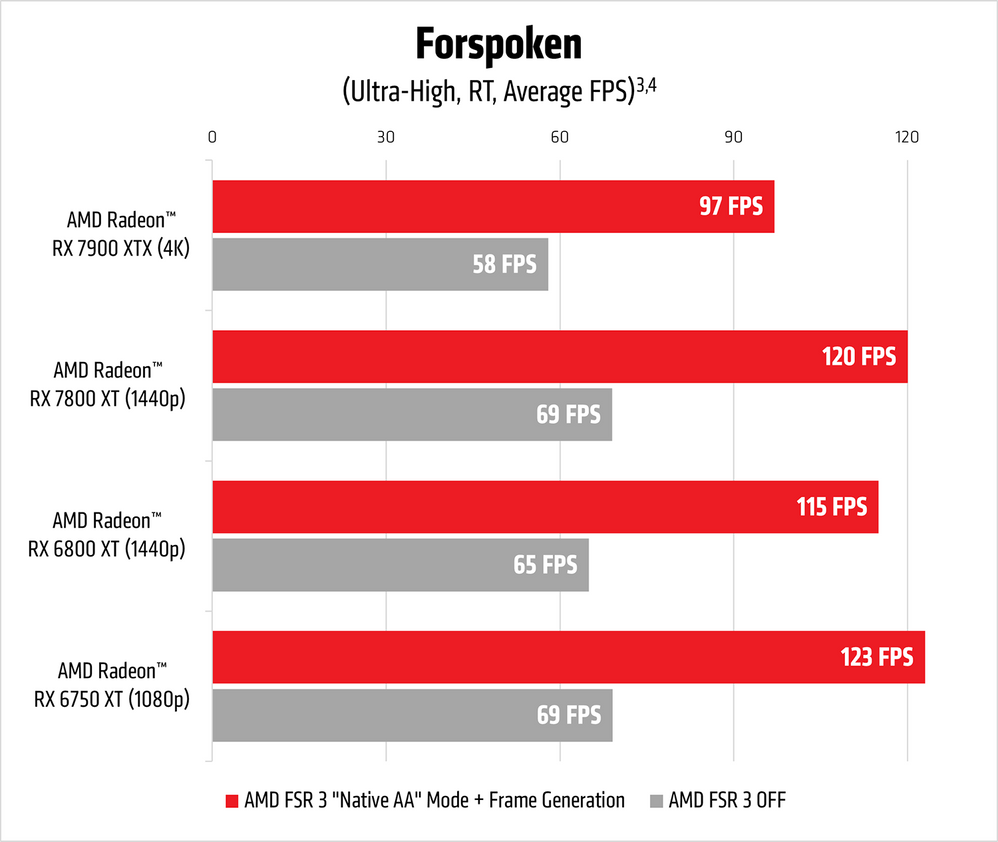

Native AA mode Gaming

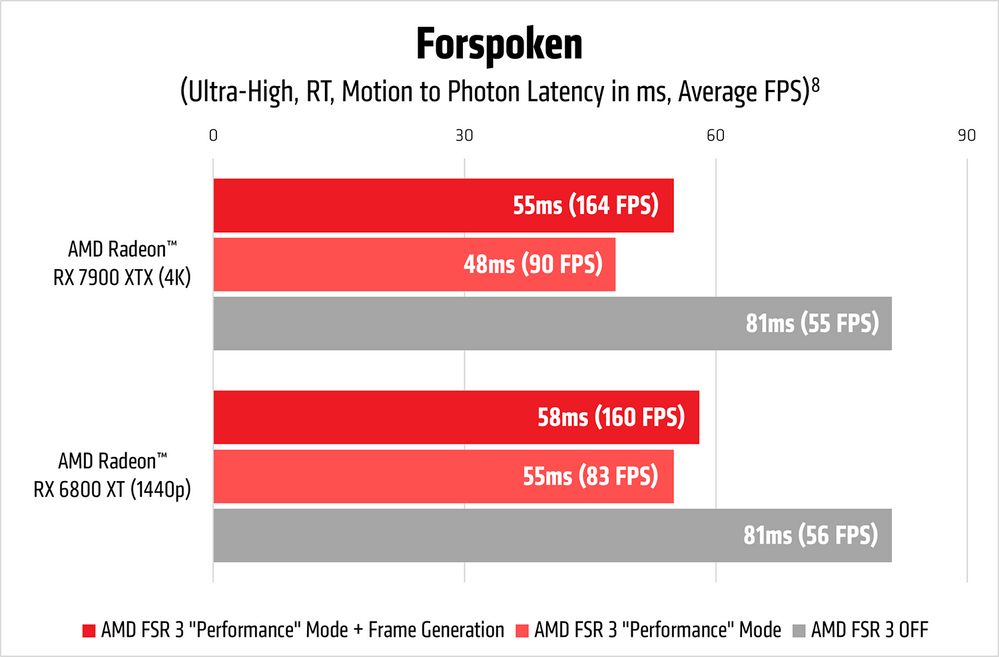

Latency Reduction Results

Furious_Styles

Supreme [H]ardness

- Joined

- Jan 16, 2013

- Messages

- 4,532

Can't wait to see how well this works on 30 series, finally see if nvidia was blowing smoke that entire time.

SpecialK is the way to go for Nvidia users atm.

"Broken low latency mode in the engine, enforcing Nvidia Reflex to Low Latency and setting Reflex Trigger to end-of-frame in SpecialK makes the frametime flat."

Edit: Hmm, the FSR3 degradation (in terms of artifacts and also latency) when switching from 120Hz to 100Hz is a little too heavy. I hope this is not a sign that it only runs well at 120FPS and above.

SPARTAN VI

[H]F Junkie

- Joined

- Jun 12, 2004

- Messages

- 8,762

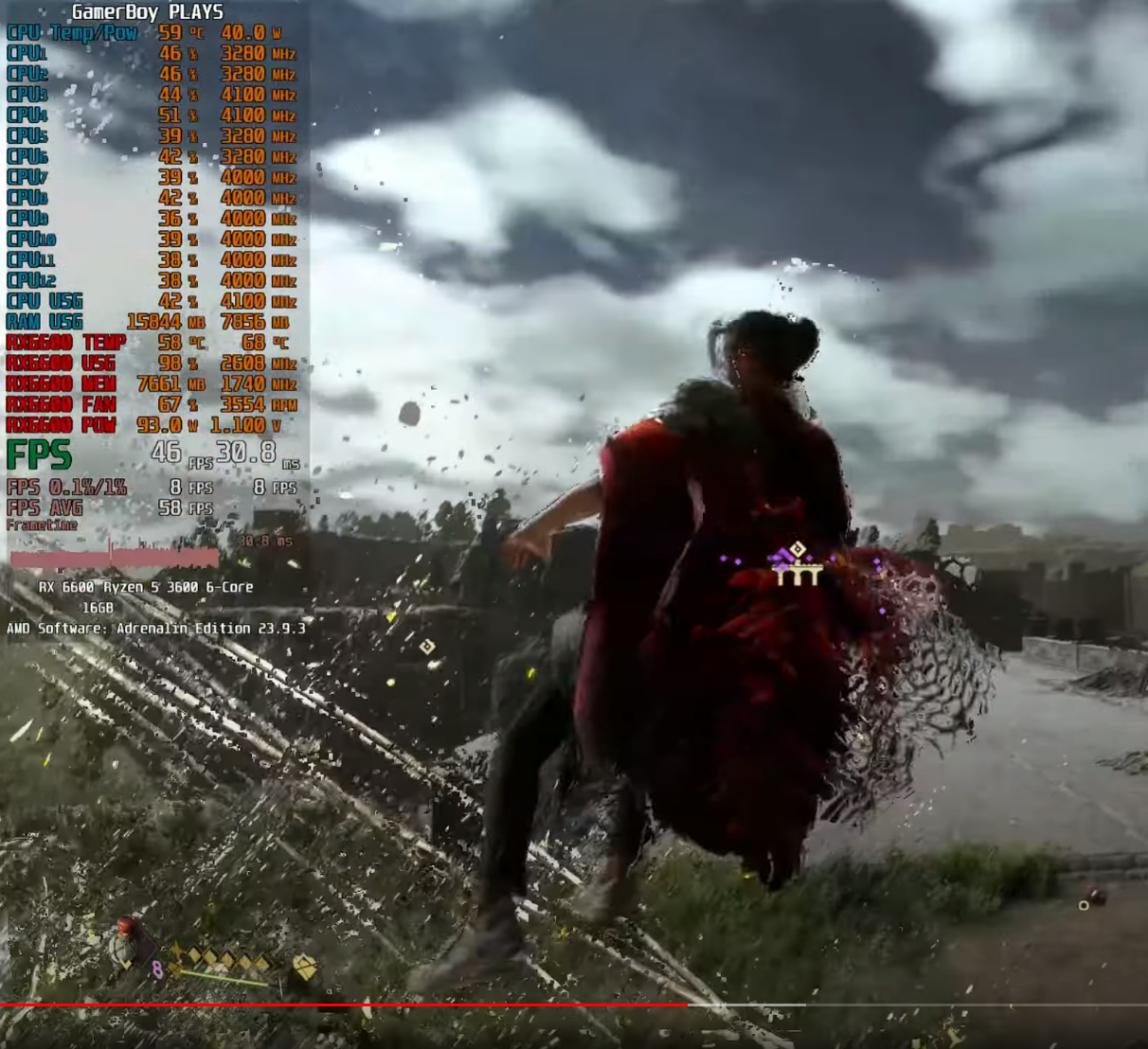

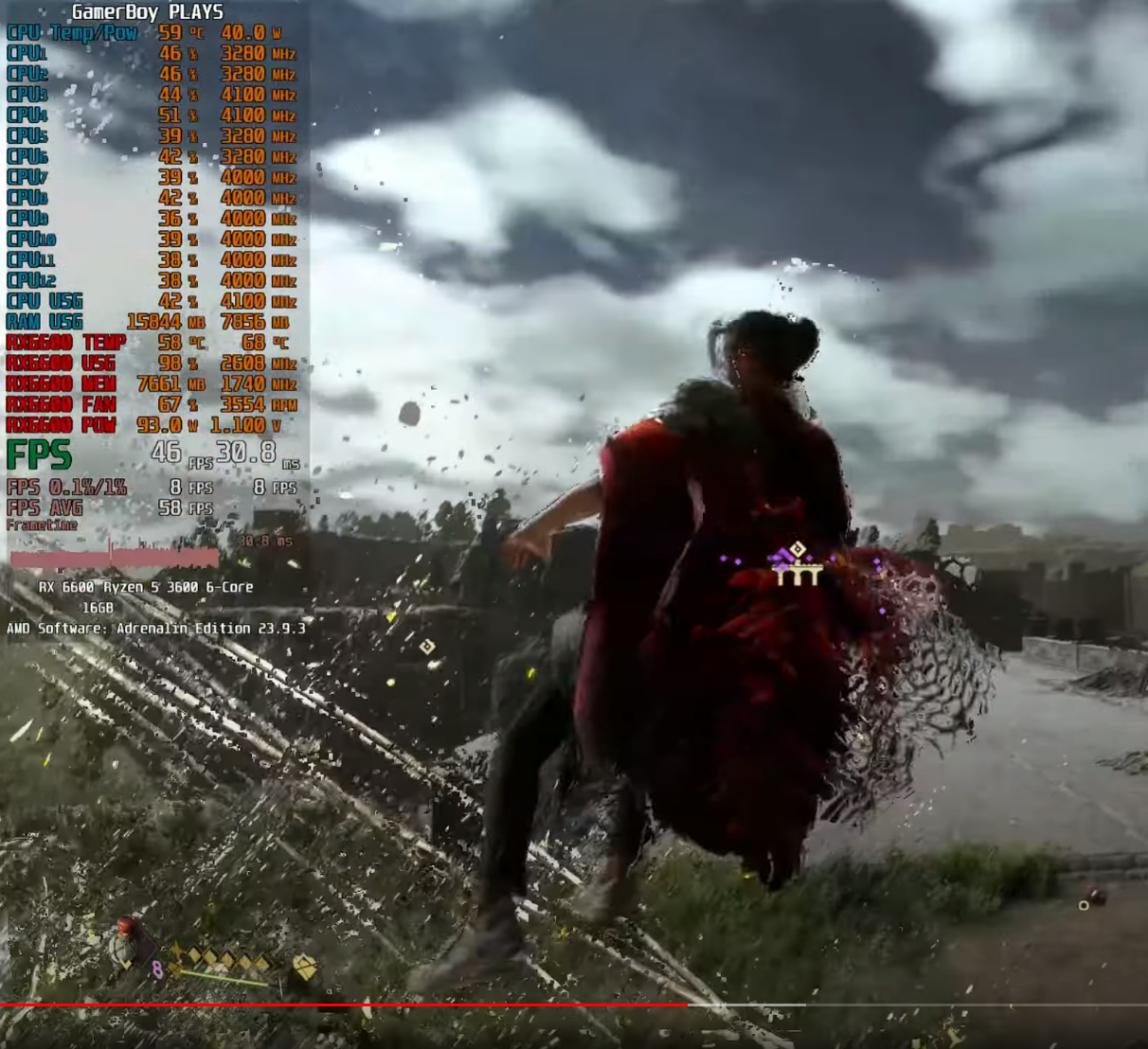

Hoping for some frame by frame analysis soon. There are a few YT videos already, but it's difficult to tell if I'm seeing compression artifacts, intended effects from the game (forspoken), or actual frame gen artifacts.

View: https://www.youtube.com/watch?v=GNNXaVA5Cl8

For example, this frame has a bunch of blocks around the character's head and in the sky, then there's some noticeable haloing around the alpha texture of her cloak:

Notice also that Aveum enables Anti-Lag+, unlike Forspoken which, as I see now, only supports regular Anti-Lag but not Anti-Lag+. So in every aspect, from latency to image quality to performance, please judge FSR 3.0 by Aveum, not by Forspoken.

FSR Off: 62fps

FSR/AA: 55fps (-11%)

FSR/AA+FG: 85fps (+37%)

FSR/Quality: 86fps (+38%)

FSR/Q+FG: 128fps (+106%)

FSR/Q+FG+AntiLag+: 124fps (+100%)

https://twitter.com/opinali/status/1707779389755166931
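For anyone who wants to sanity-check those percentages, here is a minimal Python sketch. The FPS figures are the ones posted above; the helper names (`percent_change`, `fg_multiplier`) are made up for illustration and are not part of any AMD tool. It also works out how much frame generation multiplied the displayed frame rate in this one sample.

```python
# Illustrative only: FPS values are copied from the post above, and the
# helper names are hypothetical, not from any AMD or benchmarking tool.

BASELINE_FPS = 62  # "FSR Off"

results = {
    "FSR/AA": 55,
    "FSR/AA+FG": 85,
    "FSR/Quality": 86,
    "FSR/Q+FG": 128,
    "FSR/Q+FG+AntiLag+": 124,
}


def percent_change(baseline_fps, fps):
    """Relative change versus the baseline, in percent."""
    return (fps - baseline_fps) / baseline_fps * 100.0


def fg_multiplier(fps_without_fg, fps_with_fg):
    """How much frame generation multiplied the displayed frame rate."""
    return fps_with_fg / fps_without_fg


for mode, fps in results.items():
    print(f"{mode}: {fps}fps ({percent_change(BASELINE_FPS, fps):+.1f}%)")

# Frame generation gain within the same upscaling mode.
print(f"FG gain at Native AA: {fg_multiplier(55, 85):.2f}x")
print(f"FG gain at Quality:   {fg_multiplier(86, 128):.2f}x")
```

The posted percentages match these results truncated toward zero rather than rounded (for example, 86fps works out to about +38.7%). In this sample frame generation gives roughly a 1.5x increase in displayed frame rate within each mode, not a full 2x.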

Armenius

Extremely [H]

- Joined

- Jan 28, 2014

- Messages

- 42,114

Immortals of Aveum also has DLSS Frame Generation, so a direct comparison can be made.

FSR 3 Runs Faster Than DLSS 3 in Immortals of Aveum but Stutters More

https://wccftech.com/fsr-3-runs-faster-than-dlss-3-in-immortals-of-aveum-but-stutters-more/

Really, FSR3 for Eve Online... the game runs on a graphing calculator, because 90% of your screen is made up of text windows and your actual game space is the other 10%.

Space Marine 2 will be the real showcase for it then

it's Nvidia's worst nightmare if FSR 3 matches or gets close in quality/performance to DLSS 3...FSR can be used on any GPU so it's important for Nvidia to maintain their lead in upscaling

I have no doubt that AMD will get equal performance, but I am highly skeptical about quality, and so far the one function that brings AMD on par looks to be Anti-Lag+, which is 7000 series only.

Starting to see tests popping up with the 4080s, 4090s and 7900 series parts. But I want to see it on last gen's 6000 and 3000 series, and we need it in more games; the two that currently have it aren't exactly good examples of a quality implementation of anything.

It will probably be better in some ways (the algorithm should have an easier time managing UI elements, dropping too big a change between two frames, etc.).

Immortals of Aveum is not an easy title for this; it tends to be quite GPU-taxed. Starfield may see a bigger FPS boost (or people testing with more average CPUs).

I would not be surprised if a lot of the DLSS 3 > FSR 3 image quality gap turns out to be about DLSS > FSR upscaling more than the generated frames.

https://www.dsogaming.com/pc-performance-analyses/forspoken-amd-fsr-3-0-benchmarks-impressions/

What’s also crucial to note is that we did not experience any major latency issues when using FSR 3.0 Frame Generation. Even with a 60fps baseline, the mouse movement was pretty good. Forspoken is a third-person game and does not require fast/precise movements. As such, FSR 3.0 Frame Generation appears to be working great here. I’d really love to see FSR 3.0 Frame Generation in a racing game as that’s one genre in which I immediately felt the extra latency of DLSS 3 Frame Generation. Nevertheless, this is a good start for AMD and FSR 3.0.

Unfortunately, this isn’t a perfect launch though. Right now, FSR 3.0 Frame Generation suffers from major frame pacing issues on both NVIDIA’s and AMD’s hardware. Not only that but we experienced major tearing issues on our G-Sync monitor. Due to these tearing issues, mouse movement never felt smooth. Furthermore, there are major stuttering and performance issues when using the in-game V-Sync option. So yeah, AMD needs to fix these FSR 3.0 Frame Generation issues as soon as possible.
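To make "frame pacing" concrete, here is a minimal sketch of how it could be quantified from a frametime capture. The function name `pacing_report`, the metric choices and the sample numbers are illustrative assumptions, not anything taken from the DSOGaming or WCCFTECH tests. It shows how a run can post a higher average FPS and still feel worse because consecutive frames alternate between short and long.

```python
from statistics import mean


def pacing_report(frame_times_ms):
    """Summarise per-frame times (in milliseconds) from a capture.

    Hypothetical helper for illustration only.
    """
    if len(frame_times_ms) < 2:
        raise ValueError("need at least two frame times")

    # Average FPS over the whole capture.
    avg_fps = 1000.0 / mean(frame_times_ms)

    # "1% low": average FPS over the slowest 1% of frames (at least one frame).
    slowest = sorted(frame_times_ms, reverse=True)
    n = max(1, len(slowest) // 100)
    one_percent_low_fps = 1000.0 / mean(slowest[:n])

    # Pacing jitter: mean absolute change between consecutive frame times.
    deltas = [abs(b - a) for a, b in zip(frame_times_ms, frame_times_ms[1:])]
    jitter_ms = mean(deltas)

    return {
        "avg_fps": avg_fps,
        "one_percent_low_fps": one_percent_low_fps,
        "jitter_ms": jitter_ms,
    }


# Made-up sample data: a steady run and a faster but unevenly paced run.
even = [10.0] * 100        # every frame takes 10 ms
uneven = [4.0, 14.0] * 50  # 9 ms on average, alternating short/long frames

print(pacing_report(even))
print(pacing_report(uneven))
```

In this made-up data the uneven run has the higher average FPS but a much larger frame-to-frame swing, which is the kind of result a "runs faster but stutters more" headline describes.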

https://www.dsogaming.com/pc-perfor...k-vs-nvidia-dlss-3-vs-amd-fsr-3-0-benchmarks/

Unfortunately, Immortals of Aveum suffers from the same FSR 3.0 issues that are in Forspoken. While in static images the quality of FSR 3.0’s upscaling is great, in motion things get really messy. When moving the camera, there is a lot more shimmering and aliasing with FSR 3.0. Moreover, the game suffers from major frame pacing and stuttery camera movement issues. To be honest, the game never really feels smooth, and that’s something that AMD has to address as soon as possible.

What’s also interesting here is that you can enable NVIDIA Reflex when using AMD FSR 3.0. This is a must-have for all future FSR 3.0 games. With NVIDIA Reflex, we didn’t really feel the extra input latency of FSR 3.0 Frame Generation. To be honest, I was expecting AMD FSR 3.0 Frame Generation to feel laggy. However, both Forspoken and Immortals of Aveum feel responsive.

The only way we could resolve these stuttery movement issues was by lowering our monitor refresh rate to 120Hz and then using VSync from NVIDIA’s Control Panel. However, this workaround introduces MAJOR input latency issues. Both Forspoken and Immortals of Aveum feel laggy with VSync.
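A rough back-of-the-envelope for why capping to the display can feel laggy, assuming frame generation inserts one generated frame per rendered frame and that VSync caps the output at the refresh rate. The helper `frame_gen_numbers` is hypothetical, and this is a sketch of the arithmetic rather than a measurement of either game.

```python
def frame_gen_numbers(display_hz, generated_per_rendered=1):
    """Return (rendered FPS, rendered frame time in ms) when output is capped.

    Assumes the displayed rate equals display_hz and that each rendered
    frame is followed by `generated_per_rendered` generated frames.
    """
    rendered_fps = display_hz / (1 + generated_per_rendered)
    rendered_frame_ms = 1000.0 / rendered_fps
    return rendered_fps, rendered_frame_ms


fps, ms = frame_gen_numbers(120)
print(f"rendered: {fps:.0f} fps, {ms:.1f} ms per real frame")
```

Under those assumptions a 120Hz cap leaves only 60 real frames per second, so the game reacts to input every ~16.7 ms at best, and an interpolated frame can only be shown once the next real frame exists. Any VSync queueing comes on top of that.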

Although FSR 3.0 Frame Generation can push higher framerates, the overall gaming experience is worse than DLSS 3 Frame Generation. DLSS 3 Frame Generation currently wins in both smoothness and image quality. And, if you force VSync in FSR 3.0, DLSS 3 Frame Generation will also win in responsiveness.

We will need to wait for the DF/Hardware Unboxeds of the world to do something more in-depth than a couple of hours of experimenting, and for at least the first patches.

MAJOR input latency issues? Wat? I get the feeling that there is something wrong with these reviews. WCCFTECH especially must have done something totally wrong. I get a locked 116 FPS with DLSS3 at 4K maxed out, with a GPU usage of around 75-91%, and in the exact same location a locked 120 FPS with FSR3 with even slightly lower GPU usage, while using the QUALITY PRESET. I see really great frametimes, even in action scenes. There is only some classic traversal/loading stutter.

There is also a bug in the form of a heavy performance decrease when you switch back to DLSS3 and restart the game. Once you are in-game again, simply switch the DLSS quality preset from quality to balanced or whatever and back to the level you want to use. You go back to high performance then:

bug:

View: https://i.imgur.com/vtlnmgF.png

fixed:

View: https://i.imgur.com/b1lUcYR.png

This does not happen with FSR3. Although, after seeing WCCFTECH's video, I'm not so sure anymore. His performance is night and day worse than mine. When I use FSR3 the frametime graph is thick too, but I don't get frametime hiccups like he gets.

The performance is a little bit higher on average with FSR3, but DLSS3 is more stable in motion (no flickering, and mission markers stay stable, as do other things like this guard sign when you move the camera: https://www.ggrecon.com/media/os1c3tv1/immortals-of-aveum-ring-combat.jpg).

In terms of smoothness and latency both feel great in Immortals. Maybe DLSS3 has a slight edge because it can handle Gsync, but man... the difference is very minor IMO. Playing with a controller feels great with both.

I'm using SpecialK for HDR10 injection. Man, does this look MILES better. I can't describe how much I hate SDR gaming lol. I checked performance with and without SpecialK btw. It's the same.

If AMD can tweak the motion issues a little bit more and add VRR support, I would be very impressed.

Hoping for some frame-by-frame analysis soon. There are a few YT videos already, but it's difficult to tell if I'm seeing compression artifacts, intended effects from the game (Forspoken), or actual frame gen artifacts.

View: https://www.youtube.com/watch?v=GNNXaVA5Cl8

For example, this frame has a bunch of blocks around the character's head and in the sky, then there's some noticeable haloing around the alpha texture of her cloak:

View attachment 602130

Looks like an oil painting created by AI.
