chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,580

AMD changed the CPU market with Zen and Intel had to catch up. Intel's roadmaps would have kept us on 4 cores for a fair bit longer on the regular consumer sockets. With Zen actually proving to be a solid CPU with a bunch of cores, Intel had to compress their roadmaps and revamp their product categories.

That isn't what I said. I said "Gaming is pretty much the only reason people care about high end PCs." Get it right next time if you're capable of that (which I doubt).

What I said is true. The high end CPU market is driven almost entirely by gaming. Workstations tend to be higher core count/lower clock speed machines.

So I'm glad we've confirmed that I'm right and you're wrong. Thanks!

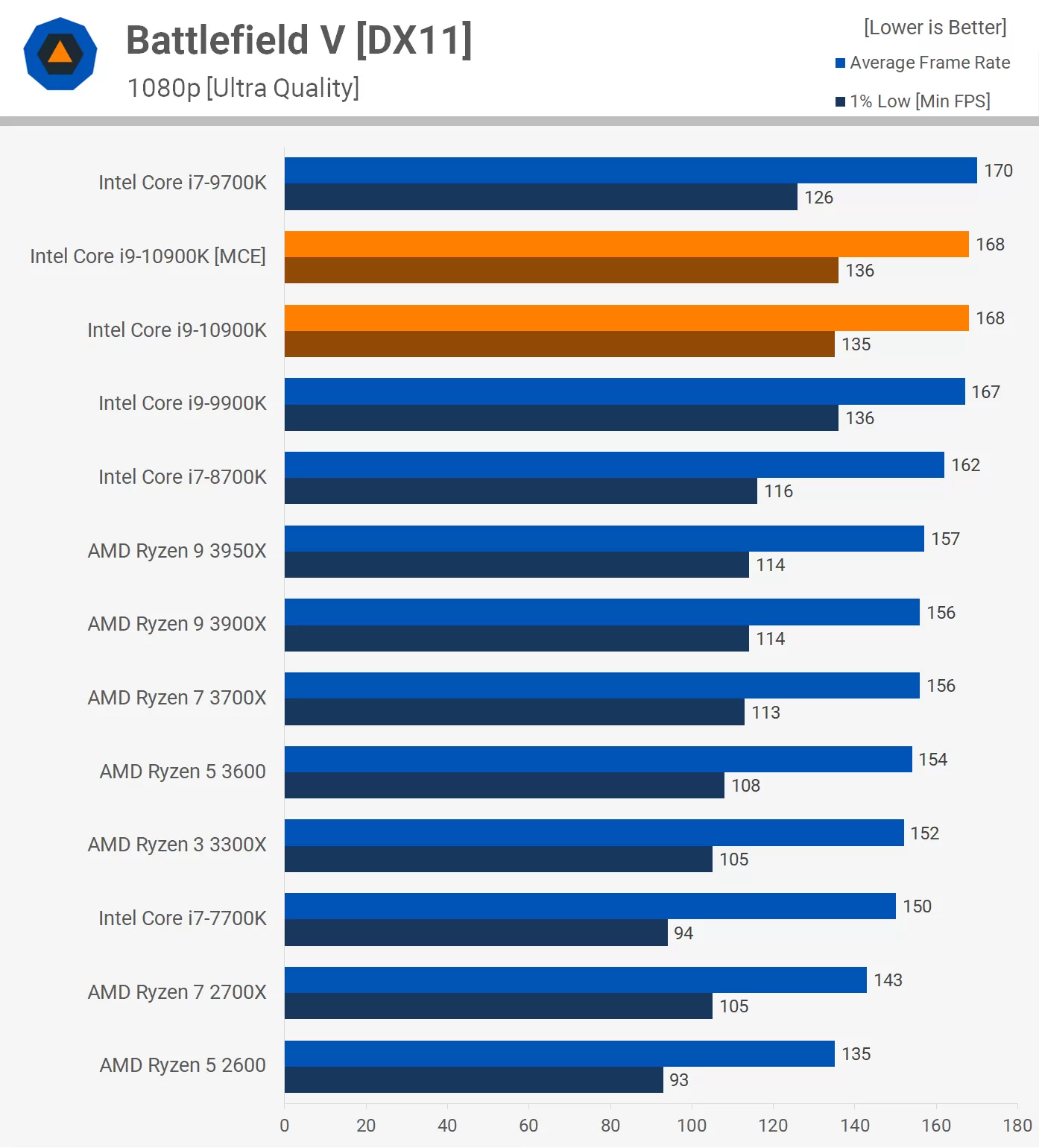

Intel is better in games, it's better in video game emulators, and given the low core count in the "next gen" consoles, Intel already has enough cores for that generation of ports. The best case scenario for AMD is to catch up, which, given Intel's utter failure over the past few years, is utterly pathetic.


Indeed, Intel had a gaming advantage and still has a gaming advantage. But that is literally their only clear advantage.

Intel only just now sort of caught up with the 10 series. The 10-core has enough megahertz on tap that it can very nearly keep pace with AMD's 12-core in workloads which scale across cores. But AMD also has a 16-core (we are talking about consumer PCs here).

And... Intel doesn't have PCIe 4.0, and it is as yet unclear when they will. AMD has had it for over a year. Intel does have Optane, but that is so crazy expensive, it's not even worth talking about as a general consumer product.

However, I still have to give props for Quick Sync. AMD really needs to offer something like that.
